Ahmet Dundar Sezer

All-Digital LoS MIMO with Low-Precision Analog-to-Digital Conversion

Aug 02, 2021

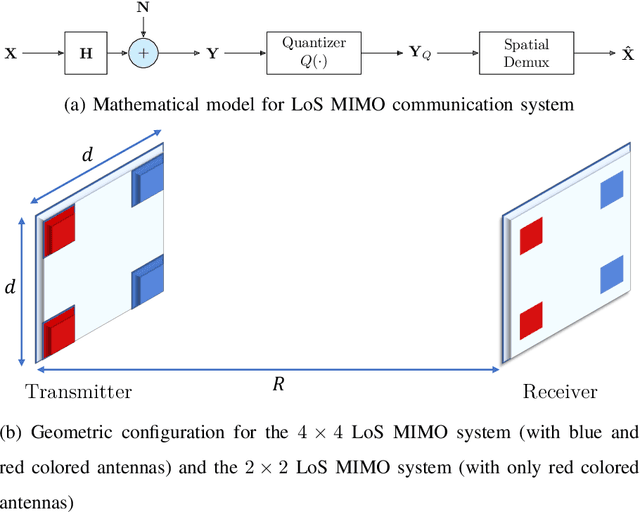

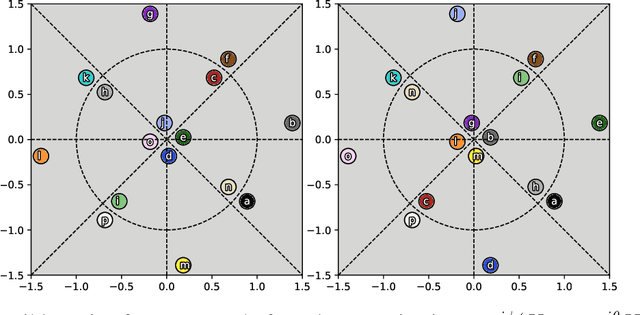

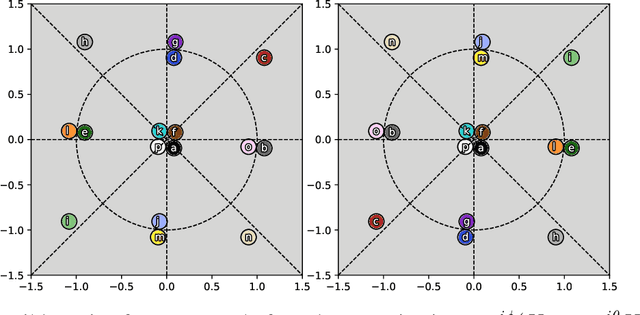

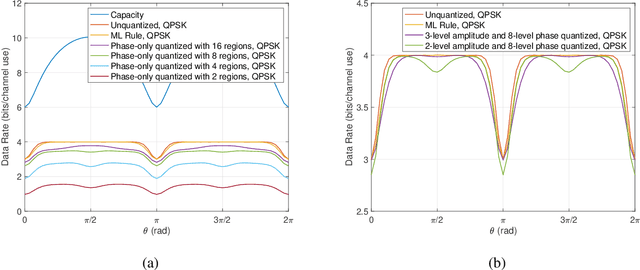

Abstract: Line-of-sight (LoS) multi-input multi-output (MIMO) systems exhibit attractive scaling properties as carrier frequency increases: for a fixed form factor and range, the spatial degrees of freedom increase quadratically for 2D arrays, in addition to the typically linear increase in available bandwidth. In this paper, we investigate whether modern all-digital baseband signal processing architectures can be devised for such regimes, given the difficulty of analog-to-digital conversion for large bandwidths. We propose low-precision quantizer designs and accompanying spatial demultiplexing algorithms, considering 2x2 LoS MIMO with QPSK for analytical insight, and 4x4 MIMO with QPSK and 16QAM for performance evaluation. Unlike prior work, channel state information is utilized only at the receiver (i.e., transmit precoding is not employed). We investigate quantizers with regular structure whose high-SNR mutual information approaches that of an unquantized system. We prove that amplitude-phase quantization is necessary to attain this benchmark; phase-only quantization falls short. We show that quantizers based on maximizing per-antenna output entropy perform better than standard Minimum Mean Squared Quantization Error (MMSQE) quantization. For spatial demultiplexing with severely quantized observations, we introduce the novel concept of virtual quantization, which, combined with linear detection, provides reliable demodulation at significantly reduced complexity compared to maximum likelihood detection.
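
The idea of choosing a quantizer to maximize per-antenna output entropy can be illustrated with a minimal scalar sketch. This is not the paper's design (which uses regularly structured amplitude-phase quantizers); it only assumes a toy real-valued observation and places thresholds at empirical quantiles so that every output level is equally likely. The baseline here is a plain uniform quantizer, not MMSQE, and all names (`equiprobable_thresholds`, `output_entropy_bits`, the signal model) are hypothetical.

```python
import numpy as np

def equiprobable_thresholds(samples, levels):
    """Thresholds at empirical quantiles, so each of `levels` bins is hit equally often."""
    probs = np.arange(1, levels) / levels
    return np.quantile(samples, probs)

def output_entropy_bits(samples, thresholds, levels):
    """Empirical entropy (in bits) of the quantizer output."""
    bins = np.digitize(samples, thresholds)
    counts = np.bincount(bins, minlength=levels)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log2(p)).sum())

rng = np.random.default_rng(0)
n = 20000
# Assumed toy observation: two superposed binary streams plus Gaussian noise.
x1 = rng.choice([-1.0, 1.0], size=n)
x2 = rng.choice([-1.0, 1.0], size=n)
y = x1 + 0.5 * x2 + 0.5 * rng.standard_normal(n)

levels = 4  # 2-bit quantizer
t_eq = equiprobable_thresholds(y, levels)
# Uniform baseline: equally spaced thresholds over the observed range.
t_uniform = np.linspace(y.min(), y.max(), levels + 1)[1:-1]

h_eq = output_entropy_bits(y, t_eq, levels)
h_uniform = output_entropy_bits(y, t_uniform, levels)
print(f"equiprobable: {h_eq:.3f} bits, uniform: {h_uniform:.3f} bits")
```

With equiprobable bins the output entropy reaches its upper bound of log2(levels) bits, whereas the uniform quantizer wastes its outer levels on rarely visited tails.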

Sparse Coding Frontend for Robust Neural Networks

Apr 12, 2021

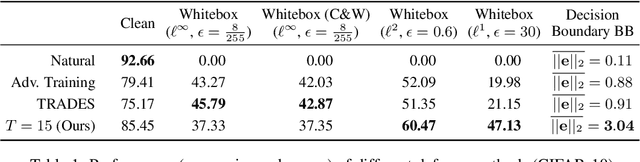

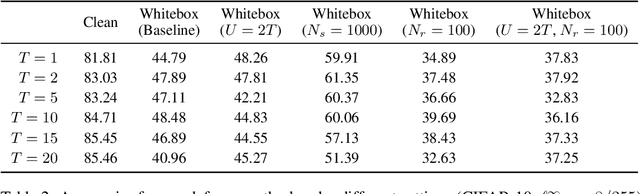

Abstract: Deep Neural Networks are known to be vulnerable to small, adversarially crafted perturbations. The current most effective defense methods against these adversarial attacks are variants of adversarial training. In this paper, we introduce a radically different defense trained only on clean images: a sparse coding based frontend which significantly attenuates adversarial attacks before they reach the classifier. We evaluate our defense on the CIFAR-10 dataset under a wide range of attack types (including L∞, L2, and L1 bounded attacks), demonstrating its promise as a general-purpose approach for defense.

A Neuro-Inspired Autoencoding Defense Against Adversarial Perturbations

Dec 21, 2020

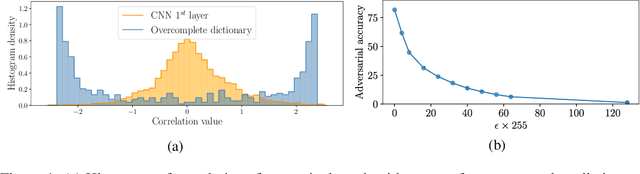

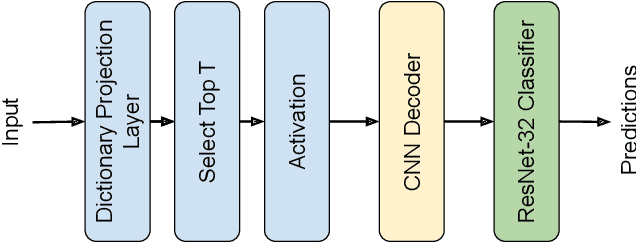

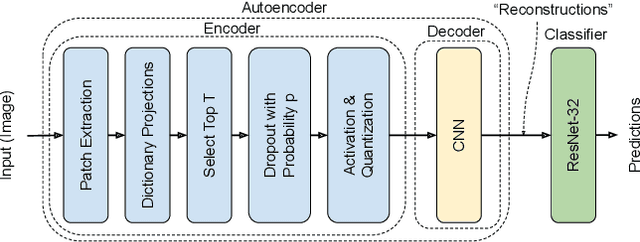

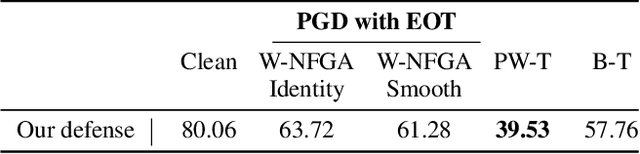

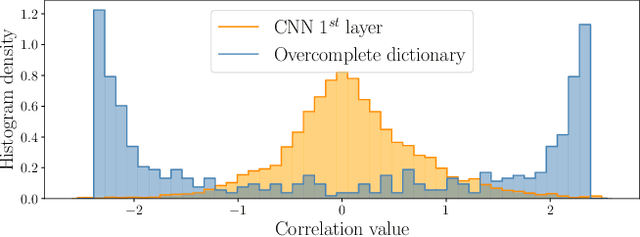

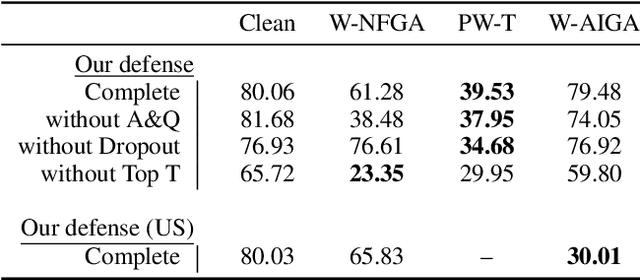

Abstract: Deep Neural Networks (DNNs) are vulnerable to adversarial attacks: carefully constructed perturbations to an image can seriously impair classification accuracy, while being imperceptible to humans. While there has been a significant amount of research on defending against such attacks, most defenses based on systematic design principles have been defeated by appropriately modified attacks. For a fixed set of data, the most effective current defense is to train the network using adversarially perturbed examples. In this paper, we investigate a radically different, neuro-inspired defense mechanism, starting from the observation that human vision is virtually unaffected by adversarial examples designed for machines. We aim to reject L∞-bounded adversarial perturbations before they reach a classifier DNN, using an encoder with characteristics commonly observed in biological vision: sparse overcomplete representations, randomness due to synaptic noise, and drastic nonlinearities. Encoder training is unsupervised, using standard dictionary learning. A CNN-based decoder restores the size of the encoder output to that of the original image, enabling the use of a standard CNN for classification. Our nominal design is to train the decoder and classifier together in standard supervised fashion, but we also consider unsupervised decoder training based on a regression objective (as in a conventional autoencoder) with separate supervised training of the classifier. Unlike adversarial training, all training is based on clean images. Our experiments on CIFAR-10 show performance competitive with state-of-the-art defenses based on adversarial training, and point to the promise of neuro-inspired techniques for the design of robust neural networks. In addition, we provide results for a subset of the ImageNet dataset to verify that our approach scales to larger images.
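
The three encoder characteristics named in the abstract (sparse overcomplete representation, randomness, drastic nonlinearity) can be sketched for a single image patch as follows. This is only an illustration under stated assumptions: the dictionary is random rather than learned, and the top-k selection, the point at which noise is injected, the ternary nonlinearity, and every name and parameter (`encode_patch`, `k`, `noise_std`) are hypothetical choices, not details taken from the paper.

```python
import numpy as np

def encode_patch(patch, dictionary, k, noise_std, rng):
    """Toy encoder: project onto atoms, keep the top k, add noise, then ternarize."""
    coeffs = dictionary @ patch                     # correlation with each atom
    keep = np.argsort(np.abs(coeffs))[-k:]          # indices of the k strongest atoms
    noisy = coeffs[keep] + noise_std * rng.standard_normal(k)
    code = np.zeros(dictionary.shape[0])
    code[keep] = np.sign(noisy)                     # drastic nonlinearity: values in {-1, 0, +1}
    return code

rng = np.random.default_rng(0)
patch_dim, n_atoms = 16, 64                         # overcomplete: 4x more atoms than dimensions
# Assumption: a random unit-norm dictionary stands in for one obtained by dictionary learning.
dictionary = rng.standard_normal((n_atoms, patch_dim))
dictionary /= np.linalg.norm(dictionary, axis=1, keepdims=True)

patch = rng.standard_normal(patch_dim)
code = encode_patch(patch, dictionary, k=5, noise_std=0.01, rng=rng)
print(np.count_nonzero(code), "active atoms out of", n_atoms)
```

Because only the identities and signs of a few strongly activated atoms survive, a small bounded perturbation of the patch has limited room to change the code, which is the intuition behind using such a frontend as a defense.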
