Abhishek K Dubey

Computer-aided abnormality detection in chest radiographs in a clinical setting via domain-adaptation

Dec 19, 2020

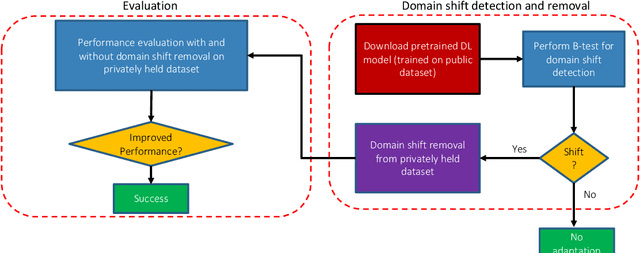

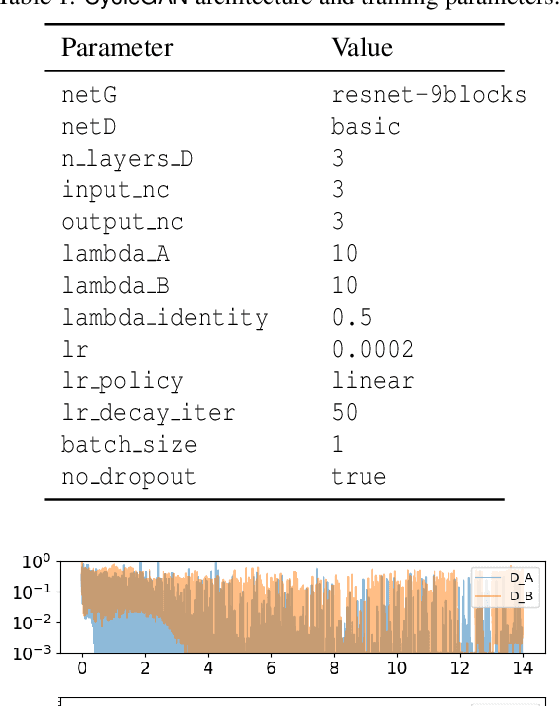

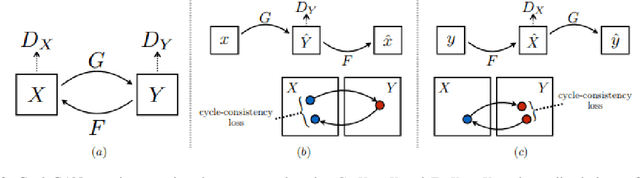

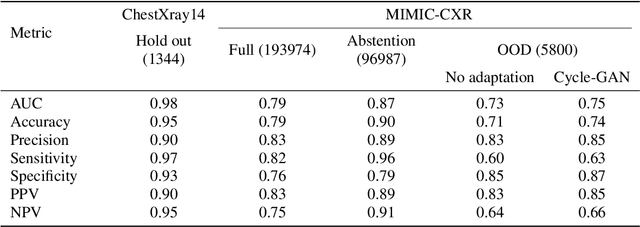

Abstract:Deep learning (DL) models are being deployed at medical centers to aid radiologists for diagnosis of lung conditions from chest radiographs. Such models are often trained on a large volume of publicly available labeled radiographs. These pre-trained DL models' ability to generalize in clinical settings is poor because of the changes in data distributions between publicly available and privately held radiographs. In chest radiographs, the heterogeneity in distributions arises from the diverse conditions in X-ray equipment and their configurations used for generating the images. In the machine learning community, the challenges posed by the heterogeneity in the data generation source is known as domain shift, which is a mode shift in the generative model. In this work, we introduce a domain-shift detection and removal method to overcome this problem. Our experimental results show the proposed method's effectiveness in deploying a pre-trained DL model for abnormality detection in chest radiographs in a clinical setting.

Model Reduction of Shallow CNN Model for Reliable Deployment of Information Extraction from Medical Reports

Jul 31, 2020

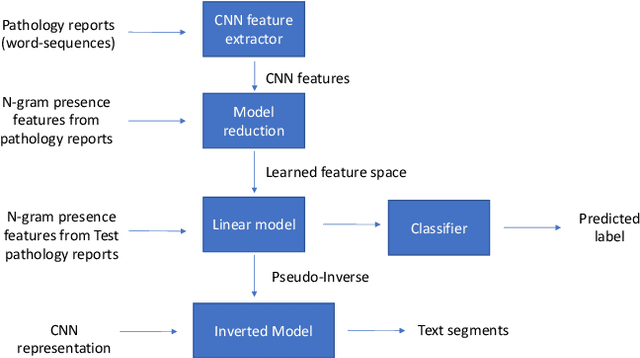

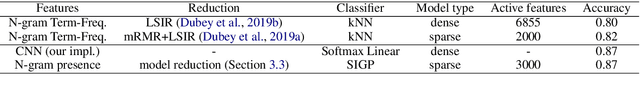

Abstract:Shallow Convolution Neural Network (CNN) is a time-tested tool for the information extraction from cancer pathology reports. Shallow CNN performs competitively on this task to other deep learning models including BERT, which holds the state-of-the-art for many NLP tasks. The main insight behind this eccentric phenomenon is that the information extraction from cancer pathology reports require only a small number of domain-specific text segments to perform the task, thus making the most of the texts and contexts excessive for the task. Shallow CNN model is well-suited to identify these key short text segments from the labeled training set; however, the identified text segments remain obscure to humans. In this study, we fill this gap by developing a model reduction tool to make a reliable connection between CNN filters and relevant text segments by discarding the spurious connections. We reduce the complexity of shallow CNN representation by approximating it with a linear transformation of n-gram presence representation with a non-negativity and sparsity prior on the transformation weights to obtain an interpretable model. Our approach bridge the gap between the conventionally perceived trade-off boundary between accuracy on the one side and explainability on the other by model reduction.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge