Aayush Nandkeolyar

MLPro: A System for Hosting Crowdsourced Machine Learning Challenges for Open-Ended Research Problems

Apr 04, 2022

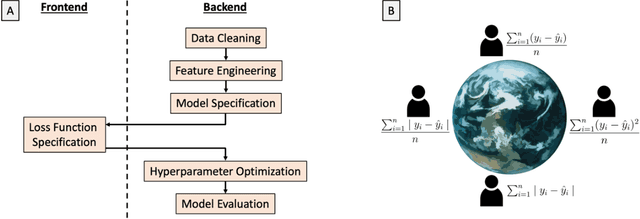

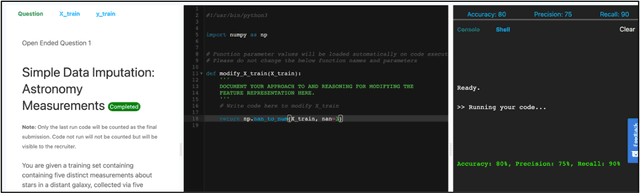

Abstract: The task of developing a machine learning (ML) model for a particular problem is inherently open-ended, and there is an unbounded set of possible solutions. Steps of the ML development pipeline, such as feature engineering, loss function specification, data imputation, and dimensionality reduction, require the engineer to consider an extensive and often infinite array of possibilities. Successfully identifying high-performing solutions for an unfamiliar dataset or problem requires a mix of mathematical prowess and creativity applied towards inventing and repurposing novel ML methods. Here, we explore the feasibility of hosting crowdsourced ML challenges to facilitate a breadth-first exploration of open-ended research problems, thereby expanding the search space of problem solutions beyond what a typical ML team could viably investigate. We develop MLPro, a system which combines the notion of open-ended ML coding problems with the concept of an automatic online code judging platform. To conduct a pilot evaluation of this paradigm, we crowdsource several open-ended ML challenges to ML and data science practitioners. We describe results from two separate challenges. We find that for sufficiently unconstrained and complex problems, many experts submit similar solutions, but some experts provide unique solutions which outperform the "typical" solution class. We suggest that automated expert crowdsourcing systems such as MLPro have the potential to accelerate ML engineering creativity.
