Aashi Jindal

Enhash: A Fast Streaming Algorithm For Concept Drift Detection

Nov 07, 2020

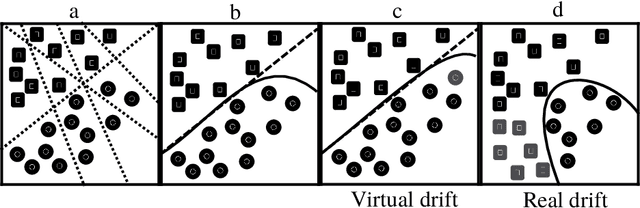

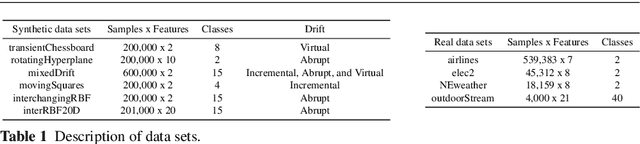

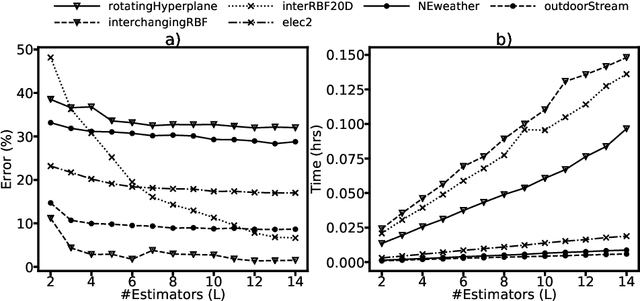

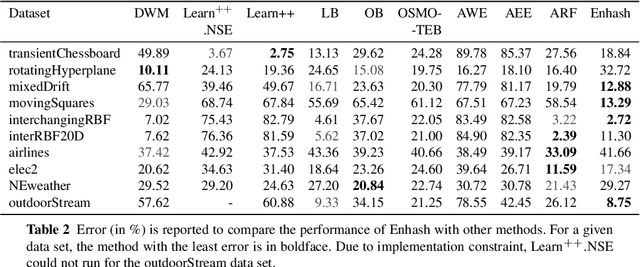

Abstract:We propose Enhash, a fast ensemble learner that detects \textit{concept drift} in a data stream. A stream may consist of abrupt, gradual, virtual, or recurring events, or a mixture of various types of drift. Enhash employs projection hash to insert an incoming sample. We show empirically that the proposed method has competitive performance to existing ensemble learners in much lesser time. Also, Enhash has moderate resource requirements. Experiments relevant to performance comparison were performed on 6 artificial and 4 real data sets consisting of various types of drifts.

Guided Random Forest and its application to data approximation

Sep 02, 2019

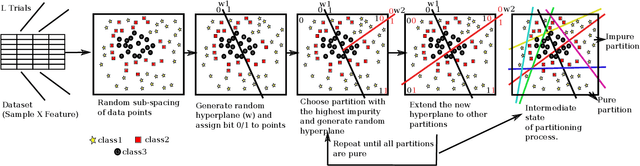

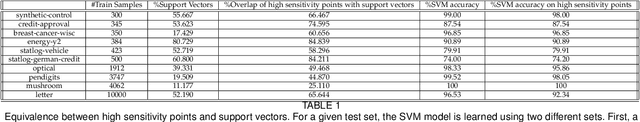

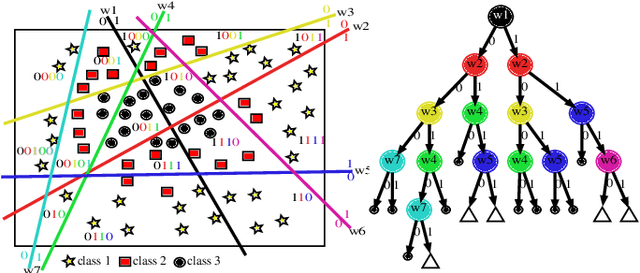

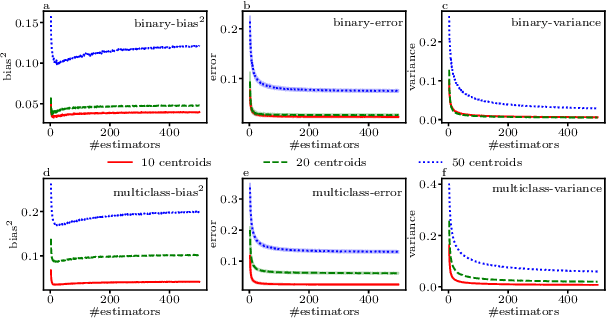

Abstract:We present a new way of constructing an ensemble classifier, named the Guided Random Forest (GRAF) in the sequel. GRAF extends the idea of building oblique decision trees with localized partitioning to obtain a global partitioning. We show that global partitioning bridges the gap between decision trees and boosting algorithms. We empirically demonstrate that global partitioning reduces the generalization error bound. Results on 115 benchmark datasets show that GRAF yields comparable or better results on a majority of datasets. We also present a new way of approximating the datasets in the framework of random forests.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge