Aadesh Neupane

Designing Behavior Trees from Goal-Oriented LTLf Formulas

Jul 12, 2023

Abstract:Temporal logic can be used to formally specify autonomous agent goals, but synthesizing planners that guarantee goal satisfaction can be computationally prohibitive. This paper shows how to turn goals specified using a subset of finite trace Linear Temporal Logic (LTL) into a behavior tree (BT) that guarantees that successful traces satisfy the LTL goal. Useful LTL formulas for achievement goals can be derived using achievement-oriented task mission grammars, leading to missions made up of tasks combined using LTL operators. Constructing BTs from LTL formulas leads to a relaxed behavior synthesis problem in which a wide range of planners can implement the action nodes in the BT. Importantly, any successful trace induced by the planners satisfies the corresponding LTL formula. The usefulness of the approach is demonstrated in two ways: a) exploring the alignment between two planners and LTL goals, and b) solving a sequential key-door problem for a Fetch robot.

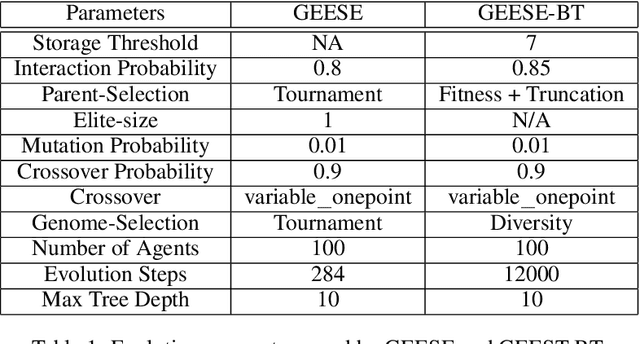

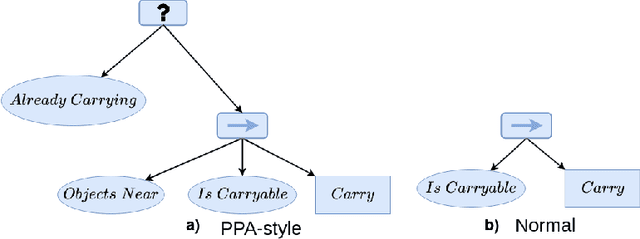

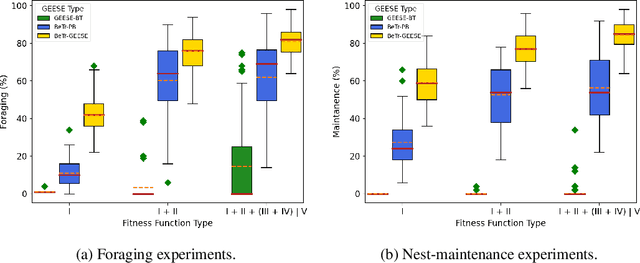

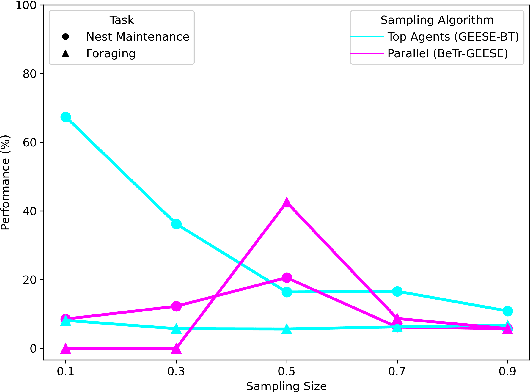

Efficiently Evolving Swarm Behaviors Using Grammatical Evolution With PPA-style Behavior Trees

Mar 29, 2022

Abstract:Evolving swarm behaviors with artificial agents is computationally expensive and challenging. Because reward structures are often sparse in swarm problems, only a few simulations among hundreds evolve successful swarm behaviors. Additionally, swarm evolutionary algorithms typically rely on ad hoc fitness structures, and novel fitness functions need to be designed for each swarm task. This paper evolves swarm behaviors by systematically combining Postcondition-Precondition-Action (PPA) canonical Behavior Trees (BT) with Grammatical Evolution. The PPA structure replaces ad hoc reward structures with systematic postcondition checks, which allows a common grammar to learn solutions to different tasks using only environmental cues and BT feedback. The static performance of learned behaviors is poor because no agent learns all necessary subtasks, but performance while evolving is excellent because agents can quickly change behaviors in new contexts. The evolving algorithm succeeded in 75% of learning trials for both foraging and nest maintenance tasks, an eight-fold improvement over prior work.
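The PPA structure mentioned in the abstract can be illustrated with a minimal sketch. It assumes the common reading of a PPA subtree as a selector over a postcondition check and a precondition-then-action sequence; the node classes, status strings, and the `ppa` helper are hypothetical names chosen for illustration and are not taken from the paper.

```python
from typing import Callable, Dict, List

# Hypothetical status values for this sketch.
SUCCESS, FAILURE, RUNNING = "success", "failure", "running"
State = Dict[str, bool]


class Condition:
    """Leaf that succeeds when a boolean flag in the state is true."""

    def __init__(self, key: str) -> None:
        self.key = key

    def tick(self, state: State) -> str:
        return SUCCESS if state.get(self.key, False) else FAILURE


class Action:
    """Leaf that applies an effect to the state and reports RUNNING."""

    def __init__(self, effect: Callable[[State], None]) -> None:
        self.effect = effect

    def tick(self, state: State) -> str:
        self.effect(state)
        return RUNNING


class Sequence:
    """Ticks children in order; stops at the first non-SUCCESS status."""

    def __init__(self, children: List) -> None:
        self.children = children

    def tick(self, state: State) -> str:
        for child in self.children:
            status = child.tick(state)
            if status != SUCCESS:
                return status
        return SUCCESS


class Selector:
    """Ticks children in order; stops at the first non-FAILURE status."""

    def __init__(self, children: List) -> None:
        self.children = children

    def tick(self, state: State) -> str:
        for child in self.children:
            status = child.tick(state)
            if status != FAILURE:
                return status
        return FAILURE


def ppa(post: str, pre: str, effect: Callable[[State], None]) -> Selector:
    """Assumed PPA shape: check the postcondition first, otherwise
    check the precondition and run the action."""
    return Selector([Condition(post), Sequence([Condition(pre), Action(effect)])])
```

In this sketch, a tree built with `ppa("food_at_hub", "has_food", effect)` returns `RUNNING` while the action is being applied, `SUCCESS` once the postcondition flag is set, and `FAILURE` when the precondition does not hold. The postcondition check is what would stand in for a task-specific reward signal.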

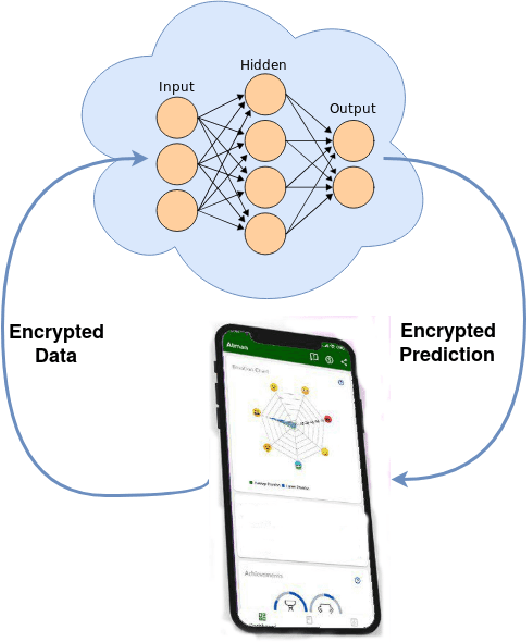

A brief history on Homomorphic learning: A privacy-focused approach to machine learning

Sep 11, 2020

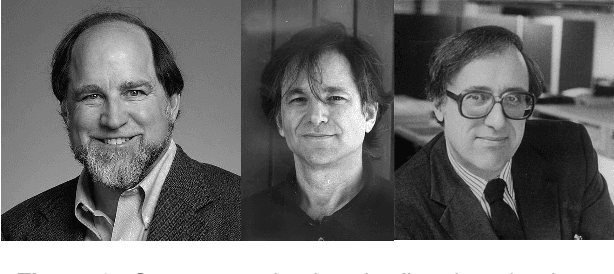

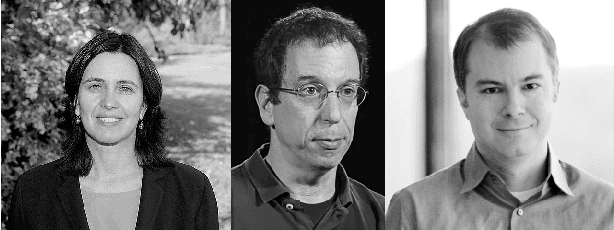

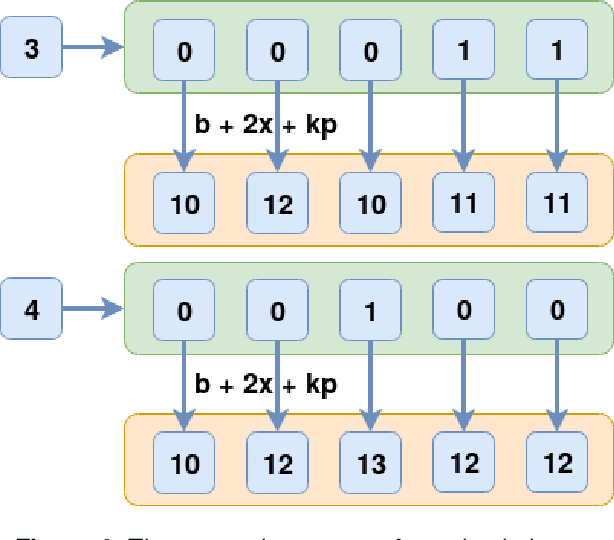

Abstract:Cryptography and data science research grew exponentially with the internet boom. Legacy encryption techniques force users to make a trade-off between usability, convenience, and security. Encryption makes valuable data inaccessible, as it needs to be decrypted each time to perform any operation. Billions of dollars could be saved, and millions of people could benefit from cryptography methods that don't compromise between usability, convenience, and security. Homomorphic encryption is one such paradigm that allows running arbitrary operations on encrypted data. It enables us to run any sophisticated machine learning algorithm without access to the underlying raw data. Thus, homomorphic learning provides the ability to gain insights from sensitive data that has been neglected due to various governmental and organizational privacy rules. In this paper, we trace back the ideas of homomorphic learning formally posed by Ronald L. Rivest and Len Adleman as "Can we compute upon encrypted data?" in their 1978 paper. Then we gradually follow the ideas sprouting in the brilliant minds of Shafi Goldwasser, Kristin Lauter, Dan Boneh, Tomas Sander, Donald Beaver, and Craig Gentry to address that vital question. It took more than 30 years of collective effort to finally find the answer "yes" to that important question.
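As a small illustration of what computing on encrypted data means, the sketch below uses unpadded textbook RSA, which is multiplicatively homomorphic: the product of two ciphertexts decrypts to the product of the plaintexts. This is only a partial homomorphism with toy, insecure parameters chosen here for illustration; it is not the fully homomorphic schemes the abstract goes on to discuss, and the function names are hypothetical.

```python
# Toy textbook-RSA parameters (insecure, illustration only).
P, Q = 61, 53
N = P * Q              # modulus, 3233
E = 17                 # public exponent
D = 2753               # private exponent: E * D = 1 mod (P-1)*(Q-1)


def encrypt(m: int) -> int:
    """Unpadded RSA encryption: m^E mod N."""
    return pow(m, E, N)


def decrypt(c: int) -> int:
    """Unpadded RSA decryption: c^D mod N."""
    return pow(c, D, N)


def multiply_encrypted(c1: int, c2: int) -> int:
    """Multiply two ciphertexts without ever decrypting them."""
    return (c1 * c2) % N


# The party doing the multiplication sees only ciphertexts.
c_product = multiply_encrypted(encrypt(7), encrypt(3))
product = decrypt(c_product)   # equals 7 * 3, since 21 < N
```

The property holds because (a^E)(b^E) = (ab)^E mod N. It supports multiplication only, which is why schemes that handle arbitrary operations took far longer to find.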

Slogatron: Advanced Wealthiness Generator

Aug 09, 2018

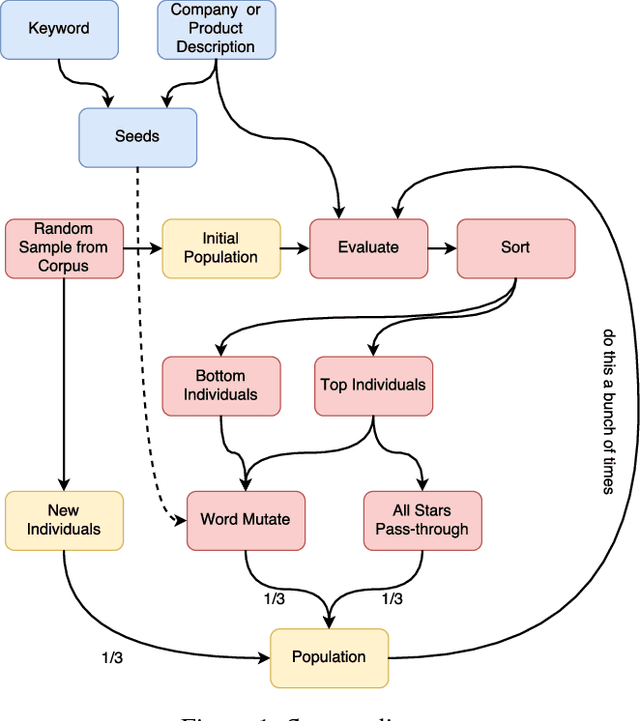

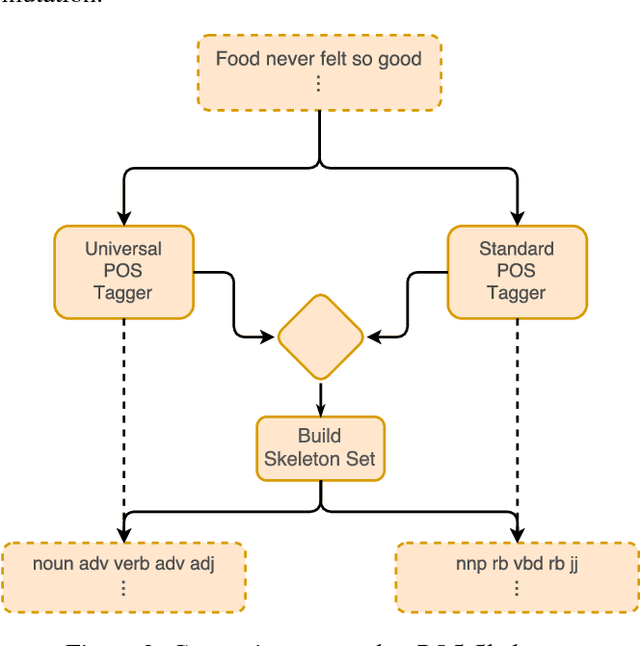

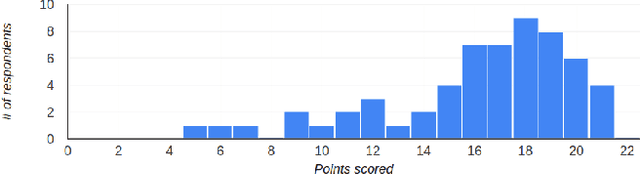

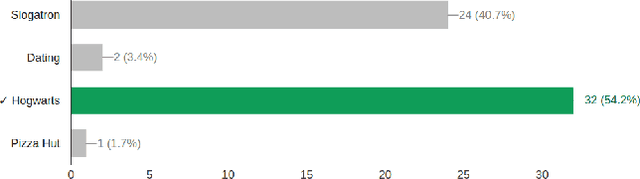

Abstract:Creating catchy slogans is a demanding and clearly creative job for ad agencies. The process of slogan creation by humans involves finding key concepts of the company and its products, and developing a memorable short phrase to describe the key concept. We attempt to follow the same sequence, but with an evolutionary algorithm. A user inputs a paragraph describing the company or product to be promoted. The system randomly samples initial slogans from a corpus of existing slogans. The initial slogans are then iteratively mutated and improved using an evolutionary algorithm. Mutation randomly replaces words in an individual with words from the input paragraphs. Internal evaluation measures a combination of grammatical correctness and semantic similarity to the input paragraphs. Subjective analysis of output slogans leads to the conclusion that the algorithm certainly outputs valuable slogans. External evaluation found that the slogans were somewhat successful in conveying a message, because humans were generally able to select the correct promoted item given a slogan.
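The loop described in the abstract (sample slogans from a corpus, mutate by swapping in words from the input paragraph, keep the better candidates) can be sketched roughly as follows. The fitness function here is a deliberately crude stand-in, the fraction of slogan words that appear in the paragraph, and does not reproduce the paper's grammatical-correctness and semantic-similarity measures. All function names, parameters, and the selection scheme are assumptions made for illustration.

```python
import random
from typing import List, Set


def mutate(slogan: List[str], vocab: List[str], rng: random.Random) -> List[str]:
    """Replace one randomly chosen word with a word from the input paragraph."""
    words = slogan[:]
    words[rng.randrange(len(words))] = rng.choice(vocab)
    return words


def fitness(slogan: List[str], vocab_set: Set[str]) -> float:
    """Stand-in score: share of slogan words found in the paragraph."""
    return sum(word in vocab_set for word in slogan) / len(slogan)


def evolve(corpus: List[str], paragraph: str, generations: int = 50,
           pop_size: int = 10, seed: int = 0) -> str:
    """Evolve a slogan from corpus samples toward the paragraph vocabulary."""
    rng = random.Random(seed)
    vocab = paragraph.lower().split()
    vocab_set = set(vocab)
    # Initial population: slogans sampled at random from the corpus.
    population = [rng.choice(corpus).lower().split() for _ in range(pop_size)]
    for _ in range(generations):
        children = [mutate(individual, vocab, rng) for individual in population]
        # Keep the best of parents and children (simple elitist selection).
        pool = population + children
        pool.sort(key=lambda s: fitness(s, vocab_set), reverse=True)
        population = pool[:pop_size]
    return " ".join(population[0])
```

A call such as `evolve(["just do it", "think different"], "fresh coffee roasted daily")` returns a slogan of the same length as one of the corpus entries. With this stand-in fitness the result tends to drift toward words copied from the paragraph, which is one reason a real system would need grammar and semantic scoring.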
