A. LaViers

An Embodied, Platform-invariant Architecture for Connecting High-level Spatial Commands to Platform Articulation

Mar 31, 2019

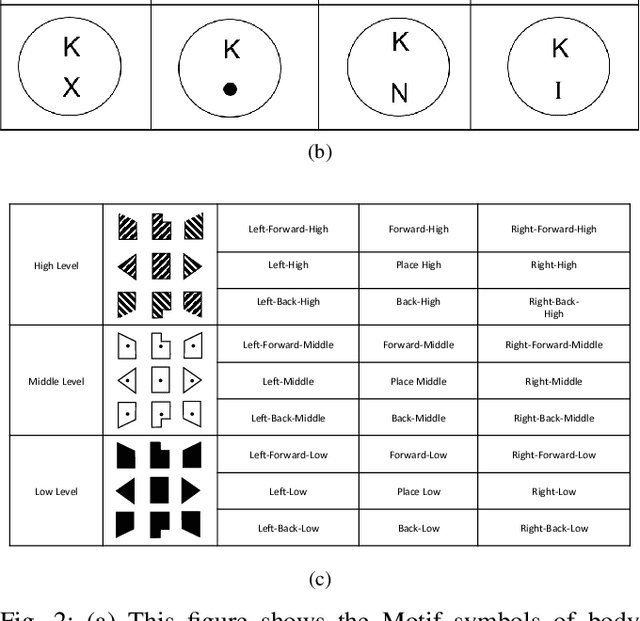

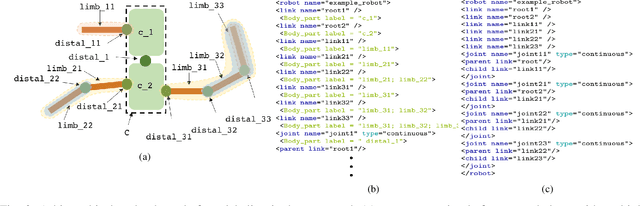

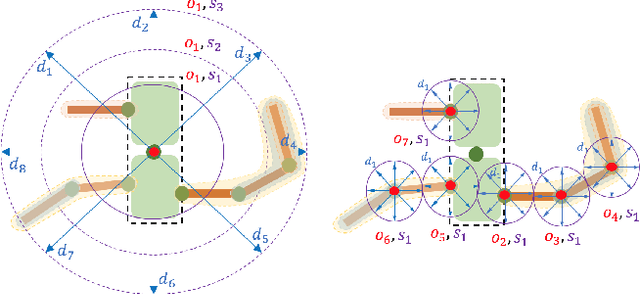

Abstract: In contexts such as teleoperation, robot reprogramming, human-robot interaction, and neural prosthetics, conveying movement commands to a robotic platform is often a limiting factor. Currently, many applications rely on joint-angle-by-joint-angle prescriptions. This inherently requires the user to specify a number of parameters that scales with the platform's degrees of freedom, creating high bandwidth requirements for interfaces. This paper presents an efficient representation of high-level spatial commands that specifies many joint angles with relatively few parameters, based on a spatial architecture that is judged favorably by human viewers. In particular, it presents a general method for labeling connected platform linkages, generating a databank of user-specified poses, and mapping between high-level spatial commands and specific static platform configurations. Thus, this architecture is "platform-invariant": the same high-level spatial command can be executed on any platform. This has the advantage that our commands have meaning for human movers as well. To achieve this, we draw inspiration from Laban/Bartenieff Movement Studies, an embodied taxonomy for movement description. The architecture is demonstrated through an implementation of 26 spatial directions on a Rethink Robotics Baxter, an Aldebaran NAO, and a KUKA youBot. User studies are conducted to validate the claims of the proposed framework.
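The mapping described in this abstract can be pictured with a minimal sketch. It is not the paper's implementation. It assumes that the 26 spatial directions are the nonzero vectors with components in {-1, 0, 1}, and that the databank is a plain lookup from a (platform, direction) pair to user-specified joint angles. The class `PoseDatabank`, the platform names, the joint names, and all angle values are hypothetical.

```python
from itertools import product

# Assumption: the 26 directions are all nonzero vectors in {-1, 0, 1}^3
# (27 grid points minus the center).
DIRECTIONS = [d for d in product((-1, 0, 1), repeat=3) if any(d)]


class PoseDatabank:
    """Hypothetical store of user-specified static poses per platform."""

    def __init__(self):
        self._poses = {}

    def record(self, platform, direction, joint_angles):
        # Only the 26 labeled directions are valid high-level commands.
        if direction not in DIRECTIONS:
            raise ValueError(f"unknown direction: {direction}")
        self._poses[(platform, direction)] = dict(joint_angles)

    def lookup(self, platform, direction):
        # One three-component command resolves to a full joint configuration.
        return self._poses[(platform, direction)]


bank = PoseDatabank()
forward_high = (1, 0, 1)  # illustrative axis convention, not from the paper

# Two made-up platforms with different numbers of joints (angles in radians).
bank.record("two_joint_arm", forward_high, {"shoulder": 0.8, "elbow": 0.1})
bank.record(
    "six_joint_arm",
    forward_high,
    {"j1": 0.0, "j2": 0.7, "j3": 0.2, "j4": 0.0, "j5": 0.1, "j6": 0.0},
)

for platform in ("two_joint_arm", "six_joint_arm"):
    print(platform, bank.lookup(platform, forward_high))
```

The same three-component command selects a pose on both platforms, while the number of joint angles it resolves to depends on the platform. This is the sense in which few parameters can specify many joint angles.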

An Information Theoretic Measure for Robot Expressivity

Jul 17, 2017

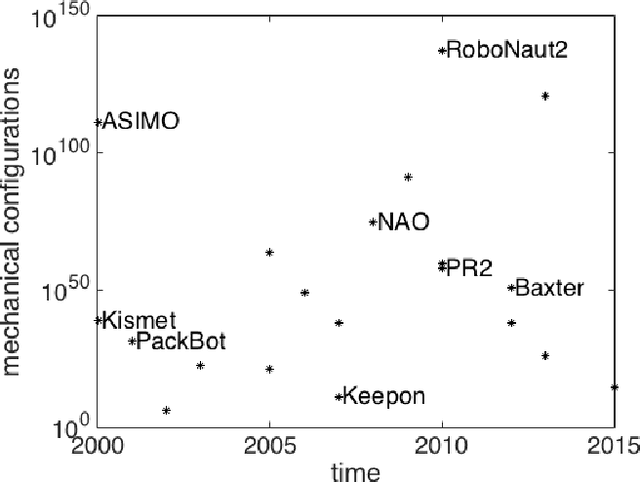

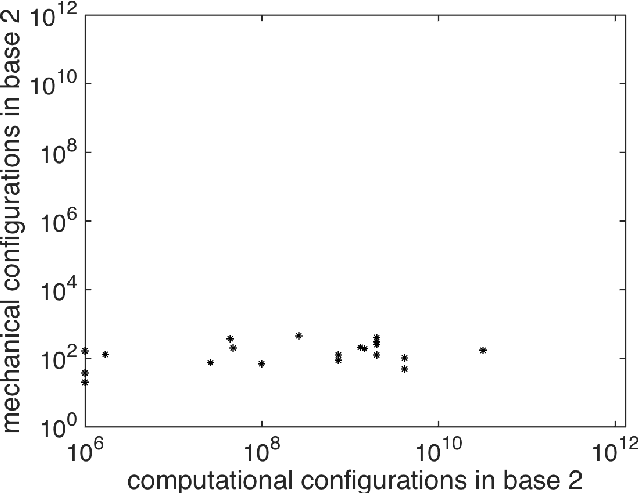

Abstract: This paper presents a principled way to think about articulated movement for artificial agents and a measurement of platforms that produce such movement. In particular, in human-facing scenarios, the shape evolution of robotic platforms will become essential in creating systems that integrate and communicate with human counterparts. This paper provides a tool to measure the expressive capacity, or expressivity, of articulated platforms. To do this, it points to the synergistic relationship between computation and mechanization. Importantly, this way of thinking gives an information-theoretic basis for measuring and comparing robots of increasing complexity and capability. The paper provides concrete examples of this measure applied to current robotic platforms. It also compares the computational and mechanical capabilities of robotic platforms and analyzes order-of-magnitude trends over the last 15 years. For future work, the paper provides a method for quantifying movement imitation, outlines a way of thinking about designing expressive robotic systems, and contextualizes the capabilities of current robotic systems.
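The abstract does not state the measure's formula, so the following is only an illustrative sketch of what an information-theoretic count of poses could look like, not the paper's definition. It assumes each joint can resolve a fixed number of distinguishable positions and that joints are independent, so the number of static poses is the product of the per-joint counts and its base-2 logarithm gives a size in bits. The function name `pose_bits` and the example joint resolutions are hypothetical.

```python
import math


def pose_bits(states_per_joint):
    """Bits needed to index every static pose, assuming independent joints.

    log2(n_1 * n_2 * ... * n_k) is computed as a sum of logs so that
    platforms with many joints do not produce very large intermediate products.
    """
    if any(n < 1 for n in states_per_joint):
        raise ValueError("each joint needs at least one distinguishable state")
    return sum(math.log2(n) for n in states_per_joint)


# Two made-up platforms: a coarse 2-joint arm and a finer 6-joint arm.
coarse_arm = [4, 4]
fine_arm = [256] * 6

print(pose_bits(coarse_arm))
print(pose_bits(fine_arm))
```

Under these assumptions, adding joints or increasing per-joint resolution both raise the count, which gives one concrete way to compare platforms of different complexity on a single scale.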
