An Embodied, Platform-invariant Architecture for Connecting High-level Spatial Commands to Platform Articulation


Mar 31, 2019

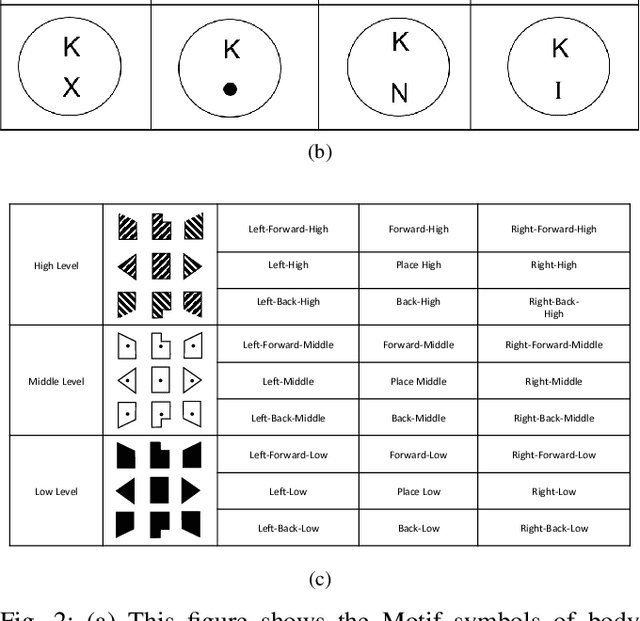

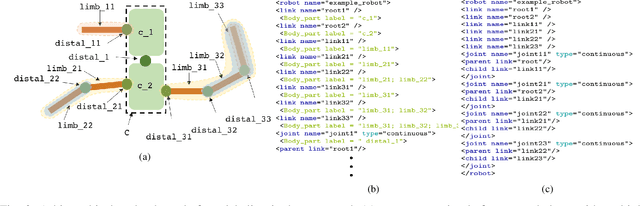

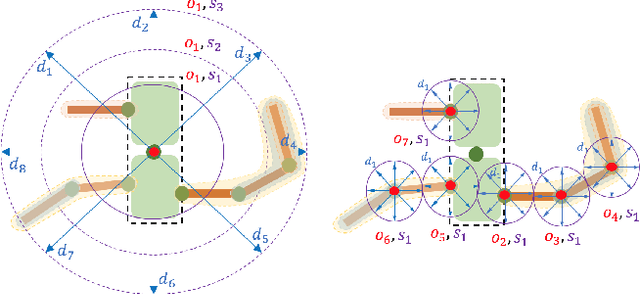

In contexts such as teleoperation, robot reprogramming, human-robot interaction, and neural prosthetics, conveying movement commands to a robotic platform is often a limiting factor. Currently, many applications rely on joint-angle-by-joint-angle prescriptions. This inherently requires the user to specify a number of parameters that scales with the number of degrees of freedom of the platform, which creates high bandwidth requirements for interfaces. This paper presents an efficient representation of high-level spatial commands that specifies many joint angles with relatively few parameters, based on a spatial architecture that human viewers judge favorably. In particular, we present a general method for labeling connected platform linkages, generating a databank of user-specified poses, and mapping between high-level spatial commands and specific static platform configurations. This architecture is therefore "platform-invariant": the same high-level spatial command can be executed on any platform. An added advantage is that the commands are meaningful to human movers as well. To achieve this, we draw inspiration from Laban/Bartenieff Movement Studies, an embodied taxonomy for movement description. The architecture is demonstrated through an implementation of 26 spatial directions on a Rethink Robotics Baxter, an Aldebaran NAO, and a KUKA youBot. User studies are conducted to validate the claims of the proposed framework.
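To make the idea of a platform-invariant command concrete, the following is a minimal sketch, not the paper's implementation. It assumes that the 26 spatial directions can be encoded as the nonzero vectors in {-1, 0, 1}^3, which matches the Laban kinesphere's 27 points minus the center. It also assumes that a continuous command is snapped to the nearest direction by angle and then looked up in a per-platform databank of user-recorded poses. The `PoseDatabank` class, `nearest_direction`, the axis order, and the joint names used below are all hypothetical.

```python
from itertools import product
import math

# Assumed encoding: each axis component is -1, 0, or +1 (axis order and sign
# convention are illustrative assumptions); the all-zero "center" is excluded,
# leaving 3**3 - 1 = 26 directions.
DIRECTIONS = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]


def nearest_direction(vec):
    """Snap a continuous 3D command vector to the closest of the 26 directions by angle."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0:
        raise ValueError("command vector must be nonzero")

    def cosine(d):
        d_norm = math.sqrt(sum(c * c for c in d))
        return sum(v * c for v, c in zip(vec, d)) / (norm * d_norm)

    return max(DIRECTIONS, key=cosine)


class PoseDatabank:
    """Hypothetical store of user-specified poses keyed by (platform, direction)."""

    def __init__(self):
        self._poses = {}  # (platform, direction) -> {joint_name: angle_rad}

    def record(self, platform, direction, joint_angles):
        """Store a user-specified static configuration for one direction on one platform."""
        if direction not in DIRECTIONS:
            raise KeyError(f"unknown direction {direction}")
        self._poses[(platform, direction)] = dict(joint_angles)

    def execute(self, platform, command_vec):
        """Map a platform-invariant spatial command to this platform's stored pose."""
        direction = nearest_direction(command_vec)
        return direction, self._poses[(platform, direction)]
```

For example, after `bank.record("nao", (0, 0, 1), {"j1": 0.5})` with a placeholder joint name, calling `bank.execute("nao", (0.05, -0.02, 2.0))` snaps the command to `(0, 0, 1)` and returns the stored pose. In this design, the same three-component command could be sent to any platform for which poses were recorded, and only the databank differs per platform.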
