A. K. Malik

Intuitionistic Fuzzy Broad Learning System: Enhancing Robustness Against Noise and Outliers

Jul 15, 2023

Abstract: In the realm of data classification, the broad learning system (BLS) has proven to be a potent tool that utilizes a layer-by-layer feed-forward neural network. It consists of feature learning and enhancement segments, which work together to extract intricate features from input data. The traditional BLS treats all samples as equally significant, which makes it less robust and less effective on real-world datasets containing noise and outliers. To address this issue, we propose the fuzzy BLS (F-BLS) model, which assigns a fuzzy membership value to each training point to reduce the influence of noise and outliers. In assigning the membership value, the F-BLS model considers only the distance from each sample to its class center in the original feature space, without incorporating the extent of non-belongingness to a class. We therefore further propose a novel BLS based on intuitionistic fuzzy theory (IF-BLS). The proposed IF-BLS uses intuitionistic fuzzy numbers, built from fuzzy membership and non-membership values, to assign scores to training points in the high-dimensional feature space by means of a kernel function. We evaluate the performance of the proposed F-BLS and IF-BLS models on 44 UCI benchmark datasets across diverse domains. Furthermore, Gaussian noise is added to some UCI datasets to assess the robustness of the proposed models. Experimental results demonstrate superior generalization performance of the proposed F-BLS and IF-BLS models compared to baseline models, both with and without Gaussian noise. Additionally, we apply the proposed F-BLS and IF-BLS models to the Alzheimer's Disease Neuroimaging Initiative (ADNI) dataset, and promising results showcase the models' effectiveness in real-world applications. The proposed methods offer a promising solution for enhancing the BLS framework's ability to handle noise and outliers.
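The distance-to-class-center idea behind the F-BLS membership value can be illustrated with a minimal sketch. The abstract does not give the exact formula, so the linear rule below (one minus the distance divided by the class radius) is an assumption borrowed from common fuzzy-classifier practice; the function name `fuzzy_membership` and the parameter `delta` are hypothetical and not taken from the paper.

```python
import numpy as np

def fuzzy_membership(X, y, delta=1e-6):
    """Assign each sample a membership in (0, 1] based on its distance
    to the center of its own class (assumed rule, not the paper's)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    membership = np.empty(len(X))
    for label in np.unique(y):
        mask = y == label
        center = X[mask].mean(axis=0)                  # class center
        dist = np.linalg.norm(X[mask] - center, axis=1)
        radius = dist.max()                            # farthest sample in the class
        # delta keeps the membership strictly positive
        membership[mask] = 1.0 - dist / (radius + delta)
    return membership

# Toy data: the third sample of class 0 lies far from the other two.
X = np.array([[0.0, 0.0], [0.2, 0.0], [4.0, 0.0], [5.0, 5.0], [5.2, 5.0]])
y = np.array([0, 0, 0, 1, 1])
m = fuzzy_membership(X, y)
```

Under this assumed rule the sample farthest from its class center receives the smallest membership in its class, so it contributes least when the memberships are used as per-sample weights in training. The non-membership term used by IF-BLS is not covered here.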

Graph Embedded Intuitionistic Fuzzy RVFL for Class Imbalance Learning

Jul 15, 2023

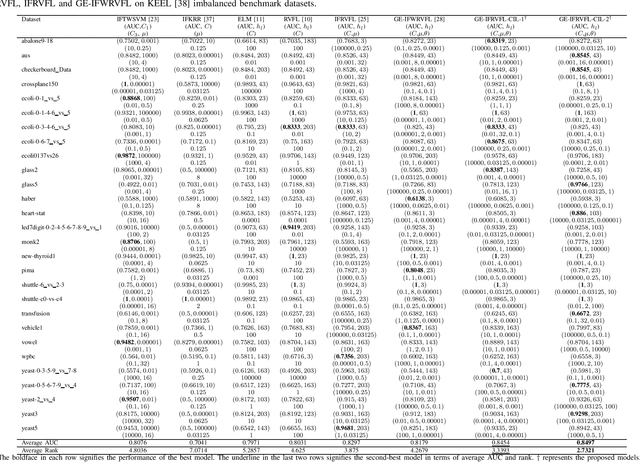

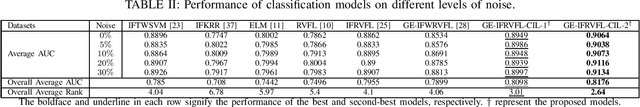

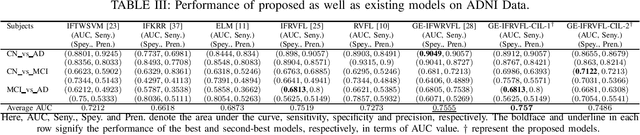

Abstract: Class imbalance learning is a crucial research area in machine learning that presents considerable hurdles to the precise classification of minority classes. Imbalance can result in biased models in which the majority class dominates the training process, leaving the minority class underrepresented. The random vector functional link (RVFL) network is a widely used and effective learning model for classification due to its speed and efficiency. However, it suffers from low accuracy when dealing with imbalanced datasets. To overcome this limitation, we propose a novel graph embedded intuitionistic fuzzy RVFL for class imbalance learning (GE-IFRVFL-CIL) model, which incorporates a weighting mechanism to handle imbalanced datasets. The proposed GE-IFRVFL-CIL model offers several benefits: (i) it leverages graph embedding to extract semantically rich information from the dataset, (ii) it uses intuitionistic fuzzy sets to handle uncertainty and imprecision in the data, and (iii) most importantly, it tackles class imbalance learning. The combination of a weighting scheme, graph embedding, and intuitionistic fuzzy sets leads to the superior performance of the proposed model on various benchmark imbalanced datasets, including UCI and KEEL. Furthermore, we apply the proposed GE-IFRVFL-CIL to the ADNI dataset and achieve promising results, demonstrating the model's effectiveness in real-world applications. The proposed method provides a promising solution for handling class imbalance in machine learning and has the potential to be applied to other classification problems.
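To make the weighting mechanism concrete, the following is a rough sketch of how per-sample class weights can enter the closed-form, ridge-regularized solution of a generic RVFL. It is not the GE-IFRVFL-CIL model: the graph embedding and intuitionistic fuzzy scores are omitted, and the weighting rule (smallest class count divided by each class count), the function names, and the parameters `n_hidden` and `C` are all assumptions made for illustration. Labels are assumed to be in {-1, +1}.

```python
import numpy as np

def class_weights(y):
    """Per-sample weights: the smallest class gets 1, larger classes get
    (smallest class count / own class count). Assumed rule."""
    labels, counts = np.unique(y, return_counts=True)
    lookup = dict(zip(labels, counts.min() / counts))
    return np.array([lookup[v] for v in y])

def hidden_features(X, W, b):
    # RVFL design matrix: direct links (X) concatenated with random hidden features
    return np.hstack([X, np.tanh(X @ W + b)])

def fit_weighted_rvfl(X, y, n_hidden=20, C=1.0, seed=0):
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1, 1, size=(X.shape[1], n_hidden))  # random, never trained
    b = rng.uniform(-1, 1, size=n_hidden)
    H = hidden_features(X, W, b)
    s = class_weights(y)
    HtS = H.T * s                                        # H^T diag(s)
    # Weighted ridge solution: beta = (H^T S H + I/C)^{-1} H^T S y
    beta = np.linalg.solve(HtS @ H + np.eye(H.shape[1]) / C, HtS @ y)
    return W, b, beta

def predict(X, W, b, beta):
    return np.sign(hidden_features(X, W, b) @ beta)

# Toy imbalanced problem: four majority (+1) and two minority (-1) samples.
y_demo = np.array([1.0, 1.0, 1.0, 1.0, -1.0, -1.0])
X_demo = np.random.default_rng(1).normal(size=(6, 3))
W_demo, b_demo, beta_demo = fit_weighted_rvfl(X_demo, y_demo, n_hidden=5)
pred_demo = predict(X_demo, W_demo, b_demo, beta_demo)
```

Down-weighting the majority class in the loss means that errors on minority samples cost relatively more, which is the general motivation for a weighting scheme in imbalanced classification.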
