A. K. M. Najmul Islam

Notion of Explainable Artificial Intelligence -- An Empirical Investigation from A Users Perspective

Nov 01, 2023

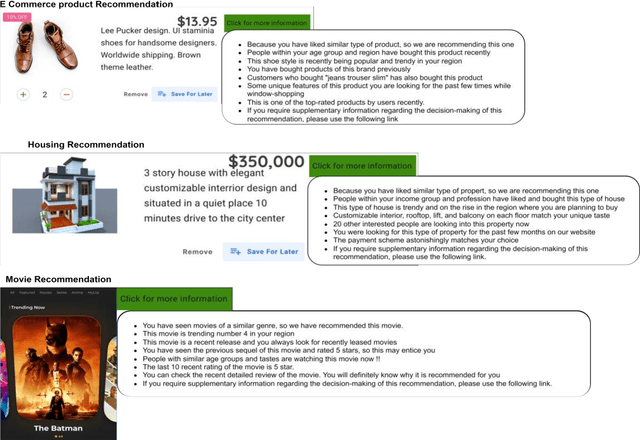

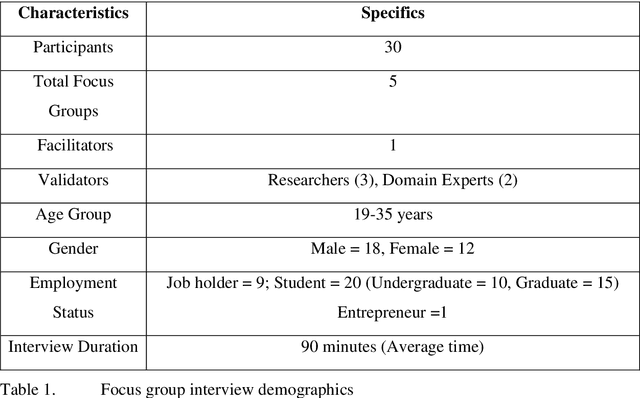

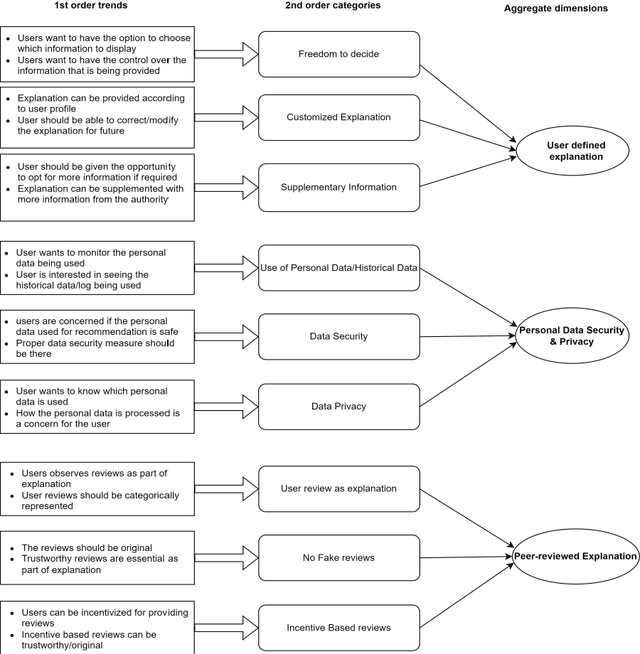

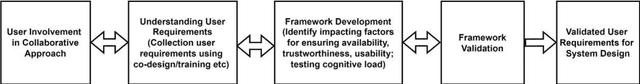

Abstract: The growing attention to artificial intelligence-based applications has led to research interest in explainability issues. This emerging research attention on explainable AI (XAI) advocates the need to investigate end user-centric explainable AI. Thus, this study investigates user-centric explainable AI, taking recommendation systems as the study context. We conducted focus group interviews to collect qualitative data on recommendation systems. We asked participants about end users' comprehension of a recommended item, its probable explanation, and their opinion on making a recommendation explainable. Our findings reveal that end users want a non-technical and tailor-made explanation with on-demand supplementary information. Moreover, we observed that users require an explanation of personal data usage, detailed user feedback, and authentic and reliable explanations. Finally, we propose a synthesized framework that aims to involve the end user in the development process for requirements collection and validation.

Explainable Artificial Intelligence (XAI) from a user perspective- A synthesis of prior literature and problematizing avenues for future research

Nov 24, 2022

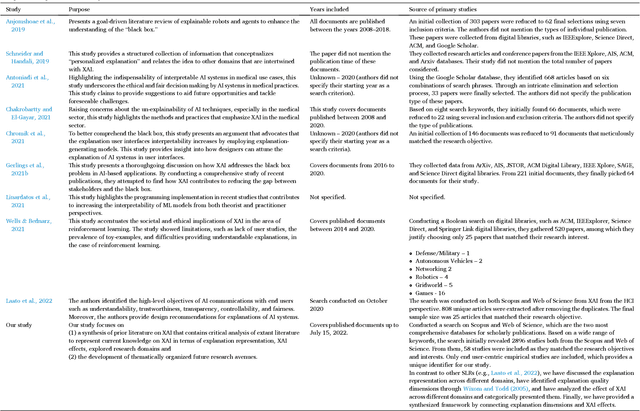

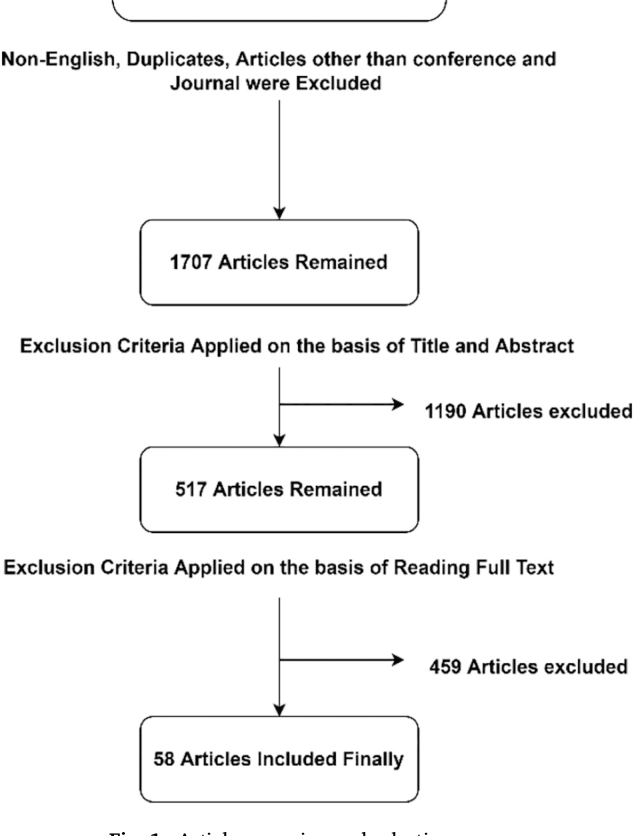

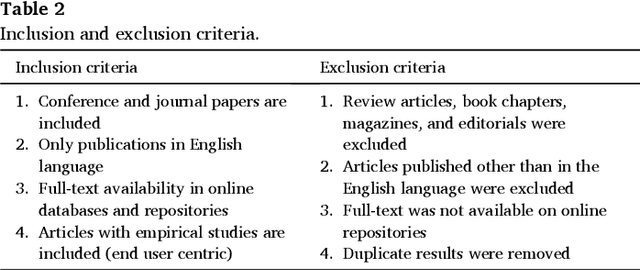

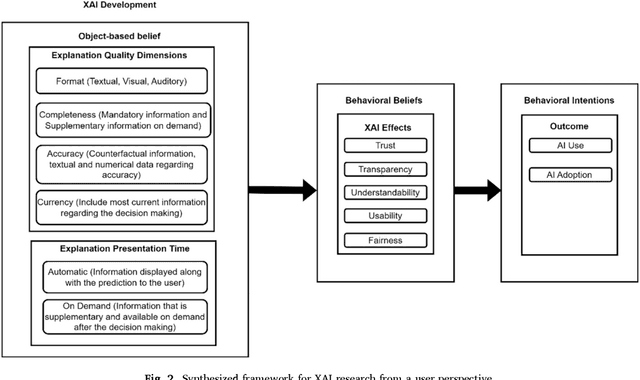

Abstract: The final search query for the Systematic Literature Review (SLR) was conducted on 15th July 2022. Initially, we extracted 1707 journal and conference articles from the Scopus and Web of Science databases. Inclusion and exclusion criteria were then applied, and 58 articles were selected for the SLR. The findings show four dimensions that shape the AI explanation: format (explanation representation format), completeness (the explanation should contain all required information, including supplementary information), accuracy (information regarding the accuracy of the explanation), and currency (the explanation should contain recent information). Moreover, along with the automatic representation of the explanation, users can request additional information if needed. We also found five dimensions of XAI effects: trust, transparency, understandability, usability, and fairness. In addition, we investigated current knowledge from the selected articles to problematize future research agendas as research questions along with possible research paths. Consequently, a comprehensive framework of XAI and its possible effects on user behavior has been developed.

A Survey on the Use of AI and ML for Fighting the COVID-19 Pandemic

Aug 03, 2020

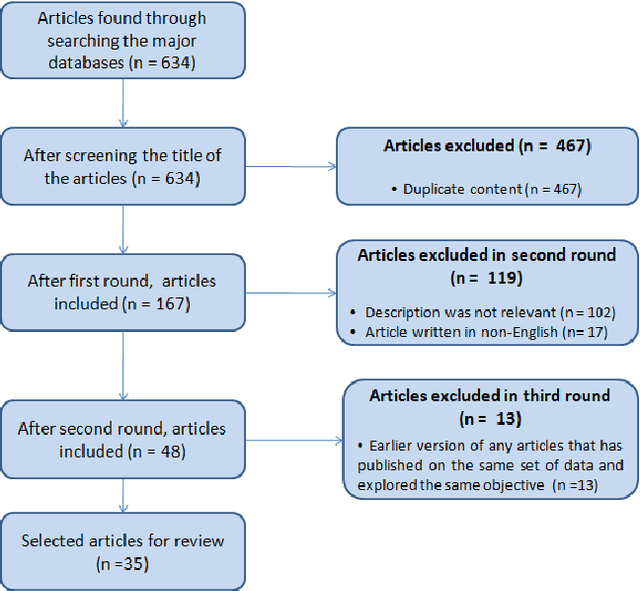

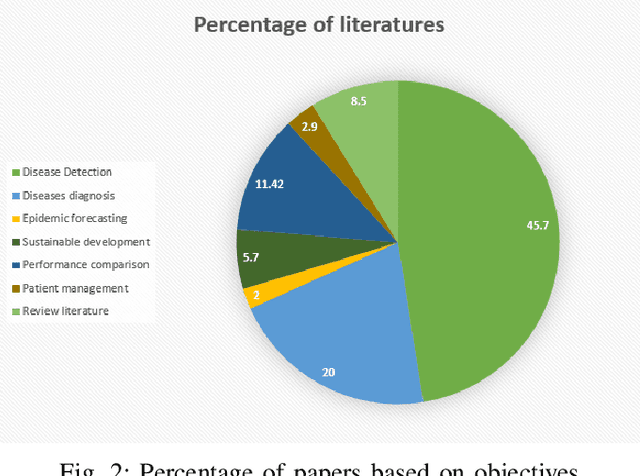

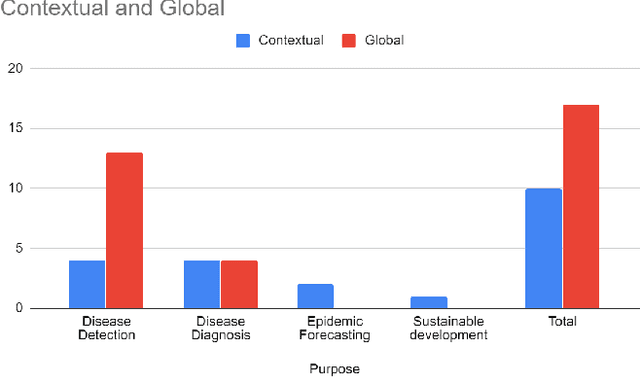

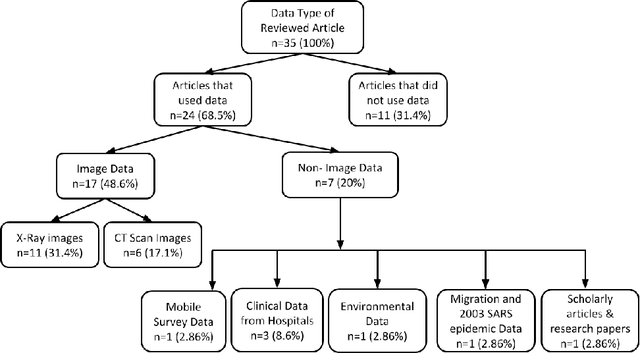

Abstract: Artificial intelligence (AI) and machine learning (ML) have brought a paradigm shift in health care and can be used for decision support and forecasting by exploring medical data. Recent studies showed that AI and ML can be used to fight the COVID-19 pandemic. Therefore, the objective of this review study is to summarize the recent AI- and ML-based studies that have focused on fighting the COVID-19 pandemic. From an initial set of 634 articles, a total of 35 articles were finally selected through an extensive inclusion-exclusion process. In our review, we explored the objectives/aims of the existing studies (i.e., the role of AI/ML in fighting the COVID-19 pandemic); the context of the study (i.e., whether the study focused on a specific country context or took a global perspective); the type and volume of the dataset; the methodology, algorithms, or techniques adopted in the prediction or diagnosis processes; and the mapping of the algorithms/techniques to the data type, highlighting their prediction/classification accuracy. We particularly focused on the uses of AI/ML in analyzing the pandemic data in order to depict the most recent progress of AI in fighting COVID-19, and we pointed out the potential scope for further research.
