Óscar Corcho

Vehicle Fuel Optimization Under Real-World Driving Conditions: An Explainable Artificial Intelligence Approach

Jul 22, 2021

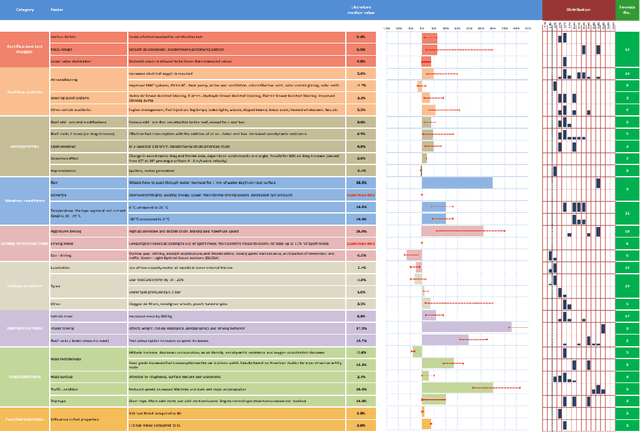

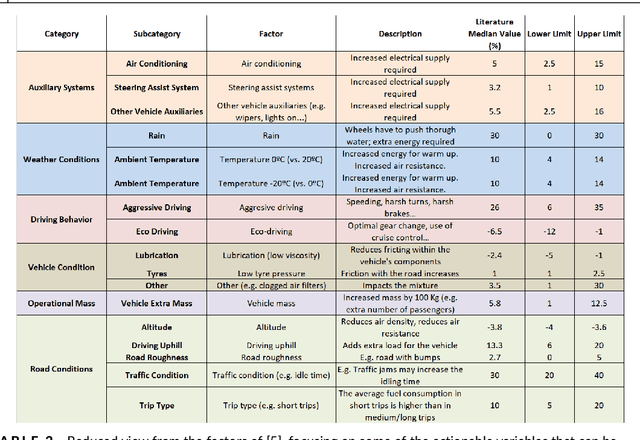

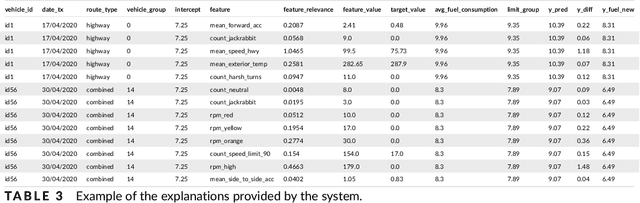

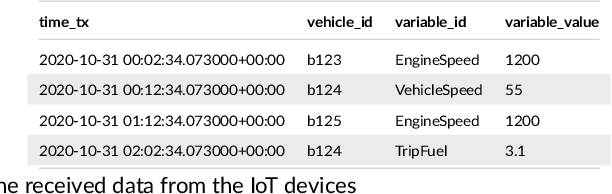

Abstract: Fuel optimization of diesel and petrol vehicles within industrial fleets is critical for reducing both costs and emissions. This objective can be achieved by acting on fuel-related factors such as driving behaviour. In this study, we developed an Explainable Boosting Machine (EBM) model to predict the fuel consumption of different types of industrial vehicles, using real-world data collected from 2020 to 2021. This machine learning model also explains the relationship between the input factors and fuel consumption, quantifying the individual contribution of each factor. The explanations provided by the model are compared with domain knowledge to check whether they are aligned. The results show that 70% of the categories associated with the fuel factors are consistent with previous literature. Using the EBM algorithm, we estimate that optimizing driving behaviour reduces fuel consumption by 12% to 15% in a large fleet (more than 1,000 vehicles).
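An EBM is an additive model: each input factor gets its own shape function, and the prediction is an intercept plus the sum of those functions, which is what lets the model quantify each factor's individual contribution. The following is a minimal NumPy sketch of that core idea, assuming squared-error loss and quantile binning. It fits the shape functions by cycling boosting rounds over one feature at a time. It is not the authors' model and not InterpretML's implementation: it omits bagging, pairwise interaction terms and tree-based splits, and all function names and the toy data are hypothetical. For real use, InterpretML provides `interpret.glassbox.ExplainableBoostingRegressor`.

```python
import numpy as np

def fit_cyclic_additive_model(X, y, n_bins=8, n_rounds=200, learning_rate=0.1):
    """Fit y ~ intercept + sum_j f_j(x_j) by cyclic boosting, one feature at a time.

    Each shape function f_j is piecewise constant over quantile bins of feature j.
    Squared-error loss is assumed, so each boosting step fits the current residuals.
    """
    n, d = X.shape
    # Interior quantile edges for each feature (duplicate edges removed).
    qs = np.linspace(0, 1, n_bins + 1)[1:-1]
    edges = [np.unique(np.quantile(X[:, j], qs)) for j in range(d)]
    bins = [np.searchsorted(edges[j], X[:, j], side="right") for j in range(d)]
    shapes = [np.zeros(len(e) + 1) for e in edges]

    intercept = float(np.mean(y))
    pred = np.full(n, intercept)
    for _ in range(n_rounds):
        for j in range(d):  # cycle through the features in a fixed order
            residual = y - pred
            counts = np.bincount(bins[j], minlength=len(shapes[j]))
            sums = np.bincount(bins[j], weights=residual, minlength=len(shapes[j]))
            # Per-bin mean residual = least-squares piecewise-constant fit on feature j.
            step = learning_rate * sums / np.maximum(counts, 1)
            shapes[j] += step
            pred += step[bins[j]]

    # Center each shape function on the training data; move the offset into the intercept.
    for j in range(d):
        offset = float(np.mean(shapes[j][bins[j]]))
        shapes[j] -= offset
        intercept += offset
    return intercept, edges, shapes

def contributions(model, x):
    """Additive contribution of each feature to the prediction for one sample x."""
    _, edges, shapes = model
    return np.array([shapes[j][np.searchsorted(edges[j], x[j], side="right")]
                     for j in range(len(shapes))])

def predict(model, X):
    """Prediction = intercept + sum of per-feature contributions."""
    return np.array([model[0] + contributions(model, x).sum() for x in X])

# Toy data (hypothetical): only feature 0 influences the target.
rng = np.random.default_rng(0)
X = rng.uniform(0, 1, size=(2000, 2))
y = 3.0 * X[:, 0]
model = fit_cyclic_additive_model(X, y)
print(contributions(model, X[0]))
```

On this toy data, the shape function for feature 0 spans a wide range while the one for feature 1 stays near zero, which is how an additive model exposes each factor's individual effect.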

Rule Extraction in Unsupervised Anomaly Detection for Model Explainability: Application to OneClass SVM

Nov 21, 2019

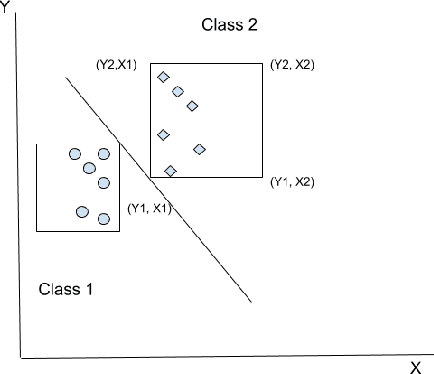

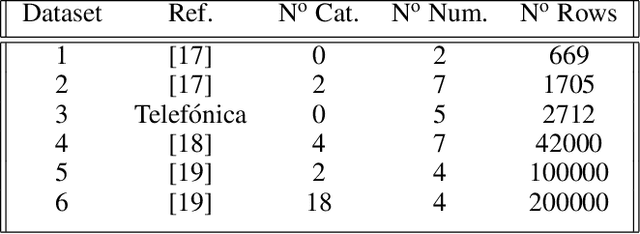

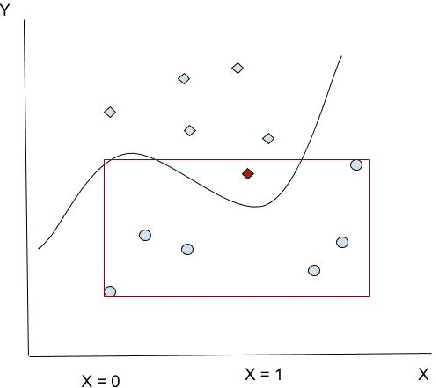

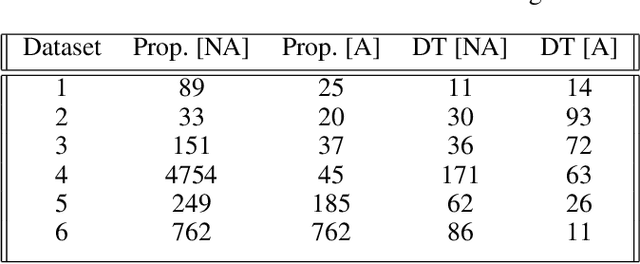

Abstract: OneClass SVM is a popular method for unsupervised anomaly detection. Like many other methods, it suffers from the *black box* problem: it is difficult to justify, in a simple and intuitive manner, why the decision frontier identifies data points as anomalous or non-anomalous. This type of problem is being widely addressed for supervised models; however, it remains largely uncharted territory for unsupervised learning. In this paper, we describe a method to infer rules that justify why a point is labelled as an anomaly, so as to obtain intuitive explanations for models created with the OneClass SVM algorithm. We evaluate our proposal on different datasets, including real-world data from industry. In doing so, our proposal helps extend Explainable AI techniques to unsupervised machine learning models.
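To illustrate what a rule-based explanation of an anomaly detector can look like, here is a deliberately simplified NumPy sketch. It is not the paper's rule-extraction method. It fits a single axis-aligned box (hypercube) around the points the detector labels as inliers (+1), explains an anomaly by listing the box conditions it violates, and measures fidelity, meaning how often the rule agrees with the detector. The function names and toy data are hypothetical. The labels come from a distance threshold that stands in for OneClass SVM output; with scikit-learn they could instead come from `OneClassSVM(nu=0.05).fit(X).predict(X)`.

```python
import numpy as np

def extract_box_rule(X, labels):
    """Per-feature [low, high] bounds enclosing every point labelled +1 (inlier)."""
    inliers = X[labels == 1]
    return inliers.min(axis=0), inliers.max(axis=0)

def explain(x, low, high, feature_names):
    """Return the rule conditions x violates; an empty list means the rule calls x non-anomalous."""
    reasons = []
    for j, name in enumerate(feature_names):
        if x[j] < low[j]:
            reasons.append(f"{name} = {x[j]:.2f} < {low[j]:.2f}")
        elif x[j] > high[j]:
            reasons.append(f"{name} = {x[j]:.2f} > {high[j]:.2f}")
    return reasons

def fidelity(X, labels, low, high):
    """Fraction of points where the box rule agrees with the detector's labels."""
    inside = np.all((X >= low) & (X <= high), axis=1)
    return float(np.mean(np.where(inside, 1, -1) == labels))

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
# Stand-in for detector output (+1 inlier, -1 outlier), not a real OneClass SVM.
labels = np.where(np.linalg.norm(X, axis=1) <= 2.5, 1, -1)
low, high = extract_box_rule(X, labels)
print(explain(np.array([10.0, 0.0]), low, high, ["x0", "x1"]))
print(fidelity(X, labels, low, high))
```

Because every inlier lies inside the box by construction, any disagreement comes from outliers that fall inside the box. This is why a single hypercube is too coarse for non-convex inlier regions and a finer set of rules would be needed.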
