Rule Extraction in Unsupervised Anomaly Detection for Model Explainability: Application to OneClass SVM


Nov 21, 2019

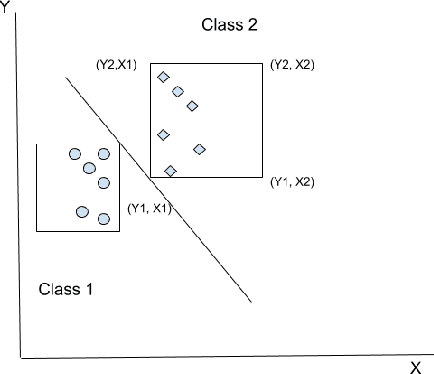

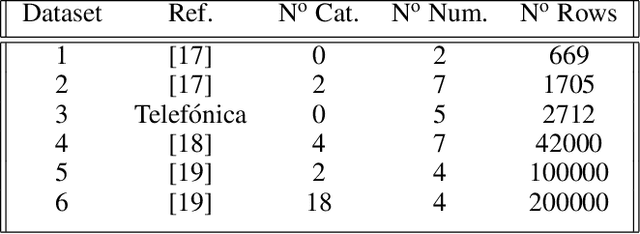

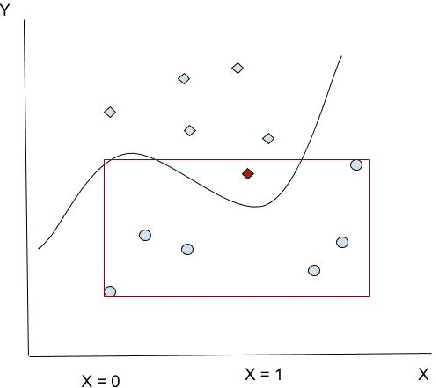

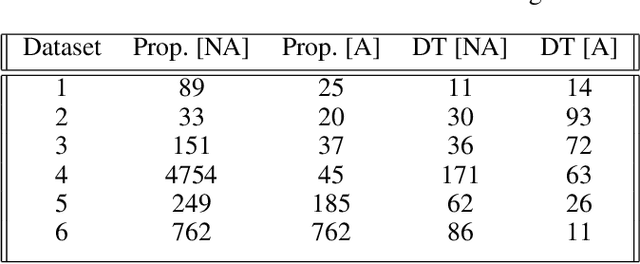

OneClass SVM is a popular method for unsupervised anomaly detection. Like many other methods, it suffers from the *black box* problem: it is difficult to justify, in an intuitive and simple manner, why the decision frontier identifies data points as anomalous or non-anomalous. This type of problem is being widely addressed for supervised models, but it remains largely uncharted for unsupervised learning. In this paper, we describe a method to infer rules that justify why a point is labelled as an anomaly, so as to obtain intuitive explanations for models created using the OneClass SVM algorithm. We evaluate our proposal on different datasets, including real-world data from industry. In this way, our proposal contributes to extending Explainable AI techniques to unsupervised machine learning models.
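To make the idea of a rule-based explanation concrete, here is a minimal sketch. It is not the algorithm from the paper, whose details the abstract does not give. It assumes the labels come from an already fitted detector that marks inliers as `+1` and anomalies as `-1` (the convention used by scikit-learn's `OneClassSVM.predict`), and it summarises the inliers with a single axis-aligned box. The helper names `extract_box_rule` and `explain`, the feature names, and the data are all hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# A rule maps each feature name to the (low, high) interval seen among inliers.
Rule = Dict[str, Tuple[float, float]]


def extract_box_rule(
    points: Sequence[Sequence[float]],
    labels: Sequence[int],
    names: Sequence[str],
) -> Rule:
    """Build one axis-aligned box around the points labelled +1 (inliers)."""
    inliers = [p for p, y in zip(points, labels) if y == 1]
    if not inliers:
        raise ValueError("at least one inlier is needed to build a rule")
    return {
        name: (min(p[i] for p in inliers), max(p[i] for p in inliers))
        for i, name in enumerate(names)
    }


def explain(point: Sequence[float], rule: Rule, names: Sequence[str]) -> List[str]:
    """List the feature bounds that the point violates (empty if inside the box)."""
    reasons = []
    for i, name in enumerate(names):
        low, high = rule[name]
        if point[i] < low:
            reasons.append(f"{name} < {low}")
        elif point[i] > high:
            reasons.append(f"{name} > {high}")
    return reasons


# Hypothetical data: labels are assumed to come from a fitted detector.
names = ["temperature", "pressure"]
points = [(20.0, 1.0), (22.0, 1.2), (21.0, 0.9), (35.0, 1.1)]
labels = [1, 1, 1, -1]

rule = extract_box_rule(points, labels, names)
reasons = explain(points[3], rule, names)
print(rule)
print(reasons)
```

A single box is a deliberate simplification: when the inlier region is not convex, the box can also cover points the detector flags as anomalous, so a practical rule set would likely need several boxes.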
