Éric Plourde

Single Channel Speech Enhancement Using U-Net Spiking Neural Networks

Jul 26, 2023

Abstract: Speech enhancement (SE) is crucial for reliable communication devices or robust speech recognition systems. Although conventional artificial neural networks (ANN) have demonstrated remarkable performance in SE, they require significant computational power and incur high energy costs. In this paper, we propose a novel approach to SE using a spiking neural network (SNN) based on a U-Net architecture. SNNs are suitable for processing data with a temporal dimension, such as speech, and are known for their energy-efficient implementation on neuromorphic hardware. As such, SNNs are interesting candidates for real-time applications on devices with limited resources. The primary objective of the current work is to develop an SNN-based model with comparable performance to a state-of-the-art ANN model for SE. We train a deep SNN using surrogate-gradient-based optimization and evaluate its performance using perceptual objective tests under different signal-to-noise ratios and real-world noise conditions. Our results demonstrate that the proposed energy-efficient SNN model outperforms the Intel Neuromorphic Deep Noise Suppression Challenge (Intel N-DNS Challenge) baseline solution and achieves acceptable performance compared to an equivalent ANN model.

Evaluation of Neuromorphic Spike Encoding of Sound Using Information Theory

Feb 19, 2022

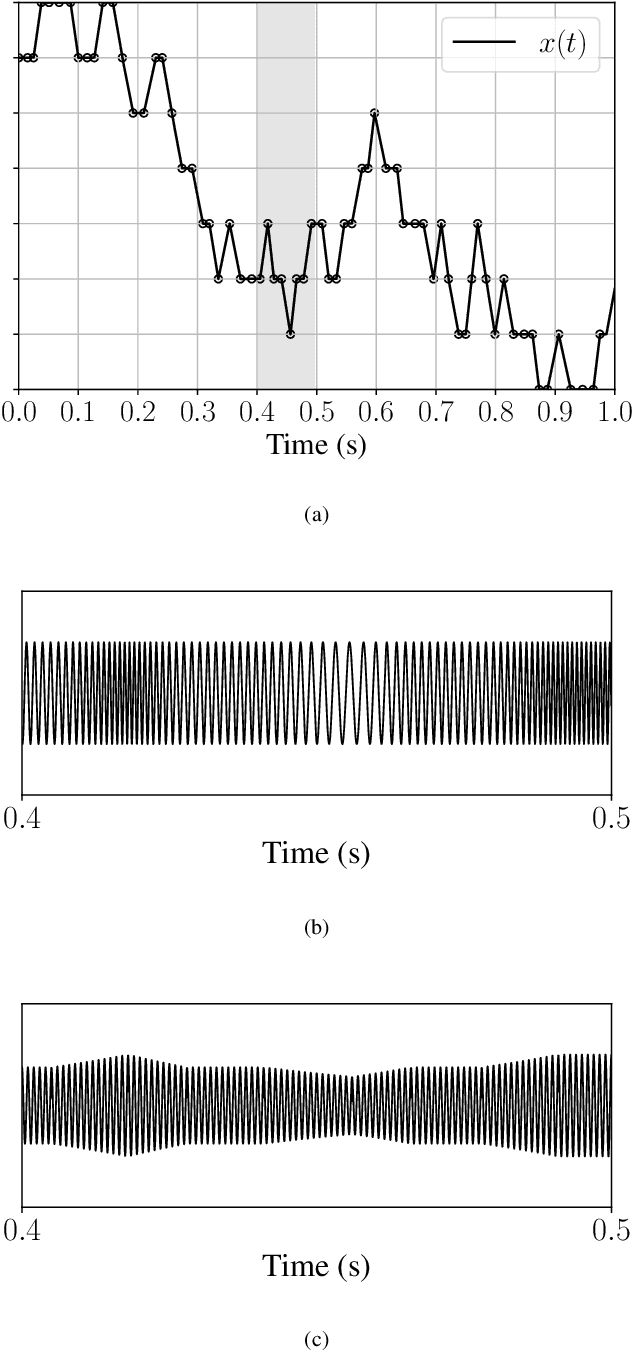

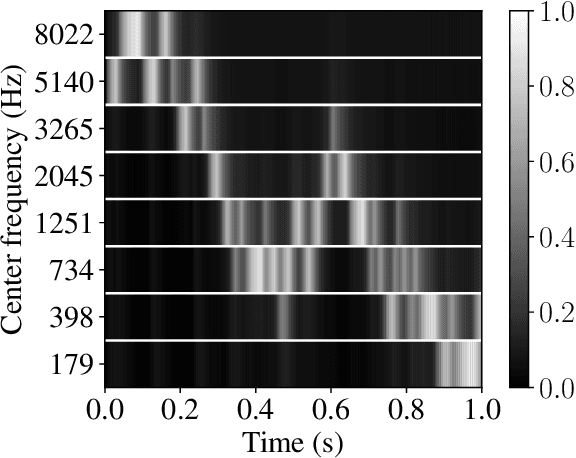

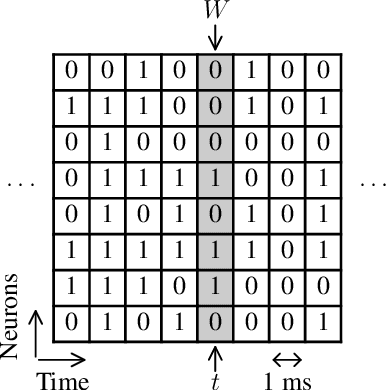

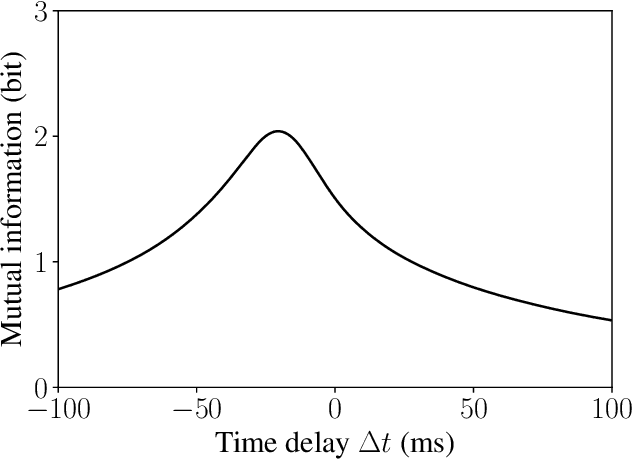

Abstract: The problem of spike encoding of sound consists in transforming a sound waveform into spikes. It is of interest in many domains, including the development of audio-based spiking neural networks, where it is the first and most crucial stage of processing. Many algorithms have been proposed to perform spike encoding of sound. However, a systematic approach to quantitatively evaluate their performance is currently lacking. We propose the use of an information-theoretic framework to solve this problem. Specifically, we evaluate the coding efficiency of four spike encoding algorithms on two coding tasks that consist of coding the fundamental characteristics of sound: frequency and amplitude. The algorithms investigated are: Independent Spike Coding, Send-on-Delta coding, Ben's Spiker Algorithm, and Leaky Integrate-and-Fire coding. Using the tools of information theory, we estimate the information that the spikes carry about relevant aspects of an input stimulus. We find disparities in the coding efficiencies of the algorithms, where Leaky Integrate-and-Fire coding performs best. The information-theoretic analysis of their performance on these coding tasks provides insight into the encoding of richer and more complex sound stimuli.
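
To make two of the encoders named in this abstract concrete, here is a minimal Python sketch of Send-on-Delta coding and Leaky Integrate-and-Fire coding in their textbook forms. It is not the paper's implementation: the function names `send_on_delta` and `lif_encode`, the parameters `delta`, `threshold`, `leak`, and `dt`, and the reset-to-zero rule are all assumptions chosen for illustration.

```python
def send_on_delta(signal, delta):
    """Emit (index, +1/-1) events each time the signal moves by at
    least `delta` away from the last transmitted reference level.
    Illustrative sketch; parameter names are assumptions."""
    events = []
    ref = signal[0]  # reference level starts at the first sample
    for i, x in enumerate(signal[1:], start=1):
        # Upward crossings: one ON event per delta step.
        while x - ref >= delta:
            ref += delta
            events.append((i, +1))
        # Downward crossings: one OFF event per delta step.
        while ref - x >= delta:
            ref -= delta
            events.append((i, -1))
    return events


def lif_encode(signal, threshold, leak, dt=1.0):
    """Leaky integrate-and-fire encoder: the membrane potential
    integrates the input, decays at rate `leak`, and is reset to
    zero after each spike (reset rule is an assumption)."""
    v = 0.0
    spikes = []
    for i, x in enumerate(signal):
        v += dt * (x - leak * v)  # forward-Euler update
        if v >= threshold:
            spikes.append(i)      # record the spike time index
            v = 0.0               # hard reset
    return spikes
```

For instance, `send_on_delta([0.0, 0.5, 1.0, 0.4], 0.5)` produces two ON events followed by one OFF event, while `lif_encode` on a constant input fires at a regular rate that grows with the input amplitude.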

Adaptive Approach For Sparse Representations Using The Locally Competitive Algorithm For Audio

Sep 29, 2021

Abstract: The gammachirp filterbank has been used to approximate the cochlea in sparse coding algorithms. An oriented grid search optimization was applied to adapt the gammachirp's parameters and improve the Matching Pursuit (MP) algorithm's sparsity along with the reconstruction quality. However, this combination of a greedy algorithm with a grid search at each iteration is computationally demanding and not suitable for real-time applications. This paper presents an adaptive approach that optimizes the gammachirp's parameters in the context of the Locally Competitive Algorithm (LCA), which requires far fewer computations than MP. The proposed method takes advantage of the LCA's neural architecture to automatically adapt the gammachirp filterbank using the backpropagation algorithm. Results demonstrate an improvement in the LCA's performance with our approach in terms of sparsity, reconstruction quality, and convergence time. This approach can yield a significant advantage over existing approaches for real-time applications.
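
As a rough illustration of the LCA dynamics this abstract builds on, the sketch below runs the standard soft-threshold LCA on a small fixed dictionary in plain Python. It does not include the gammachirp filterbank or the backpropagation-based parameter adaptation that the paper proposes; the function names `soft_threshold` and `lca` and the parameters `lam`, `tau`, and `steps` are assumptions made for this example.

```python
def soft_threshold(u, lam):
    """Soft-threshold activation: shrink toward zero by `lam`."""
    if u > lam:
        return u - lam
    if u < -lam:
        return u + lam
    return 0.0


def lca(signal, dictionary, lam=0.1, tau=10.0, steps=200):
    """Sparse-code `signal` over the rows (atoms) of `dictionary`.
    Illustrative sketch with a fixed dictionary; names are assumptions."""
    n_atoms = len(dictionary)
    # Feed-forward drive: projection of the signal onto each atom.
    b = [sum(d * s for d, s in zip(atom, signal)) for atom in dictionary]
    # Gram matrix: atom-to-atom correlations used for lateral inhibition.
    gram = [[sum(x * y for x, y in zip(ai, aj)) for aj in dictionary]
            for ai in dictionary]
    u = [0.0] * n_atoms  # membrane potentials
    for _ in range(steps):
        a = [soft_threshold(ui, lam) for ui in u]
        # Each active neuron inhibits the others in proportion to overlap.
        inhibition = [sum(gram[i][j] * a[j] for j in range(n_atoms) if j != i)
                      for i in range(n_atoms)]
        # Forward-Euler step of tau * du/dt = b - u - inhibition.
        u = [ui + (b[i] - ui - inhibition[i]) / tau for i, ui in enumerate(u)]
    return [soft_threshold(ui, lam) for ui in u]
```

With an orthonormal dictionary there is no inhibition, so each coefficient settles at the soft-thresholded projection of the signal; with overlapping atoms, the inhibition term is what lets strongly driven neurons suppress weaker competitors and yields a sparse code.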
