Zero Shot Learning on Simulated Robots


Oct 04, 2019

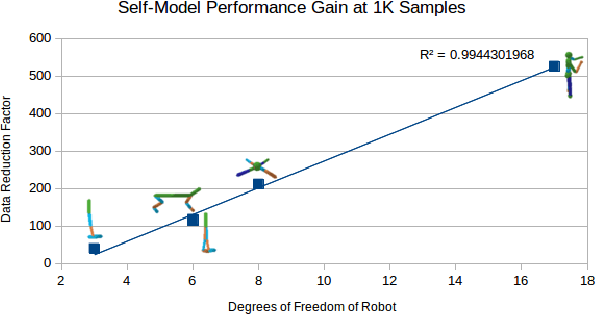

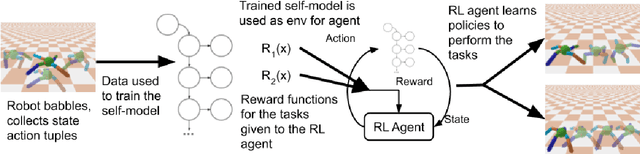

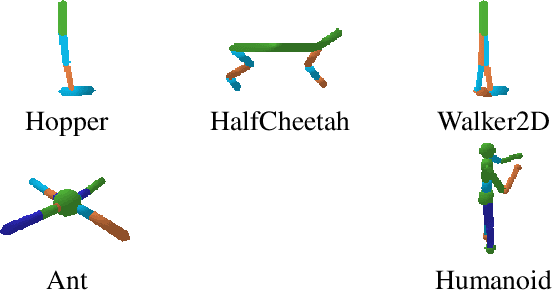

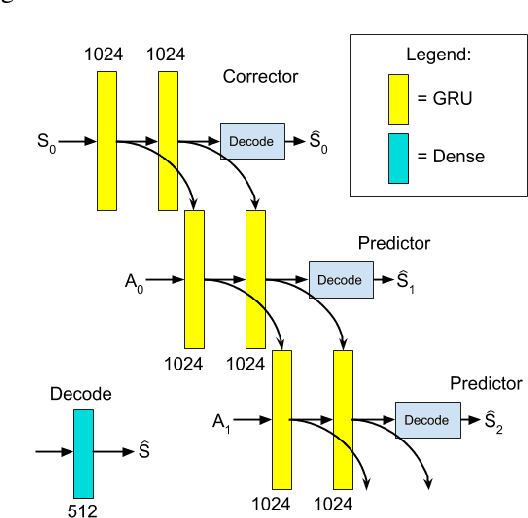

In this work we present a method for leveraging data from one source to learn how to perform multiple new tasks. Task transfer is achieved using a self-model that encapsulates the dynamics of a system and serves as an environment for reinforcement learning. To study this approach, we train self-models on various robot morphologies, using randomly sampled actions. Given a self-model, an initial state, and the corresponding actions, we can predict the next state. This predictive self-model is then used by a standard reinforcement learning algorithm to accomplish tasks without ever seeing a state from the "real" environment. The resulting policies allow the robots to successfully achieve their goals in the "real" environment. We demonstrate not only that training on the self-model is far more data-efficient than learning even a single task, but also that it allows new tasks to be learned without any additional data collection, essentially enabling zero-shot learning of new tasks.
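The pipeline described in the abstract (random actions, then a fitted self-model, then policies trained only inside that model, then evaluation in the "real" environment) can be illustrated with a toy sketch. This is not the authors' implementation: it assumes a one-dimensional, noise-free linear system in place of a simulated robot, a least-squares fit in place of a learned self-model, and simple hill climbing in place of a standard reinforcement learning algorithm. All function names and constants here are hypothetical.

```python
import random

def real_step(x, u):
    """Hypothetical 'real' dynamics; the learner never reads these constants."""
    return 0.9 * x + 0.5 * u

def collect_random_transitions(n, rng):
    """Drive the real system with random actions and log (x, u, x_next)."""
    data, x = [], 0.0
    for _ in range(n):
        u = rng.uniform(-1.0, 1.0)
        x_next = real_step(x, u)
        data.append((x, u, x_next))
        x = x_next
    return data

def fit_self_model(data):
    """Least-squares fit of x_next ~ a*x + b*u via the 2x2 normal equations."""
    sxx = sum(x * x for x, u, y in data)
    sxu = sum(x * u for x, u, y in data)
    suu = sum(u * u for x, u, y in data)
    sxy = sum(x * y for x, u, y in data)
    suy = sum(u * y for x, u, y in data)
    det = sxx * suu - sxu * sxu
    a = (sxy * suu - suy * sxu) / det
    b = (suy * sxx - sxy * sxu) / det
    return lambda x, u: a * x + b * u

def rollout_cost(step, policy, goal, horizon=30):
    """Sum of squared distances to the goal when running `policy` on `step`."""
    gain, bias = policy
    x, cost = 0.0, 0.0
    for _ in range(horizon):
        # Assumed policy form: clipped proportional control plus a bias term.
        u = max(-1.0, min(1.0, gain * (goal - x) + bias))
        x = step(x, u)
        cost += (x - goal) ** 2
    return cost

def train_on_self_model(model, goal, rng, iters=300):
    """Hill-climb the policy parameters using only self-model rollouts."""
    best = (0.0, 0.0)
    best_cost = rollout_cost(model, best, goal)
    for _ in range(iters):
        cand = (best[0] + rng.gauss(0, 0.3), best[1] + rng.gauss(0, 0.3))
        cost = rollout_cost(model, cand, goal)
        if cost < best_cost:
            best, best_cost = cand, cost
    return best

if __name__ == "__main__":
    rng = random.Random(0)
    # Real data is collected once, with random actions only.
    model = fit_self_model(collect_random_transitions(200, rng))
    for goal in (0.5, -0.8):  # each new task reuses the same self-model
        policy = train_on_self_model(model, goal, rng)
        print(goal, rollout_cost(real_step, policy, goal))
```

Note that `real_step` is called only during data collection and final evaluation; each goal is trained entirely against `model`. Because the toy system is linear and noise-free, the fitted model matches it almost exactly, which a learned self-model of a robot would not.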
