Your diffusion model secretly knows the dimension of the data manifold


Dec 23, 2022

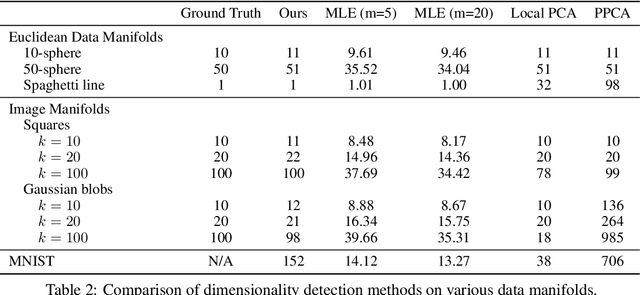

In this work, we propose a novel framework for estimating the dimension of the data manifold using a trained diffusion model. A trained diffusion model approximates the gradient of the log density (the score function) of a noise-corrupted version of the target distribution at varying levels of corruption. If the data concentrate around a manifold embedded in a high-dimensional ambient space, then as the level of corruption decreases the score function points towards the manifold, because this becomes the direction of maximum likelihood increase. For small levels of corruption, the diffusion model therefore gives us access to an approximation of the normal bundle of the data manifold. This allows us to estimate the dimension of the tangent space and hence the intrinsic dimension of the data manifold. Our method outperforms linear methods for dimensionality detection, such as PPCA, in controlled experiments.
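The idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation. It replaces the trained diffusion model with an analytic score for a toy case: standard Gaussian data on a k-dimensional linear subspace of R^d, corrupted by Gaussian noise of scale `sigma`. The names `make_toy_score` and `estimate_intrinsic_dim`, and the choice to locate the spectral gap by the largest drop between consecutive singular values, are assumptions made for illustration only.

```python
import numpy as np

def make_toy_score(d, k, sigma, rng):
    """Analytic score of N(0, Q diag(var) Q^T): a stand-in for a trained model.

    Hypothetical helper: the data lie on a random k-dim subspace of R^d.
    """
    q, _ = np.linalg.qr(rng.standard_normal((d, d)))  # random orthonormal basis
    # Variance along tangent directions is 1 + sigma^2, along normal ones sigma^2.
    var = np.concatenate([np.ones(k) + sigma**2, np.full(d - k, sigma**2)])
    precision = (q / var) @ q.T  # Q diag(1/var) Q^T, symmetric

    def score(x):
        # Gradient of the log density of a zero-mean Gaussian: -Sigma^{-1} x.
        return -x @ precision

    return score, q[:, :k]  # score function and a basis of the subspace

def estimate_intrinsic_dim(score, x0, sigma, n_samples, rng):
    """Estimate the manifold dimension at x0 from score vectors near x0."""
    d = x0.shape[0]
    # Perturb x0 with small noise and evaluate the score at each sample.
    x = x0 + sigma * rng.standard_normal((n_samples, d))
    scores = score(x)  # shape (n_samples, d)
    # Score vectors concentrate in the normal space, so the large singular
    # values count normal directions; the small ones are tangent directions.
    sv = np.linalg.svd(scores, compute_uv=False)
    drops = sv[:-1] - sv[1:]
    normal_dim = int(np.argmax(drops)) + 1  # assumed gap rule: largest drop
    return d - normal_dim, sv

rng = np.random.default_rng(0)
d, k, sigma = 10, 3, 1e-2
score, basis = make_toy_score(d, k, sigma, rng)
x0 = basis @ rng.standard_normal(k)  # a point on the subspace
est_dim, sv = estimate_intrinsic_dim(score, x0, sigma, 1000, rng)
print("estimated intrinsic dimension:", est_dim)
```

In this toy setting the normal components of the score scale like 1/sigma while the tangent components stay of order one, which is what produces the gap in the singular value spectrum. With a real trained model, `score` would be replaced by the network evaluated at a small diffusion time, and the gap may be less sharp.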
