You Are Here: Geolocation by Embedding Maps and Images


Nov 20, 2019

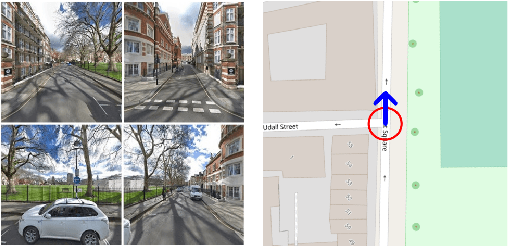

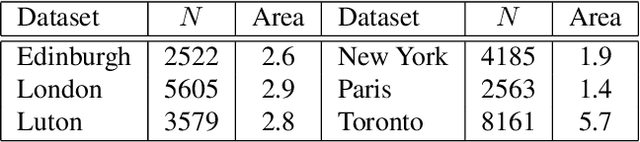

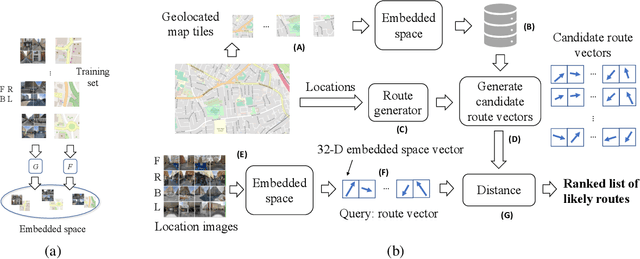

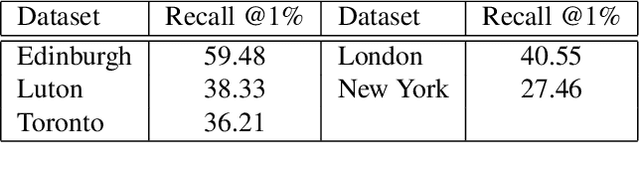

We present a novel approach to geolocating images on a 2-D map by learning a low-dimensional embedding space in which an image captured at a location can be compared with local neighbourhoods of the map. The representation is not discriminative enough to allow localisation from a single image, but when representations are concatenated along a route, localisation converges quickly, with over 90% accuracy achieved for routes of up to 200 m in length when using Google Street View and Open Street Map data. The approach generalises a previous approach based on fixed semantic features, and achieves faster convergence and higher accuracy without needing turn information.
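The route-matching idea can be illustrated with a minimal sketch. It is not the authors' implementation: the embeddings here are random synthetic vectors rather than outputs of a learned network, candidate routes are treated as independent (real routes share map tiles), and the names `rank_routes` and `simulate`, the Euclidean distance, and all sizes are assumptions chosen for illustration. The sketch only shows how concatenating per-location descriptors along a route and comparing them with candidate routes on the map might narrow down the location.

```python
import numpy as np


def rank_routes(image_embs, candidate_map_embs):
    """Rank candidate routes by distance to the observed route.

    image_embs:         (T, D) embeddings of the T images along the route.
    candidate_map_embs: (R, T, D) map-tile embeddings for R candidate routes.
    Returns candidate indices sorted from best to worst match.
    """
    # Concatenate the per-location descriptors into one route descriptor.
    query = image_embs.reshape(-1)                              # (T*D,)
    candidates = candidate_map_embs.reshape(
        candidate_map_embs.shape[0], -1)                        # (R, T*D)
    # Euclidean distance is an assumption made for this sketch.
    dists = np.linalg.norm(candidates - query, axis=1)
    return np.argsort(dists)


def simulate(num_routes=500, route_len=20, dim=16, noise=1.0, seed=0):
    """Build synthetic data: random map embeddings plus noisy image embeddings."""
    rng = np.random.default_rng(seed)
    map_embs = rng.normal(size=(num_routes, route_len, dim))
    true_idx = 123  # hypothetical ground-truth route
    image_embs = map_embs[true_idx] + noise * rng.normal(size=(route_len, dim))
    return map_embs, image_embs, true_idx


if __name__ == "__main__":
    map_embs, image_embs, true_idx = simulate()
    # Match on progressively longer route prefixes and report where the
    # true route lands in the ranking (0 means it is the top match).
    for t in (1, 5, 10, 20):
        order = rank_routes(image_embs[:t], map_embs[:, :t])
        rank = int(np.where(order == true_idx)[0][0])
        print(f"route length {t:2d}: rank of true route = {rank}")
```

In this toy setting, each added location contributes further evidence to the concatenated descriptor, which is the intuition behind localisation converging as the route grows.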
