XFL: eXtreme Function Labeling


Jul 28, 2021

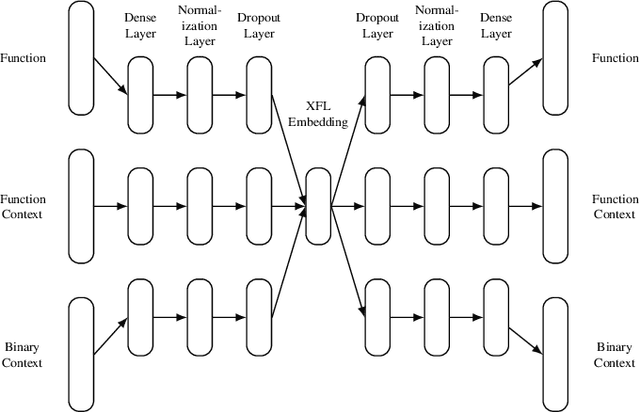

Reverse engineers would benefit from identifiers like function names, but these are usually unavailable in binaries. Training a machine learning model to predict function names automatically is promising but fundamentally hard due to the enormous number of classes. In this paper, we introduce eXtreme Function Labeling (XFL), an extreme multi-label learning approach to selecting appropriate labels for binary functions. XFL splits function names into tokens, treating each as an informative label akin to the problem of tagging texts in natural language. To capture the semantics of binary code, we introduce DEXTER, a novel function embedding that combines static analysis-based features with local context from the call graph and global context from the entire binary. We demonstrate that XFL outperforms state-of-the-art approaches to function labeling on a dataset of over 10,000 binaries from the Debian project, achieving a precision of 82.5%. We also study combinations of XFL with different published embeddings for binary functions and show that DEXTER consistently improves over the state of the art in information gain. As a result, we are able to show that binary function labeling is best phrased in terms of multi-label learning, and that binary function embeddings benefit from moving beyond just learning from syntax.
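The multi-label framing described above can be illustrated with a minimal Python sketch. It assumes a simple tokenizer that splits on underscores, digits, and camelCase boundaries. The abstract does not specify XFL's actual tokenization, and the helper names `tokenize_name` and `build_label_matrix` are hypothetical. Each function name becomes a set of tokens, and each token becomes one column of a multi-hot label matrix, which is the target format for a multi-label learner.

```python
import re


def tokenize_name(name):
    """Split a function name into lowercase tokens.

    Assumption: underscores, digits, and camelCase boundaries separate
    tokens. This is an illustrative rule, not XFL's actual tokenizer.
    """
    tokens = []
    for part in re.split(r"[_\W\d]+", name):
        # Keep acronym runs (e.g. "HTTP") together, then split Capitalized words.
        tokens.extend(re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+", part))
    return [t.lower() for t in tokens if t]


def build_label_matrix(names):
    """Return (vocab, matrix), where matrix[i][j] == 1 if name i contains token j."""
    token_lists = [tokenize_name(n) for n in names]
    vocab = sorted({t for ts in token_lists for t in ts})
    index = {t: i for i, t in enumerate(vocab)}
    matrix = []
    for ts in token_lists:
        row = [0] * len(vocab)
        for t in ts:
            row[index[t]] = 1
        matrix.append(row)
    return vocab, matrix


# Hypothetical function names, chosen only for illustration.
names = ["read_config_file", "parseHTTPHeader", "write_file"]
vocab, matrix = build_label_matrix(names)
for name, row in zip(names, matrix):
    print(name, row)
```

With this framing, the label space is the token vocabulary rather than the set of whole function names, so two functions such as `read_config_file` and `write_file` share the label `file` instead of belonging to unrelated classes.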
