XEdgeAI: A Human-centered Industrial Inspection Framework with Data-centric Explainable Edge AI Approach


Jul 16, 2024

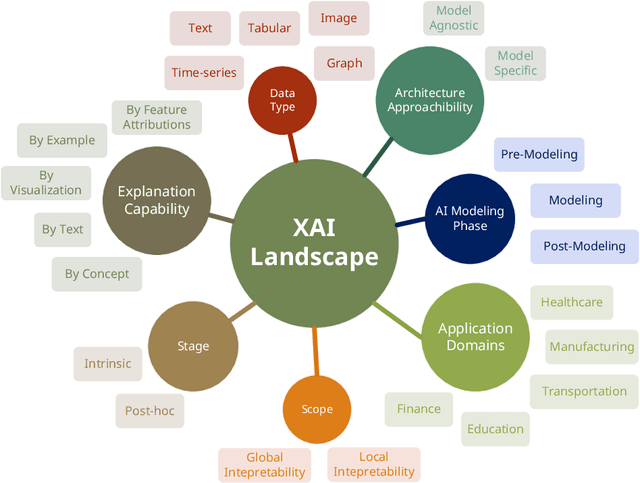

Recent advancements in deep learning have significantly improved visual quality inspection and predictive maintenance within industrial settings. However, deploying these technologies on low-resource edge devices poses substantial challenges due to their high computational demands and the inherent complexity of Explainable AI (XAI) methods. This paper addresses these challenges by introducing a novel XAI-integrated Visual Quality Inspection framework that optimizes the deployment of semantic segmentation models on low-resource edge devices. Our framework combines XAI with a Large Vision Language Model to deliver human-centered interpretability to end-users through visual and textual explanations, which is crucial for end-user trust. We outline a comprehensive methodology consisting of six fundamental modules: base model fine-tuning, XAI-based explanation generation, evaluation of XAI approaches, XAI-guided data augmentation, development of an edge-compatible model, and the generation of understandable visual and textual explanations. The model enhanced through XAI-guided data augmentation, which incorporates domain expert knowledge, is successfully deployed on mobile devices together with visual and textual explanations to support end-users in real-world scenarios. Experimental results demonstrate the effectiveness of the proposed framework, with the mobile model achieving competitive accuracy while significantly reducing model size. This approach paves the way for the broader adoption of reliable and interpretable AI tools in critical industrial applications, where decisions must be both rapid and justifiable.
