Wider Vision: Enriching Convolutional Neural Networks via Alignment to External Knowledge Bases

Paper and Code

Feb 22, 2021

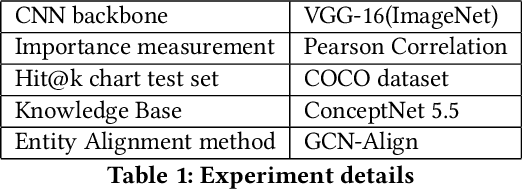

Deep learning models suffer from opaqueness. For Convolutional Neural Networks (CNNs), current research strategies for explaining models focus on the target classes within the associated training dataset. As a result, the understanding of hidden feature map activations is limited by the discriminative knowledge gleaned during training. The aim of our work is to explain and expand CNNs models via the mirroring or alignment of CNN to an external knowledge base. This will allow us to give a semantic context or label for each visual feature. We can match CNN feature activations to nodes in our external knowledge base. This supports knowledge-based interpretation of the features associated with model decisions. To demonstrate our approach, we build two separate graphs. We use an entity alignment method to align the feature nodes in a CNN with the nodes in a ConceptNet based knowledge graph. We then measure the proximity of CNN graph nodes to semantically meaningful knowledge base nodes. Our results show that in the aligned embedding space, nodes from the knowledge graph are close to the CNN feature nodes that have similar meanings, indicating that nodes from an external knowledge base can act as explanatory semantic references for features in the model. We analyse a variety of graph building methods in order to improve the results from our embedding space. We further demonstrate that by using hierarchical relationships from our external knowledge base, we can locate new unseen classes outside the CNN training set in our embeddings space, based on visual feature activations. This suggests that we can adapt our approach to identify unseen classes based on CNN feature activations. Our demonstrated approach of aligning a CNN with an external knowledge base paves the way to reason about and beyond the trained model, with future adaptations to explainable models and zero-shot learning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge