Why can't memory networks read effectively?

Oct 16, 2019

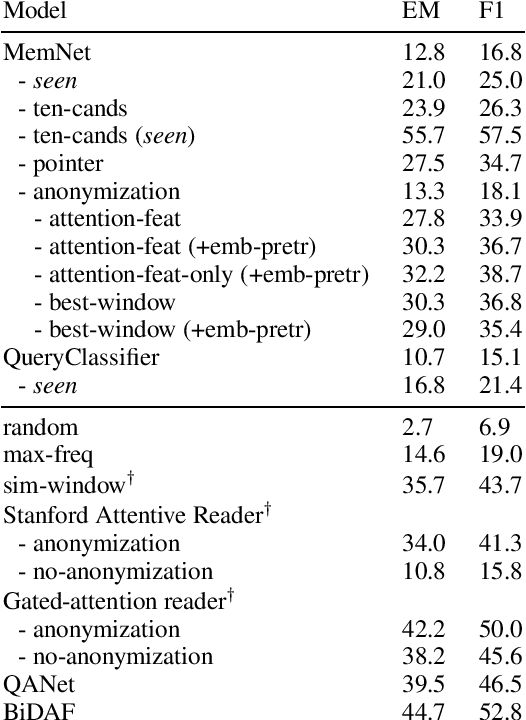

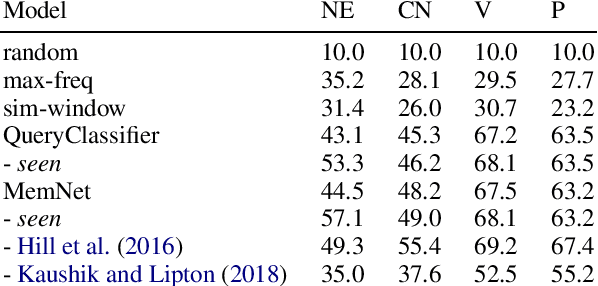

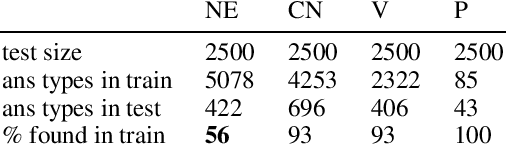

Memory networks have been a popular choice among neural architectures for machine reading comprehension and question answering. While recent work revealed that memory networks cannot truly perform multi-hop reasoning, we show in the present paper that vanilla memory networks are ineffective even in single-hop reading comprehension. We analyze the reasons for this on two cloze-style datasets, one from the medical domain and another including children's fiction. We find that the output classification layer with entity-specific weights and the aggregation of passage information with relatively flat attention distributions are the most important contributors to poor results. We propose network adaptations that can serve as simple remedies. We also find that the presence of unseen answers at test time can dramatically affect the reported results, so we suggest controlling for this factor during evaluation.
