Who is Undercover? Guiding LLMs to Explore Multi-Perspective Team Tactic in the Game

Oct 20, 2024

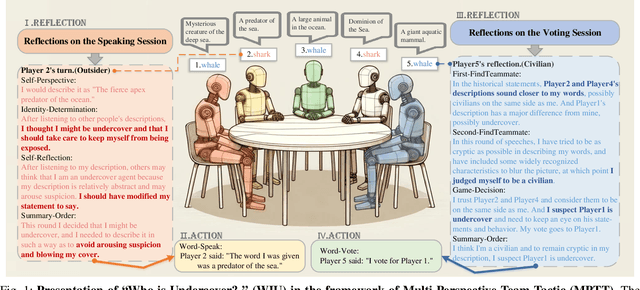

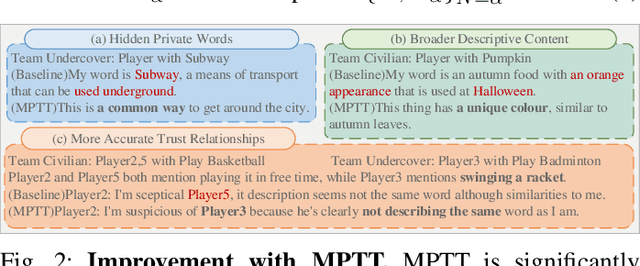

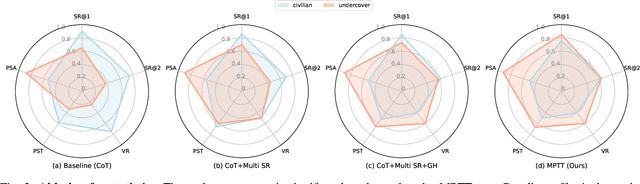

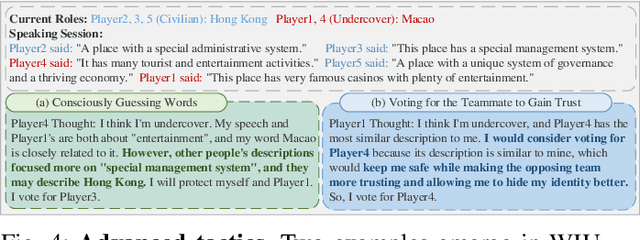

Large Language Models (LLMs) are pivotal AI agents in complex tasks but still face challenges with open-ended decision-making problems in complex scenarios. To address this, we use the language logic game "Who is Undercover?" (WIU) as an experimental platform and propose the Multi-Perspective Team Tactic (MPTT) framework. MPTT aims to cultivate LLMs' human-like language expression logic, multi-dimensional thinking, and self-perception in complex scenarios. By alternating speaking and voting sessions and integrating techniques such as self-perspective, identity-determination, self-reflection, self-summary, and multi-round find-teammates, LLM agents make rational decisions through strategic concealment and communication, fostering human-like trust. Preliminary results show that MPTT, combined with WIU, leverages LLMs' cognitive capabilities to create a decision-making framework that can simulate real society. This framework aids minority groups in communication and expression, promoting fairness and diversity in decision-making. Additionally, our human-in-the-loop experiments demonstrate that LLMs can learn and align with human behaviors through interaction, indicating their potential for active participation in societal decision-making.
