Where to Look Next: Unsupervised Active Visual Exploration on 360° Input

Paper and Code

Sep 23, 2019

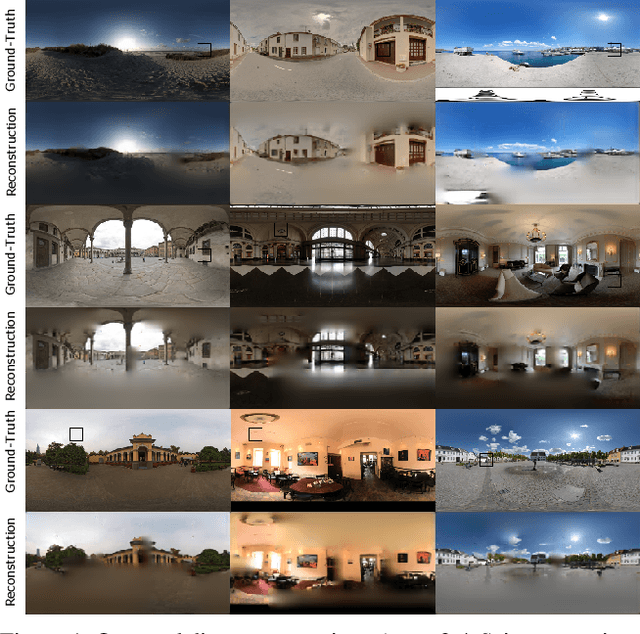

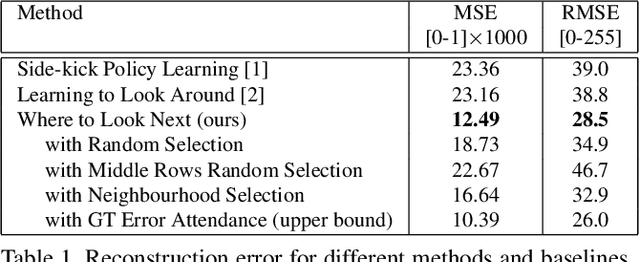

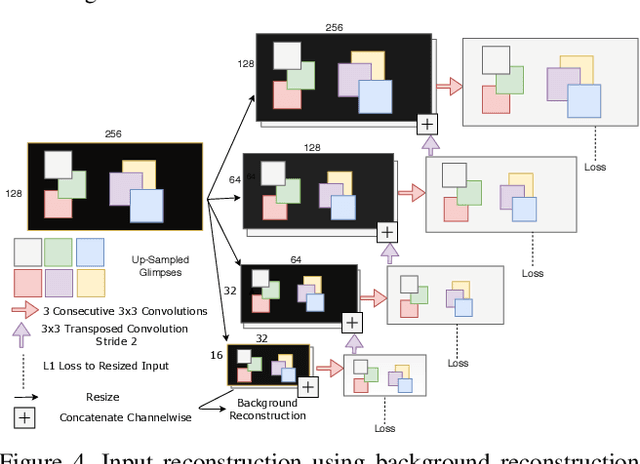

We address the problem of active visual exploration of large 360° inputs. In our setting, an active agent with limited camera bandwidth explores its 360° environment by changing its viewing direction at a limited number of discrete time steps. As such, it observes the world as a sequence of narrow field-of-view 'glimpses', deciding for itself where to look next. Our proposed method exceeds the performance of previous work by a significant margin, without the need for deep reinforcement learning or for training separate networks as sidekicks. A key component of our system is the set of spatial memory maps, which make the system aware of the glimpses' orientations (their locations in the 360° image). Further, we stress the advantages of retina-like glimpses when the agent's sensor bandwidth and number of time steps are limited. Finally, we use our trained model to classify the whole scene using only the information observed in the glimpses.
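
To make the idea of a spatial memory map concrete, here is a minimal Python sketch. It is not the authors' implementation: the grid size, the feature dimension, the names `SpatialMemoryMap`, `explore`, and `dummy_encoder`, and the random choice of the next viewing direction are all assumptions made for illustration. It only shows how glimpse features could be stored at the grid cell matching their orientation, so that later processing knows where each glimpse was taken.

```python
import numpy as np


class SpatialMemoryMap:
    """Hypothetical memory: one feature vector per discrete viewing direction."""

    def __init__(self, n_elev=4, n_azim=8, feat_dim=16):
        # Grid of viewing directions (elevation x azimuth); sizes are assumed.
        self.features = np.zeros((n_elev, n_azim, feat_dim), dtype=np.float32)
        self.visited = np.zeros((n_elev, n_azim), dtype=bool)

    def write(self, elev, azim, feature):
        # Azimuth wraps around, since the input covers a full 360 degrees.
        azim = azim % self.visited.shape[1]
        self.features[elev, azim] = feature
        self.visited[elev, azim] = True

    def unvisited(self):
        # Grid cells the agent has not looked at yet.
        return [tuple(int(v) for v in idx) for idx in np.argwhere(~self.visited)]


def explore(encode_glimpse, n_steps=6, seed=0):
    """Take n_steps glimpses and store each at its own location in memory.

    The next direction is picked at random among unvisited cells; this is a
    placeholder policy, not the learned policy described in the abstract.
    """
    rng = np.random.default_rng(seed)
    memory = SpatialMemoryMap()
    for _ in range(n_steps):
        candidates = memory.unvisited()
        elev, azim = candidates[rng.integers(len(candidates))]
        memory.write(elev, azim, encode_glimpse(elev, azim))
    return memory


def dummy_encoder(elev, azim, feat_dim=16):
    # Stand-in for a glimpse encoder: a constant vector tagged by location.
    return np.full(feat_dim, elev * 10 + azim, dtype=np.float32)


memory = explore(dummy_encoder, n_steps=6)
```

After the loop, `memory.visited` marks which viewing directions were observed, and `memory.features` holds the corresponding glimpse features at those positions, leaving unobserved cells at zero.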
