Where's YOUR focus: Personalized Attention

Paper and Code

Feb 22, 2018

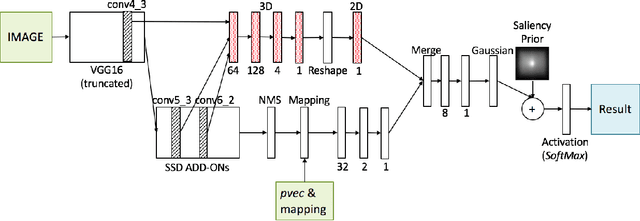

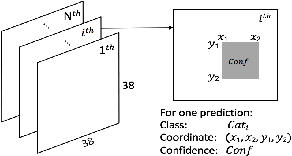

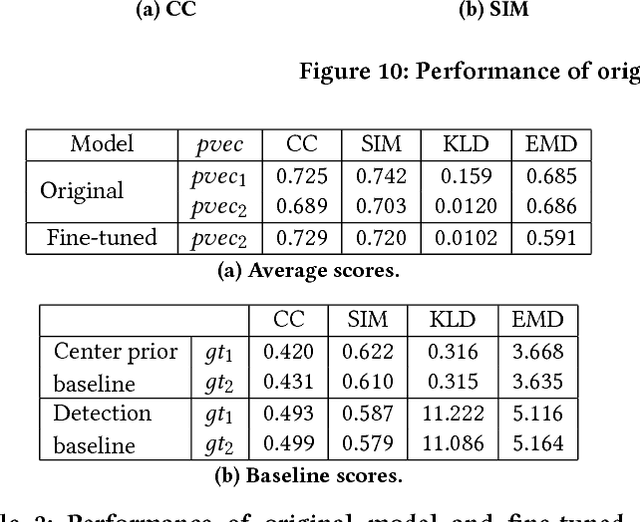

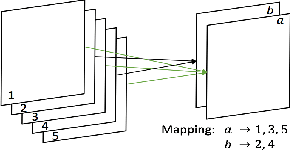

Human visual attention is subjective and biased by the personal preferences of the viewer. Current saliency detection work, however, is general and objective and does not account for the observer, which makes attention prediction for a particular person less accurate. In this work, we present the novel idea of personalized attention prediction and develop the Personalized Attention Network (PANet), a convolutional network that predicts saliency in images according to personal preference. The model consists of two streams that share common feature extraction layers: one stream is responsible for saliency prediction, while the other is adapted from a detection model and used to fit user preference. We automatically collect user preferences from their albums and leave users free to define what categories their preferences are divided into, and how many. To train PANet, we dynamically generate ground truth saliency maps from existing detection labels and saliency labels, with generation parameters based on our collected dataset of 1k images. We evaluate the model with saliency prediction metrics and test the trained model on different preference vectors. The results show that our system is much better than general models at personalized saliency prediction and is efficient to use for different preferences.
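The abstract does not specify how ground truth maps are generated from detection and saliency labels, so the following is only a minimal sketch of one plausible scheme, not the authors' method. It assumes a general saliency map on a small grid, detection labels given as category-tagged boxes, and a preference vector stored as a per-category weight; the function name `personalized_saliency`, the `boost` parameter, and the multiplicative gain rule are all hypothetical.

```python
from typing import Dict, List, Tuple

# (row0, col0, row1, col1), end-exclusive. Hypothetical box convention.
Box = Tuple[int, int, int, int]


def personalized_saliency(
    base: List[List[float]],
    detections: List[Tuple[str, Box]],
    preference: Dict[str, float],
    boost: float = 1.0,
) -> List[List[float]]:
    """Re-weight a general saliency map by per-category user preference.

    Assumption (not from the paper): pixels inside a detected box are
    multiplied by 1 + boost * preference[category], and the result is
    rescaled so that its peak value is 1.
    """
    height, width = len(base), len(base[0])

    # Start with a neutral gain of 1 everywhere.
    gain = [[1.0] * width for _ in range(height)]
    for category, (r0, c0, r1, c1) in detections:
        weight = preference.get(category, 0.0)
        # Clip the box to the map and keep the largest gain where boxes overlap.
        for r in range(max(r0, 0), min(r1, height)):
            for c in range(max(c0, 0), min(c1, width)):
                gain[r][c] = max(gain[r][c], 1.0 + boost * weight)

    out = [[base[r][c] * gain[r][c] for c in range(width)] for r in range(height)]

    # Normalize so the most salient pixel is 1 (skip an all-zero map).
    peak = max(max(row) for row in out)
    if peak > 0:
        out = [[value / peak for value in row] for row in out]
    return out


# Two objects on a uniform base map; only the preference vector changes.
base_map = [[0.5, 0.5], [0.5, 0.5]]
labels = [("dog", (0, 0, 1, 1)), ("car", (1, 1, 2, 2))]
dog_lover = personalized_saliency(base_map, labels, {"dog": 1.0})
car_lover = personalized_saliency(base_map, labels, {"car": 1.0})
```

Because the maps are built on the fly from the same labels, changing the preference vector yields a different training target without re-annotating any image, which is the property the abstract attributes to dynamic generation.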
