Where Do Deep Fakes Look? Synthetic Face Detection via Gaze Tracking


Jan 04, 2021

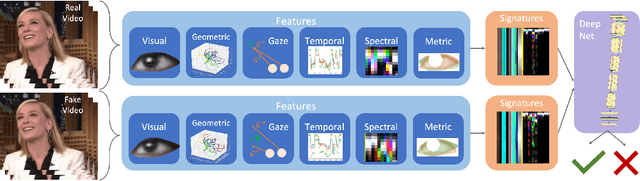

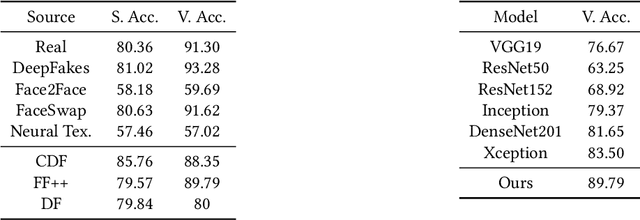

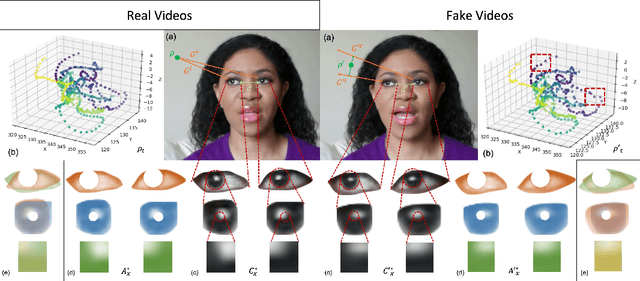

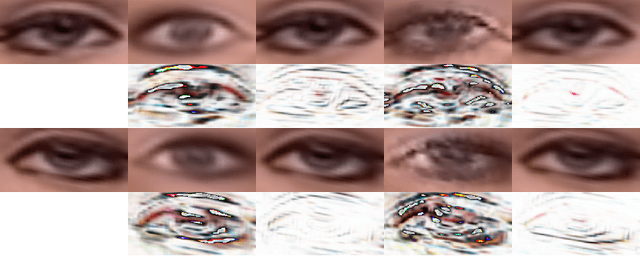

Following recent initiatives for the democratization of AI, deep fake generators have become increasingly popular and accessible, raising dystopian scenarios in which social trust erodes. Particular domains, such as biological signals, have attracted attention for detection methods that exploit authenticity signatures in real videos that generative approaches cannot yet fake. In this paper, we first propose several prominent eye and gaze features that deep fakes exhibit differently. Second, we compile those features into signatures, then analyze and compare the signatures of real and fake videos, formulating geometric, visual, metric, temporal, and spectral variations. Third, we generalize this formulation to the deep fake detection problem with a deep neural network that classifies any video in the wild as fake or real. We evaluate our approach on several deep fake datasets, achieving 89.79% accuracy on FaceForensics++, 80.0% on Deep Fakes (in the wild), and 88.35% on CelebDF. We conduct ablation studies involving different features, architectures, sequence durations, and post-processing artifacts. Our analysis shows a 6.29% accuracy improvement over complex network architectures that do not use the proposed gaze signatures.
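To make the idea of temporal and spectral variations more concrete, here is a minimal sketch of how a single gaze channel might be summarized. It is an illustration under assumptions, not the authors' implementation: the function names `power_spectrum` and `gaze_signature`, the choice of features, and the synthetic input signals are all hypothetical, and the abstract does not specify how the signatures are actually computed.

```python
import cmath
import math
from typing import Dict, List


def power_spectrum(signal: List[float]) -> List[float]:
    """Naive DFT power spectrum of a mean-centered signal (bins 0..n//2)."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(centered)
        )
        spectrum.append(abs(coeff) ** 2 / n)
    return spectrum


def gaze_signature(gaze_angles: List[float]) -> Dict[str, object]:
    """Hypothetical signature for one gaze channel (an assumption, not the paper's)."""
    # Temporal variation: mean absolute frame-to-frame change.
    deltas = [b - a for a, b in zip(gaze_angles, gaze_angles[1:])]
    mean_abs_delta = sum(abs(d) for d in deltas) / len(deltas)
    # Spectral variation: power per frequency bin, plus the strongest non-DC bin.
    spectrum = power_spectrum(gaze_angles)
    dominant_bin = max(range(1, len(spectrum)), key=lambda k: spectrum[k])
    return {
        "mean_abs_delta": mean_abs_delta,
        "spectrum": spectrum,
        "dominant_bin": dominant_bin,
    }


# Synthetic stand-ins for per-frame gaze angles (not real data).
n_frames = 64
smooth = [math.sin(2 * math.pi * 2 * t / n_frames) for t in range(n_frames)]
jittery = [
    s + 0.5 * math.sin(2 * math.pi * 20 * t / n_frames)
    for t, s in enumerate(smooth)
]

sig_smooth = gaze_signature(smooth)
sig_jittery = gaze_signature(jittery)
```

In this toy setup the two signals share the same slow motion, but the second carries extra high-frequency energy that shows up both in the frame-to-frame deltas and in the upper bins of the spectrum. A real pipeline would presumably extract gaze from video frames and feed many such features to a classifier; that part is outside what this sketch covers.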
