When Is Generalizable Reinforcement Learning Tractable?

Jan 01, 2021

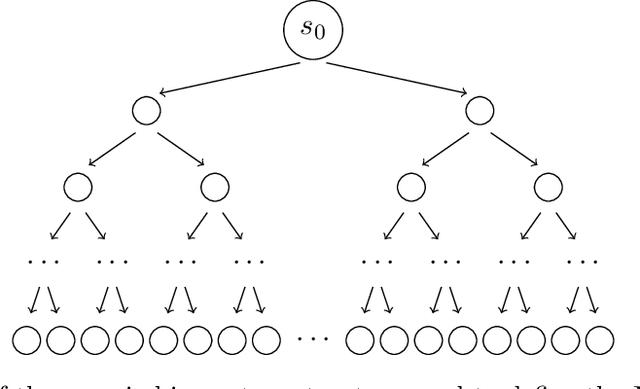

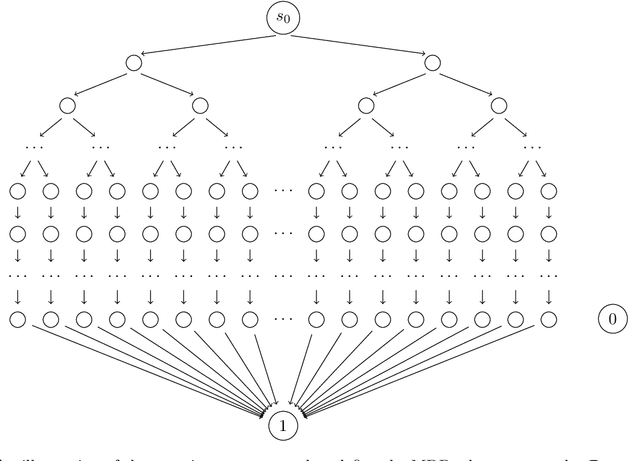

Agents trained by reinforcement learning (RL) often fail to generalize beyond the environment they were trained in, even when presented with new scenarios that seem very similar to the training environment. We study the query complexity required to train RL agents that can generalize to multiple environments. Intuitively, tractable generalization is only possible when the environments are similar or close in some sense. To capture this, we introduce Strong Proximity, a structural condition that precisely characterizes the relative closeness of different environments. We provide an algorithm that exploits Strong Proximity to generalize provably and efficiently. We also show that under a natural weakening of this condition, which we call Weak Proximity, generalization can require query complexity that is exponential in the horizon. A key consequence of our theory is that even when the environments share optimal trajectories and have highly similar reward and transition functions (as measured by classical metrics), tractable generalization is impossible.
