What's Behind the Couch? Directed Ray Distance Functions for 3D Scene Reconstruction


Dec 08, 2021

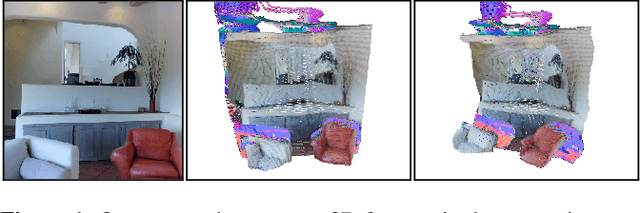

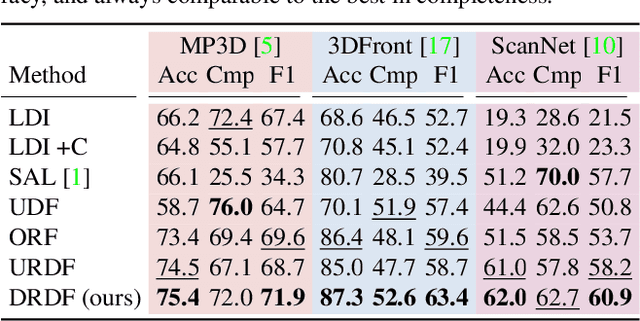

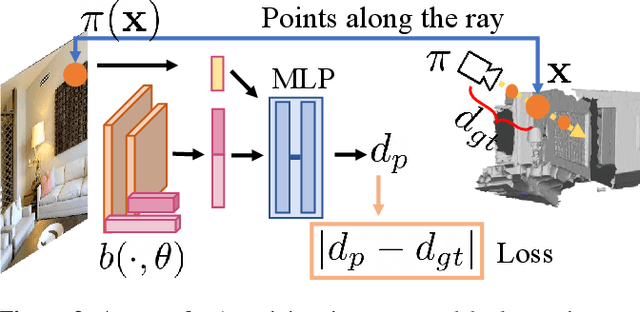

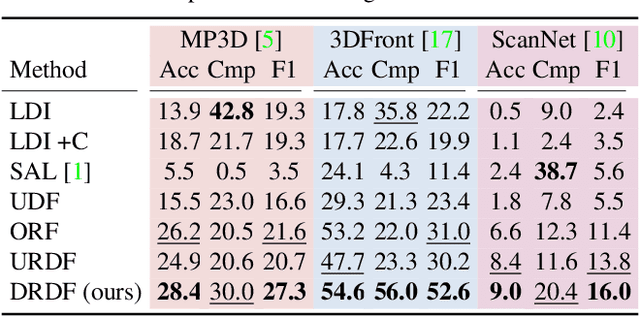

We present an approach for scene-level 3D reconstruction, including occluded regions, from an unseen RGB image. Our approach is trained on real 3D scans and images. This problem has proved difficult for multiple reasons: real scans are not watertight, precluding many methods; distances in scenes require reasoning across objects, which makes prediction harder; and, as we show, uncertainty about surface locations motivates networks to produce outputs that lack basic distance function properties. We propose a new distance-like function that can be computed on unstructured scans and has good behavior under uncertainty about surface location. Computing this function over rays reduces the complexity further. We train a deep network to predict this function and show it outperforms other methods on Matterport3D, 3D Front, and ScanNet.
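To make the idea of a distance computed over rays concrete, here is a minimal sketch. It is illustrative only: the abstract does not give the exact definition of the proposed function, so the helper `ray_distances`, its arguments, and the sign convention are all assumptions. Given the depths at which a single ray crosses scanned surfaces, it returns, for each sample point on the ray, the unsigned distance to the nearest crossing and a directed variant whose sign records whether that crossing lies ahead of or behind the sample. Because only crossings along the ray are needed, no watertight mesh or inside/outside test is required.

```python
import numpy as np

def ray_distances(z, hits):
    """Hypothetical helper, not taken from the paper.

    z    : (N,) depths of sample points along one ray
    hits : (K,) depths at which the same ray crosses a surface
    Returns (unsigned, directed), each of shape (N,).
    """
    z = np.asarray(z, dtype=float)
    hits = np.asarray(hits, dtype=float)
    if hits.size == 0:
        # Assumption: a ray that crosses no surface gets infinite distance.
        inf = np.full(z.shape, np.inf)
        return inf, inf.copy()
    # Signed offset from every sample to every crossing, shape (N, K).
    diff = hits[None, :] - z[:, None]
    # Index of the closest crossing for each sample.
    idx = np.abs(diff).argmin(axis=1)
    nearest = diff[np.arange(z.size), idx]
    unsigned = np.abs(nearest)
    # Positive: nearest crossing is ahead; negative: it is behind.
    directed = nearest
    return unsigned, directed

unsigned, directed = ray_distances([0.0, 1.0, 2.5, 4.0], hits=[2.0, 5.0])
```

In this toy ray the sample at depth 2.5 sits just behind the first surface (at depth 2.0), so its directed value is negative, while the sample at depth 4.0 is closer to the second, occluded surface at depth 5.0.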
