Watermarking Language Models through Language Models

Paper and Code

Nov 07, 2024

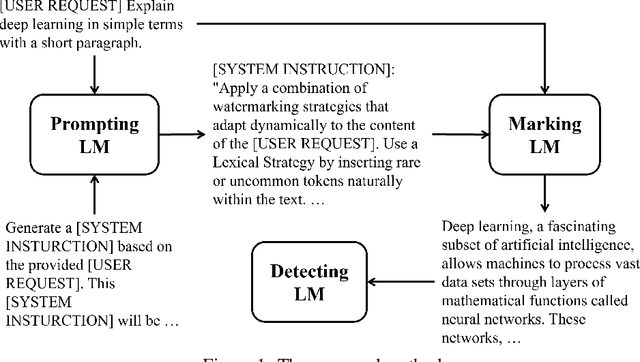

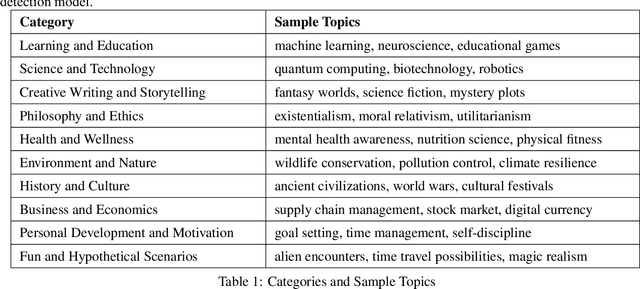

This paper presents a framework for watermarking language model output using prompts that are themselves generated by language models. The approach uses a multi-model setup: a Prompting language model generates watermarking instructions, a Marking language model embeds watermarks in the content it generates, and a Detecting language model verifies the presence of these watermarks. Experiments use ChatGPT and Mistral as the Prompting and Marking language models, with detection accuracy evaluated using a pretrained classifier model. Results show that the framework achieves high classification accuracy across various configurations, with 95% accuracy for ChatGPT and 88.79% for Mistral. These findings support the adaptability of the proposed watermarking strategy across different language model architectures. The framework therefore holds promise for applications in content attribution, copyright protection, and model authentication.
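The three-role setup described above can be illustrated with a minimal sketch. This is not the authors' implementation: the class `WatermarkPipeline`, the `toy_*` functions, and the marker word are all hypothetical stand-ins, and each "model" is reduced to a plain Python callable so the data flow between the Prompting, Marking, and Detecting roles is visible. In a real setting the callables would wrap calls to actual language models and a trained classifier.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical aliases: each "model" is modeled as a simple callable.
TextModel = Callable[[str], str]
Detector = Callable[[str], bool]


@dataclass
class WatermarkPipeline:
    prompting_lm: TextModel  # produces a watermarking instruction
    marking_lm: TextModel    # generates content while following the instruction
    detecting_lm: Detector   # classifies text as watermarked or not

    def generate(self, user_query: str) -> str:
        # The instruction is prepended to the query before it reaches the Marking model.
        instruction = self.prompting_lm(user_query)
        return self.marking_lm(f"{instruction}\n\n{user_query}")

    def is_watermarked(self, text: str) -> bool:
        return self.detecting_lm(text)


# Toy stand-ins (assumptions for illustration only, not real models).
MARKER = "indeed"


def toy_prompting_lm(query: str) -> str:
    return f"Begin your answer with the word '{MARKER}'."


def toy_marking_lm(prompt: str) -> str:
    # The last line of the combined prompt is the user query.
    query = prompt.splitlines()[-1]
    return f"{MARKER.capitalize()}, here is a reply to: {query}"


def toy_detecting_lm(text: str) -> bool:
    # A real detector would be a trained classifier; this only checks the marker.
    return text.lower().startswith(MARKER)


pipeline = WatermarkPipeline(toy_prompting_lm, toy_marking_lm, toy_detecting_lm)
marked = pipeline.generate("Summarize the water cycle.")
```

Keeping the three roles as separate, swappable callables mirrors the abstract's point that different models (for example ChatGPT or Mistral) can fill the Prompting and Marking roles without changing the rest of the pipeline.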
