Vulnerability of Face Recognition Systems Against Composite Face Reconstruction Attack


Aug 23, 2020

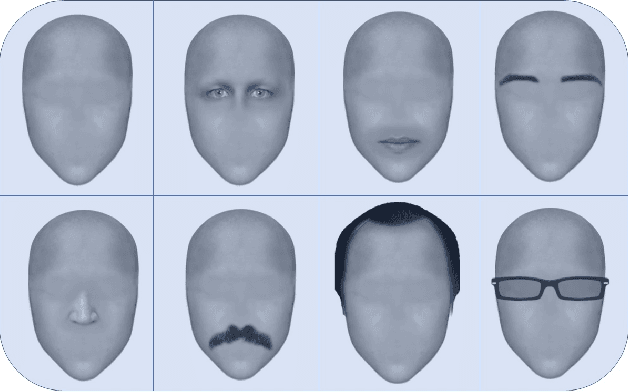

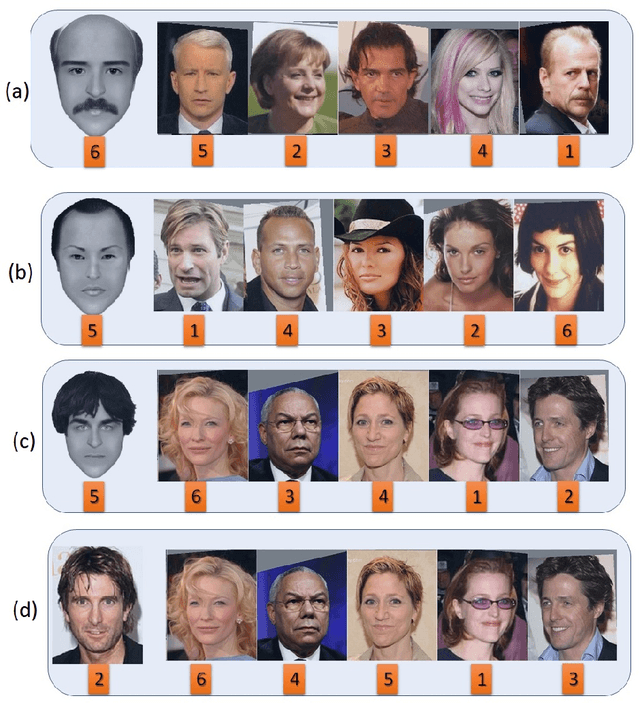

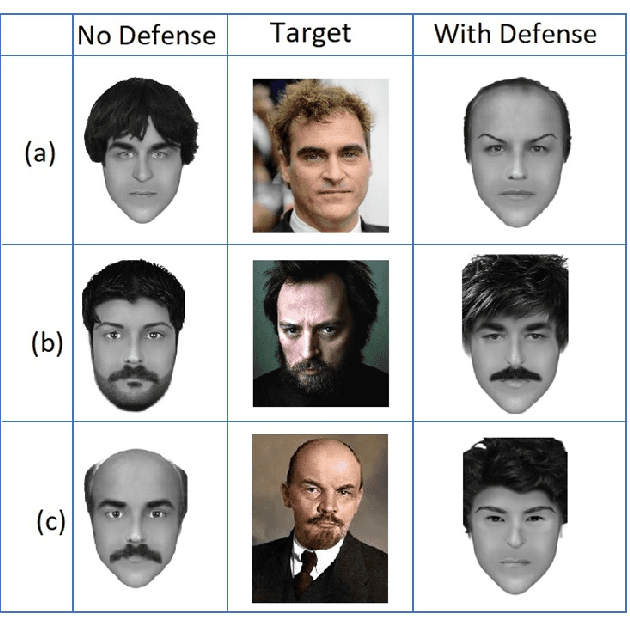

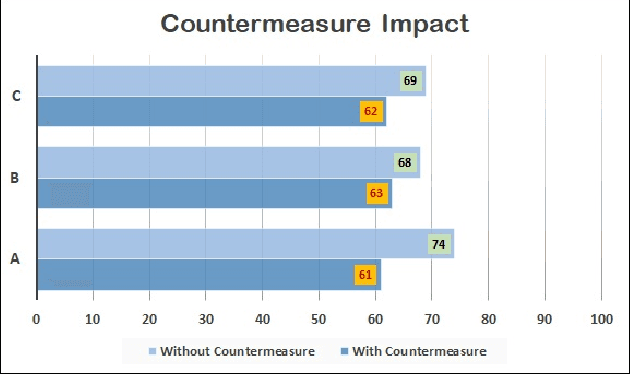

Rounding confidence scores is considered a trivial yet simple and effective countermeasure against gradient-descent-based image reconstruction attacks. However, its effectiveness against more sophisticated reconstruction attacks has not been investigated. In this paper, we demonstrate that face reconstruction attacks based on composite faces expose the ineffectiveness of the rounding policy as a countermeasure. We assume that the attacker exploits composite face parts, which give access to the most important features of the face or allow it to be decomposed into independent segments. The decomposed segments are then used as search parameters to create a search path toward the optimal reconstructed face. Composite face parts enable the attacker to violate the privacy of face recognition models even with a blind search. We further assume that the attacker may use random search to reconstruct the target face faster. The algorithm starts with a random composition of face parts as the initial face and uses the confidence score as the fitness value. Our experiments show that, because the rounding policy cannot stop the random search process, current face recognition systems are extremely vulnerable to such sophisticated attacks. To address this problem, we successfully test Face Detection Score Filtering (FDSF) as a countermeasure that protects the privacy of training data against the proposed attack.
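The random search described in the abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the part catalogue `PART_OPTIONS`, the toy oracle `make_toy_oracle`, and the acceptance rule are all assumptions made for illustration. The oracle stands in for a face recognition model that returns only a rounded confidence score. The search starts from a random composition of parts and uses that rounded score as the fitness value.

```python
import random

# Hypothetical catalogue: number of available variants per face segment.
PART_OPTIONS = {"eyes": 8, "nose": 8, "mouth": 8, "jaw": 8, "hair": 8}


def make_toy_oracle(target, decimals=1):
    """Toy stand-in for a recognition model that returns a rounded score.

    The score is the fraction of segments matching the target face,
    rounded to `decimals` places to mimic the rounding countermeasure.
    """
    def confidence(face):
        matches = sum(face[part] == target[part] for part in target)
        return round(matches / len(target), decimals)
    return confidence


def random_search(confidence, part_options, iterations=2000, seed=0):
    """Reconstruct a face by randomly swapping one segment at a time."""
    rng = random.Random(seed)
    parts = list(part_options)
    # Initial face: a random composition of face parts.
    face = {part: rng.randrange(part_options[part]) for part in parts}
    best = confidence(face)
    for _ in range(iterations):
        part = rng.choice(parts)
        candidate = dict(face)
        candidate[part] = rng.randrange(part_options[part])
        score = confidence(candidate)
        # The rounded confidence score is the fitness value; keep the
        # candidate whenever the score does not decrease.
        if score >= best:
            face, best = candidate, score
    return face, best


target = {part: 3 for part in PART_OPTIONS}
oracle = make_toy_oracle(target)
reconstructed, score = random_search(oracle, PART_OPTIONS)
print(reconstructed, score)
```

The search never reads a gradient; it only compares scores between candidates. This suggests why rounding alone would not be expected to stop it in a setting like this one. The sketch does not model FDSF or a real face recognition system.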
