Visual Story Generation Based on Emotion and Keywords


Jan 07, 2023

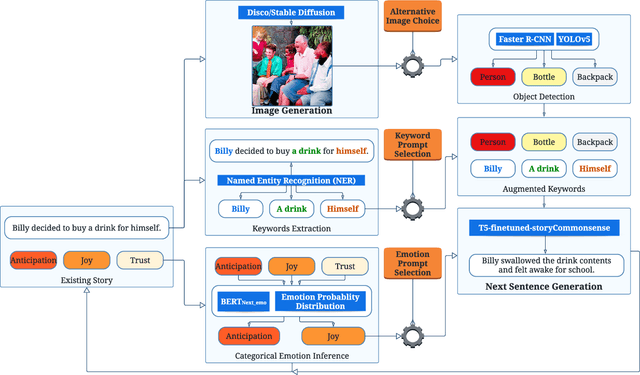

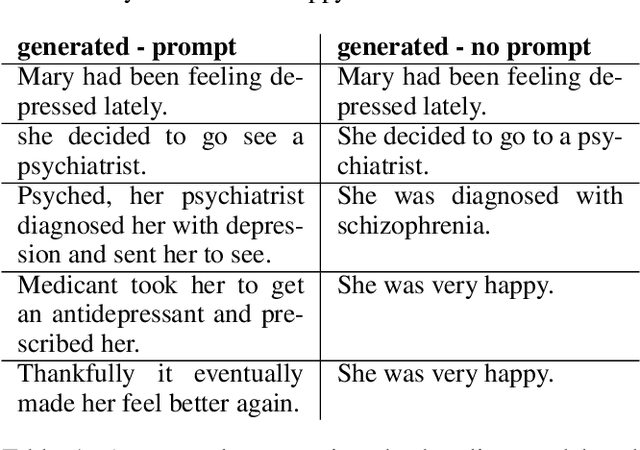

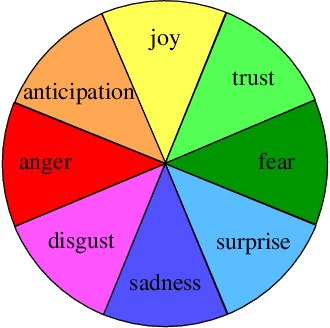

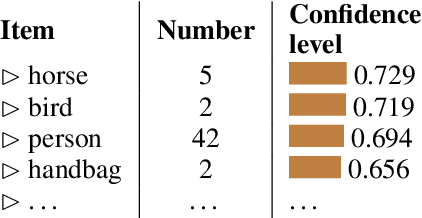

Automated visual story generation aims to produce stories with corresponding illustrations that exhibit coherence, progression, and adherence to characters' emotional development. This work proposes a story generation pipeline for co-creating visual stories with users. The pipeline lets the user control the events and emotions in the generated content. It has two parts: narrative generation and image generation. For narrative generation, the system generates the next sentence from user-specified keywords and emotion labels. For image generation, diffusion models create a visually appealing image corresponding to each generated sentence. In addition, object recognition is applied to the generated images so that objects appearing in them can be mentioned in later story development.
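The control flow described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the names `StoryStep`, `VisualStory`, and `next_step`, and the three callables passed in, are hypothetical placeholders for a keyword- and emotion-conditioned text generator, a diffusion-based image generator, and an object recognizer. The toy stand-ins at the bottom exist only so the loop can run without any models.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class StoryStep:
    sentence: str
    image: str           # placeholder for an image handle (path, array, ...)
    objects: List[str]   # objects recognized in the generated image


@dataclass
class VisualStory:
    steps: List[StoryStep] = field(default_factory=list)

    def known_objects(self) -> List[str]:
        # Objects seen so far, in first-seen order, without duplicates.
        seen: List[str] = []
        for step in self.steps:
            for obj in step.objects:
                if obj not in seen:
                    seen.append(obj)
        return seen


def next_step(
    story: VisualStory,
    keywords: List[str],
    emotion: str,
    generate_sentence: Callable[[List[str], List[str], str, List[str]], str],
    generate_image: Callable[[str], str],
    detect_objects: Callable[[str], List[str]],
) -> StoryStep:
    # 1. Narrative: next sentence from the story so far, the user's keywords
    #    and emotion label, and objects recognized in earlier images.
    context = [s.sentence for s in story.steps]
    sentence = generate_sentence(context, keywords, emotion, story.known_objects())
    # 2. Image: one illustration per generated sentence.
    image = generate_image(sentence)
    # 3. Object recognition: feed detected objects back into later steps.
    objects = detect_objects(image)
    step = StoryStep(sentence, image, objects)
    story.steps.append(step)
    return step


# Toy stand-ins (assumptions for illustration only, not real models).
def toy_sentence(context, keywords, emotion, objects):
    return f"[{emotion}] " + " ".join(keywords)


def toy_image(sentence):
    return f"image-for:{sentence}"


def toy_detect(image):
    return [w for w in ("dog", "ball") if w in image]


if __name__ == "__main__":
    story = VisualStory()
    next_step(story, ["dog", "park"], "joy", toy_sentence, toy_image, toy_detect)
    next_step(story, ["ball"], "sad", toy_sentence, toy_image, toy_detect)
    for s in story.steps:
        print(s.sentence, s.objects)
```

Passing the three stages in as callables keeps the loop independent of any particular language model, diffusion model, or detector, none of which the abstract names.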
