Visual speech recognition: aligning terminologies for better understanding

Oct 03, 2017

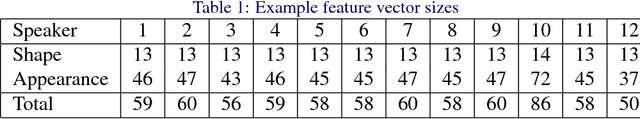

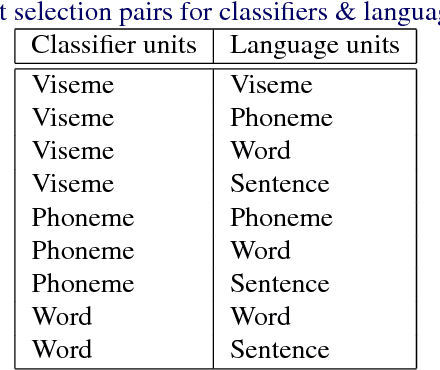

This is an exciting time for machine lipreading. Traditional research stemmed from adapting audio recognition systems, but the computer vision community is now participating as well. Joining two previously disparate areas, each with its own perspective on computer lipreading, creates opportunities for collaboration. It also creates challenges for knowledge sharing, because the literature uses terms and phrases in multiple ways and scores results with a range of methods. We highlight three areas in particular, with the intention of improving communication between those researching lipreading: the effects of interchanging the terms speech reading and lipreading; speaker dependence across train, validation, and test splits; and the use of accuracy, correctness, errors, and varying units (phonemes, visemes, words, and sentences) to measure system performance. We make recommendations as to how we can be more consistent.
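To illustrate why the choice between accuracy and correctness matters, here is a minimal Python sketch. It assumes the HTK-style convention common in speech recognition, where correctness ignores insertion errors and accuracy penalizes them; the abstract does not state which definitions the paper adopts, so this is an assumption. The function names `align_counts` and `score` are hypothetical, and the token sequences can be in any unit (phonemes, visemes, or words).

```python
from typing import Dict, Sequence, Tuple


def align_counts(ref: Sequence[str], hyp: Sequence[str]) -> Tuple[int, int, int]:
    """Count (substitutions, deletions, insertions) along one
    minimum-edit-distance alignment of hyp against ref."""
    rows, cols = len(ref) + 1, len(hyp) + 1
    # dp[i][j] = (cost, subs, dels, ins) for ref[:i] versus hyp[:j]
    dp = [[(0, 0, 0, 0)] * cols for _ in range(rows)]
    for i in range(1, rows):
        dp[i][0] = (i, 0, i, 0)  # every reference token deleted
    for j in range(1, cols):
        dp[0][j] = (j, 0, 0, j)  # every hypothesis token inserted
    for i in range(1, rows):
        for j in range(1, cols):
            c, s, d, n = dp[i - 1][j - 1]
            if ref[i - 1] == hyp[j - 1]:
                diag = (c, s, d, n)          # match, no cost
            else:
                diag = (c + 1, s + 1, d, n)  # substitution
            c, s, d, n = dp[i - 1][j]
            deletion = (c + 1, s, d + 1, n)
            c, s, d, n = dp[i][j - 1]
            insertion = (c + 1, s, d, n + 1)
            # Ties are broken in favour of the diagonal move.
            dp[i][j] = min(diag, deletion, insertion, key=lambda t: t[0])
    _, subs, dels, ins = dp[-1][-1]
    return subs, dels, ins


def score(ref: Sequence[str], hyp: Sequence[str]) -> Dict[str, float]:
    """HTK-style scores (assumed convention, not taken from the paper):
    correctness = (N - D - S) / N, accuracy = (N - D - S - I) / N."""
    n_ref = len(ref)
    if n_ref == 0:
        raise ValueError("reference must contain at least one token")
    subs, dels, ins = align_counts(ref, hyp)
    return {
        "correctness": (n_ref - dels - subs) / n_ref,
        "accuracy": (n_ref - dels - subs - ins) / n_ref,
    }


if __name__ == "__main__":
    reference = "the cat sat on the mat".split()
    hypothesis = "the cat sat on on the hat".split()
    print(score(reference, hypothesis))
```

In this example the hypothesis contains one substitution and one insertion, so correctness is 5/6 while accuracy is 4/6. The same system output therefore yields two different numbers depending on which measure is reported, and the gap changes again if the unit is switched from words to phonemes or visemes.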
