Video Analysis of "YouTube Funnies" to Aid the Study of Human Gait and Falls - Preliminary Results and Proof of Concept


Oct 26, 2016

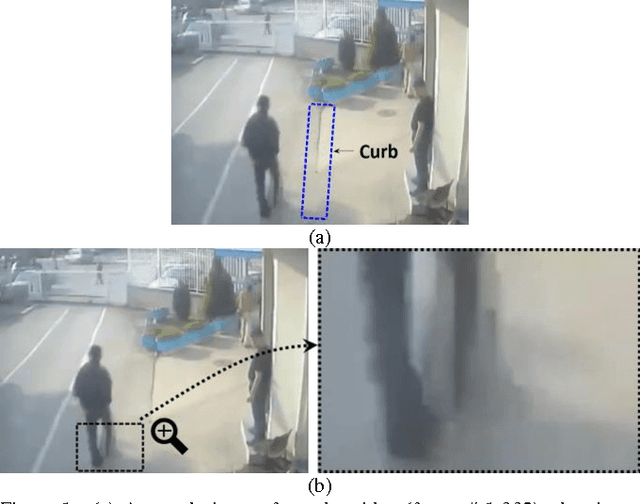

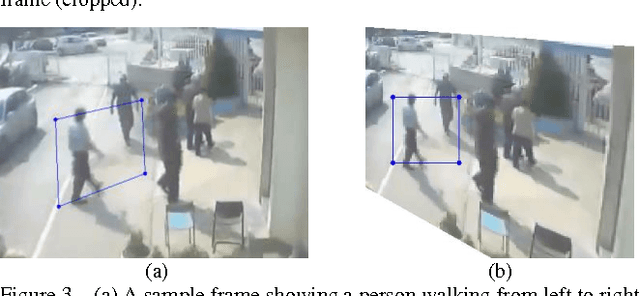

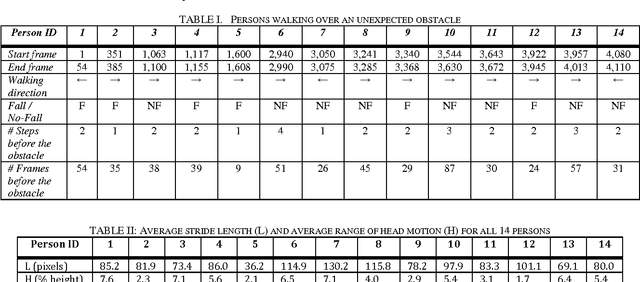

Because falls are funny, YouTube and other video-sharing sites host a large repository of real-life falls. We propose extracting gait and balance information from these videos to better understand some of the factors that contribute to falls. As a proof of concept, we analyze a single video containing multiple (n=14) falls and non-falls in the presence of an unexpected obstacle. The analysis explores: computing spatiotemporal gait parameters in a video captured from an arbitrary viewpoint; the relationship between gait parameters from the last few steps before the obstacle and falling vs. not falling; and the capacity of a multivariate model to predict a fall in the presence of an unexpected obstacle. Homography transformations correct the perspective distortion and allow gait parameters to be tracked consistently as an individual walks in an arbitrary direction in the scene. A synthetic top view allows the average stride length to be computed, and a synthetic side view allows the up-and-down motion of the head to be measured. In leave-one-out cross-validation, we correctly predicted whether a person would fall in 11 of the 14 cases (78.6%), using only the average stride length and the range of vertical head motion during the 1-4 most recent steps before reaching the obstacle.
