Variational inference for neural network matrix factorization and its application to stochastic blockmodeling

May 11, 2019

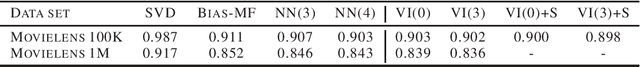

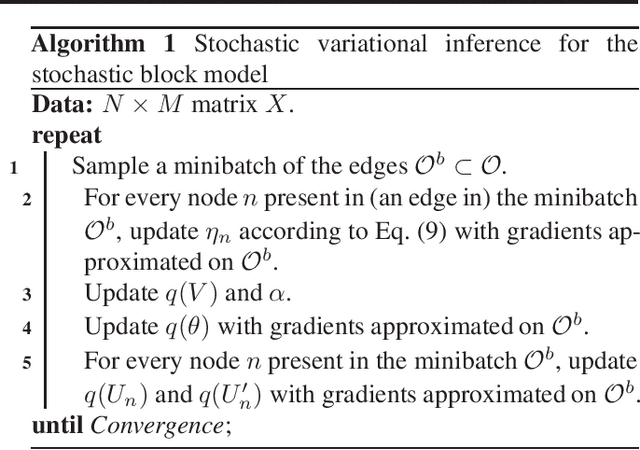

We consider the probabilistic analogue of neural network matrix factorization (Dziugaite & Roy, 2015), which we construct with Bayesian neural networks and fit with variational inference. We find that a linear model fit with variational inference can match the predictive performance of the neural network variants on the MovieLens data sets. We discuss the implications of this result, including the pros and cons of the neural network construction and of the variational approach to inference. A probabilistic approach is nonetheless required in some cases, such as the important class of stochastic blockmodels. We describe a variational inference algorithm for a neural network matrix factorization model with nonparametric block structure and evaluate it on the NIPS co-authorship data set.
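
To make the linear baseline concrete, the following is a minimal sketch of a variational objective for it, not the paper's exact formulation. It assumes Gaussian observation noise, standard normal priors on the latent factors, and a mean-field Gaussian variational family. The symbols $u_i$, $v_j$, $\sigma^2$, $\Omega$ (the set of observed entries), $D$ (the latent dimension), and the variational parameters $\mu$, $s$ are introduced here for illustration only.

$$
x_{ij} \mid u_i, v_j \sim \mathcal{N}\!\left(u_i^\top v_j,\ \sigma^2\right), \qquad u_i \sim \mathcal{N}(0, I_D), \qquad v_j \sim \mathcal{N}(0, I_D)
$$

$$
q(u_i) = \mathcal{N}\!\left(\mu_i,\ \operatorname{diag}(s_i^2)\right), \qquad q(v_j) = \mathcal{N}\!\left(\tilde{\mu}_j,\ \operatorname{diag}(\tilde{s}_j^2)\right)
$$

$$
\mathcal{L} = \sum_{(i,j) \in \Omega} \mathbb{E}_{q}\!\left[\log \mathcal{N}\!\left(x_{ij} \mid u_i^\top v_j,\ \sigma^2\right)\right] - \sum_i \mathrm{KL}\!\left(q(u_i)\,\|\,p(u_i)\right) - \sum_j \mathrm{KL}\!\left(q(v_j)\,\|\,p(v_j)\right)
$$

$$
\mathrm{KL}\!\left(q(u_i)\,\|\,p(u_i)\right) = \frac{1}{2} \sum_{d=1}^{D} \left(s_{id}^2 + \mu_{id}^2 - 1 - \log s_{id}^2\right)
$$

Under the same assumptions, the neural network variant would replace the inner product $u_i^\top v_j$ with the output of a network applied to the latent features, with a prior and a variational distribution placed over the network weights as well. The expectation then generally has no closed form and is typically estimated by Monte Carlo sampling from $q$.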
