Variational Auto-Regressive Gaussian Processes for Continual Learning


Jun 09, 2020

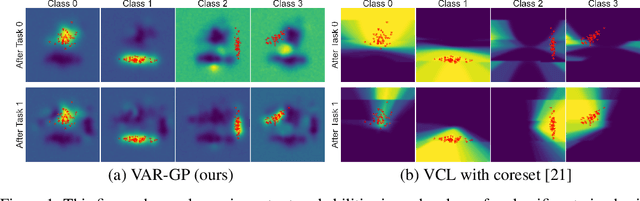

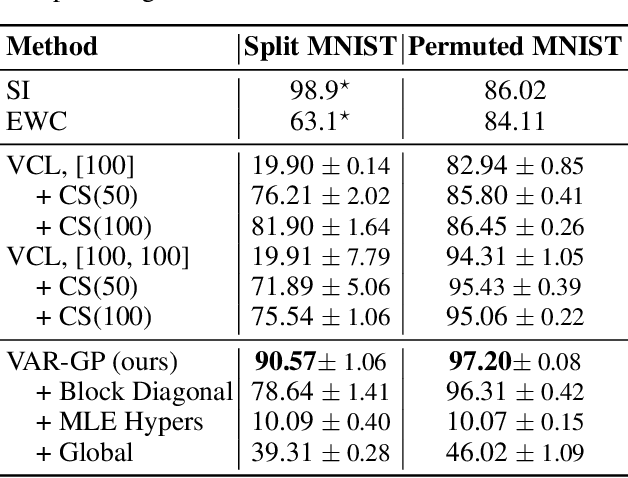

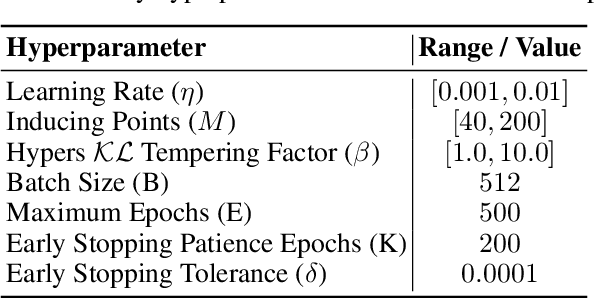

This paper proposes the Variational Auto-Regressive Gaussian Process (VAR-GP), a principled Bayesian updating mechanism for incorporating new data from sequential tasks in continual learning. It relies on a novel auto-regressive characterization of the variational distribution, and inference is made scalable using sparse inducing-point approximations. Experiments on standard continual learning benchmarks demonstrate that VAR-GPs perform well on new tasks without compromising performance on old ones, yielding results competitive with state-of-the-art methods. In addition, we show qualitatively how VAR-GP improves predictive entropy estimates as we train on new tasks. Finally, we conduct a thorough ablation study to verify the effectiveness of our inferential choices.
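For readers unfamiliar with the term, predictive entropy is the Shannon entropy of a model's predictive class distribution: it is low when the model is confident and highest when the prediction is uniform. The snippet below is a minimal, generic sketch of that quantity only. It is not taken from the paper's code, and the function name `predictive_entropy` and the example probability vectors are illustrative assumptions.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy (in nats) of a categorical predictive distribution.

    `probs` is a sequence of non-negative class weights; it is normalized
    here so that it sums to one. Hypothetical helper, not the paper's API.
    """
    total = sum(probs)
    if total <= 0 or any(p < 0 for p in probs):
        raise ValueError("probs must be non-negative with a positive sum")
    normalized = [p / total for p in probs]
    # Terms with p == 0 contribute nothing (the limit of p * log p is 0).
    return -sum(p * math.log(p) for p in normalized if p > 0.0)


# Illustrative inputs (made up): a confident and a maximally uncertain prediction.
confident = predictive_entropy([0.97, 0.01, 0.01, 0.01])
uncertain = predictive_entropy([0.25, 0.25, 0.25, 0.25])
```

With four classes the uniform prediction attains the maximum possible entropy, log 4 nats, so `uncertain` is larger than `confident`.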
