VAE-KRnet and its applications to variational Bayes


Jun 29, 2020

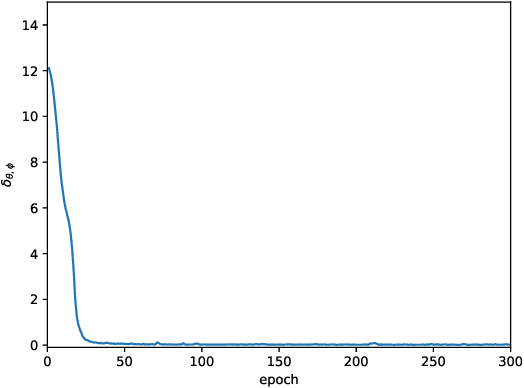

We propose VAE-KRnet, a generative model for density estimation that combines the canonical variational autoencoder (VAE) with KRnet, our recently developed flow-based generative model. The VAE serves as a dimension reduction technique that captures the latent space, and KRnet models the distribution of the latent variables. Using a linear model between the data and the latent variables, we show that VAE-KRnet can be more effective and robust than the canonical VAE. As an application, we use VAE-KRnet in variational Bayes to approximate the posterior. Variational Bayes approaches are usually based on minimizing the Kullback-Leibler (KL) divergence between the model and the posterior, which often underestimates the variance when the model is not sufficiently expressive. For high-dimensional distributions, however, constructing an accurate model is very challenging because extra assumptions are often needed for efficiency; for example, the mean-field approach assumes mutual independence between dimensions. When the number of dimensions is relatively small, KRnet can approximate the posterior effectively in terms of the original random variable. For high-dimensional cases, we use VAE-KRnet to incorporate dimension reduction. To alleviate the underestimation of the variance, we also maximize the mutual information between the latent random variable and the original one when seeking an approximate distribution with respect to the KL divergence. Numerical experiments demonstrate the effectiveness of our model.
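The variance underestimation mentioned above can be illustrated with a standard textbook case that is not taken from the paper: a mean-field (fully factorized) Gaussian fitted to a correlated Gaussian target by minimizing KL(q || p). For a Gaussian target with precision matrix Λ, the optimal factorized variances are 1/Λ_ii, which never exceed the true marginal variances. The sketch below assumes a hypothetical 2D target with correlation `rho`; the function names are illustrative only and have nothing to do with the VAE-KRnet code.

```python
def inverse_2x2(m):
    """Invert a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def mean_field_variances(cov):
    """Variances of the factorized Gaussian q minimizing KL(q || p)
    for a zero-mean Gaussian target p with covariance `cov`.
    The optimum is 1 / Lambda_ii, where Lambda is the precision matrix."""
    precision = inverse_2x2(cov)
    return [1.0 / precision[i][i] for i in range(2)]


# Hypothetical target: unit marginal variances, strong correlation.
rho = 0.9
cov = [[1.0, rho], [rho, 1.0]]

mf_var = mean_field_variances(cov)
true_var = [cov[0][0], cov[1][1]]

print("true marginal variances:", true_var)
print("mean-field variances:   ", mf_var)
```

With `rho = 0.9` the mean-field variances equal 1 - rho^2, roughly 0.19, against true marginal variances of 1. The gap widens as the correlation grows, which is the kind of underestimation a more expressive model is meant to reduce.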
