Vaccinating Federated Learning for Robust Modulation Classification in Distributed Wireless Networks


Oct 16, 2024

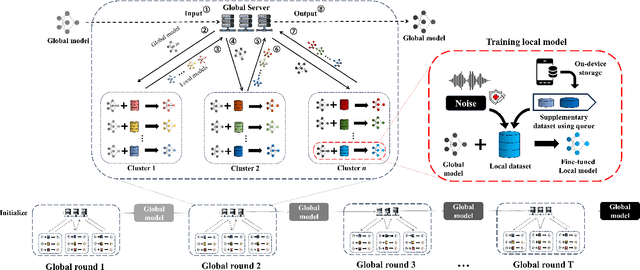

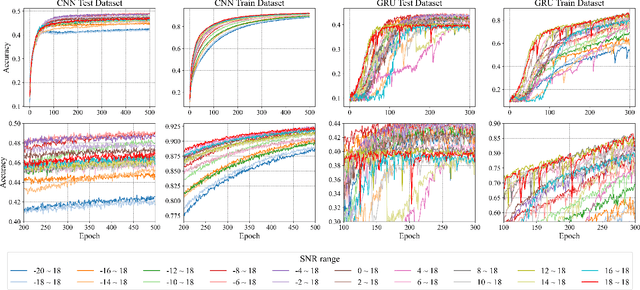

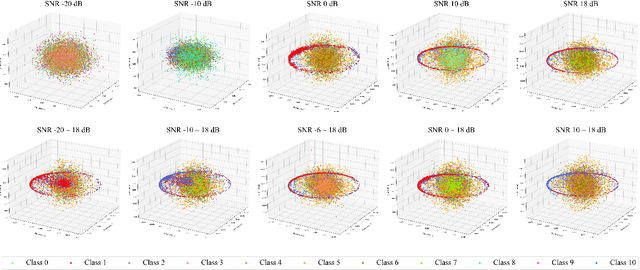

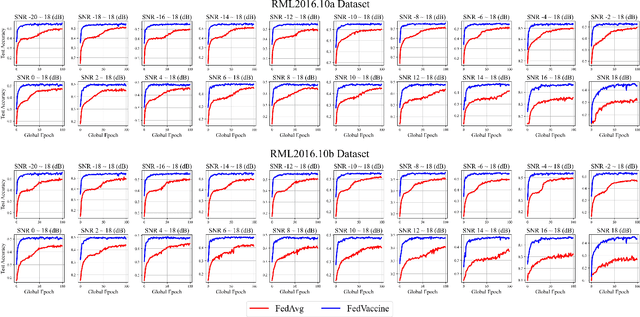

Automatic modulation classification (AMC) plays a vital role in ensuring efficient and reliable communication services within distributed wireless networks. Interest in deep neural network (DNN)-based AMC models has surged recently, with Federated Learning (FL) emerging as a promising framework. Despite these advances, the various types of noise present in the signal pose significant challenges when optimizing models to capture salient features. Furthermore, existing FL-based AMC models commonly rely on linear aggregation strategies, which struggle to integrate locally fine-tuned parameters in practical non-IID (non-independent and identically distributed) environments and thereby hinder learning convergence. To address these challenges, we propose FedVaccine, a novel FL model that improves generalizability across signals with varying noise levels by deliberately introducing a balanced level of noise. This is accomplished through our proposed harmonic noise resilience approach, which identifies an optimal noise tolerance for DNN models, thereby regulating the training process and mitigating overfitting. Additionally, FedVaccine overcomes the limitations of linear aggregation in existing FL-based AMC models by employing a split-learning strategy that uses a structural clustering topology and a local queue data structure, enabling adaptive and cumulative updates to local models. Our experiments on IID and non-IID datasets, together with ablation studies, confirm FedVaccine's robust performance and its superiority over existing FL-based AMC approaches across different noise levels. These findings highlight FedVaccine's potential to enhance the reliability and performance of AMC systems in practical wireless network environments.
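For readers unfamiliar with the two ingredients the abstract contrasts, the following is a minimal illustrative sketch, not FedVaccine itself. It assumes complex baseband (IQ) samples, additive white Gaussian noise as the injected noise, and a FedAvg-style weighted mean as the "linear aggregation" baseline. The function names `add_awgn` and `linear_aggregate` are hypothetical and do not come from the paper; the harmonic noise resilience approach and the split-learning aggregation are not reproduced here.

```python
import numpy as np


def add_awgn(signal, snr_db, rng):
    """Add complex white Gaussian noise so the result has roughly the given SNR (dB).

    Assumption: `signal` is a complex array of IQ samples.
    """
    signal_power = np.mean(np.abs(signal) ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    # Split the noise power evenly between the real and imaginary parts.
    noise = np.sqrt(noise_power / 2) * (
        rng.standard_normal(signal.shape) + 1j * rng.standard_normal(signal.shape)
    )
    return signal + noise


def linear_aggregate(client_weights, client_sizes):
    """FedAvg-style linear aggregation: mean of client parameters weighted by data size.

    Assumption: each client's parameters are flattened into one NumPy vector.
    """
    sizes = np.asarray(client_sizes, dtype=float)
    coeffs = sizes / sizes.sum()
    return sum(c * w for c, w in zip(coeffs, client_weights))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # A unit-power QPSK-like signal, used only as toy input.
    symbols = np.exp(1j * (np.pi / 4 + np.pi / 2 * rng.integers(0, 4, size=1024)))
    noisy = add_awgn(symbols, snr_db=10.0, rng=rng)
    print(noisy[:3])

    # Two toy clients holding 1 and 3 samples respectively.
    global_weights = linear_aggregate(
        [np.array([1.0, 2.0]), np.array([3.0, 4.0])], [1, 3]
    )
    print(global_weights)
```

Because the weighted mean treats every client update as a point to be averaged, clients with very different local data distributions can pull the global parameters in conflicting directions, which is the difficulty in non-IID settings that the abstract attributes to linear aggregation.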
