Utility Fairness in Contextual Dynamic Pricing with Demand Learning

Nov 28, 2023

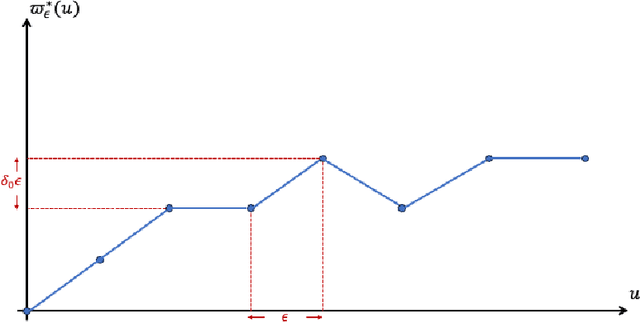

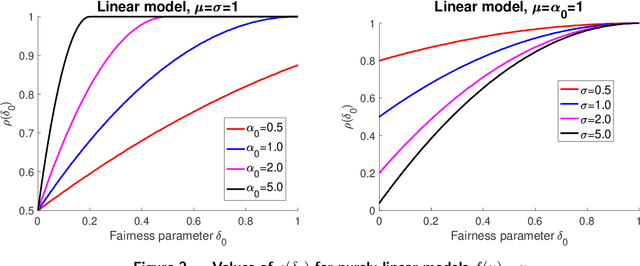

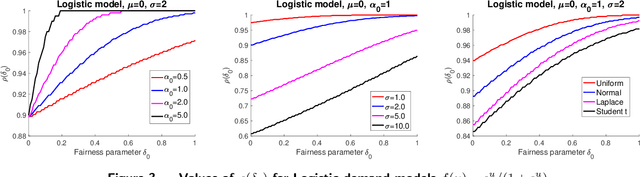

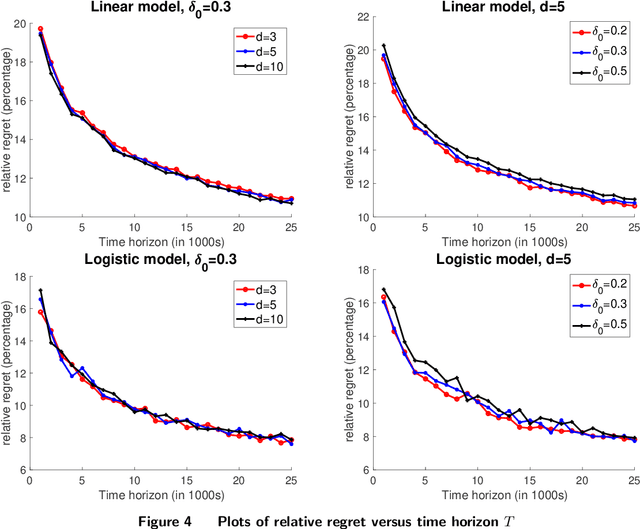

This paper introduces a novel contextual bandit algorithm for personalized pricing under utility fairness constraints when demand is uncertain, and it achieves an optimal regret upper bound. Our approach, which combines dynamic pricing with demand learning, addresses the critical challenge of fairness in pricing strategies. We first study the static full-information setting, where we formulate the optimal pricing policy as a constrained optimization problem and propose an algorithm that efficiently computes an approximation of the ideal policy. We also use mathematical analysis and computational studies to characterize the structure of optimal contextual pricing policies subject to fairness constraints, deriving simplified policies that lay the foundation for more in-depth research and extensions. We then extend our study to dynamic pricing problems with demand learning and establish a non-standard regret lower bound that highlights the complexity added by fairness constraints. Our research offers a comprehensive analysis of the cost of fairness and its impact on the balance between utility and revenue maximization. This work represents a step towards integrating ethical considerations into algorithmic efficiency in data-driven dynamic pricing.
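To make the static full-information setting concrete, the following is a minimal toy sketch, not the paper's algorithm. It assumes two customer groups with known linear demand, measures utility as consumer surplus, and treats fairness as a bound `delta` on the surplus gap between groups. The demand model, the fairness definition, the parameter values, and the helper names (`demand`, `surplus`, `best_prices`) are all illustrative assumptions; a brute-force grid search stands in for the approximation algorithm.

```python
from itertools import product

# Toy sketch under stated assumptions; NOT the method from the paper.
# Assumption: each group has known linear demand a - b * p.

def demand(p, a, b):
    """Linear demand a - b*p, clipped at zero (assumed model)."""
    return max(a - b * p, 0.0)

def revenue(p, a, b):
    """Expected revenue from charging price p to a group."""
    return p * demand(p, a, b)

def surplus(p, a, b):
    """Consumer surplus under linear demand: the area under the
    demand curve above price p, used here as the utility measure."""
    return demand(p, a, b) ** 2 / (2.0 * b)

def best_prices(groups, delta, grid):
    """Grid search for the revenue-maximizing price pair whose
    surplus gap between the two groups is at most delta."""
    (a1, b1), (a2, b2) = groups
    best = None
    for p1, p2 in product(grid, repeat=2):
        gap = abs(surplus(p1, a1, b1) - surplus(p2, a2, b2))
        if gap > delta:
            continue  # violates the assumed fairness constraint
        total = revenue(p1, a1, b1) + revenue(p2, a2, b2)
        if best is None or total > best[0]:
            best = (total, p1, p2, gap)
    return best

# Hypothetical demand parameters (a, b) for two groups.
groups = [(1.0, 0.5), (1.0, 1.0)]
grid = [i / 100 for i in range(0, 201)]

unconstrained = best_prices(groups, float("inf"), grid)
fair = best_prices(groups, 0.02, grid)
cost_of_fairness = unconstrained[0] - fair[0]

print("unconstrained (revenue, p1, p2, gap):", unconstrained)
print("fair          (revenue, p1, p2, gap):", fair)
print("revenue given up for fairness:", cost_of_fairness)
```

In this sketch, the difference between the unconstrained and the constrained revenue is one simple way to read the "cost of fairness" mentioned in the abstract. The dynamic setting additionally requires learning the demand parameters from observed purchases, which this example does not attempt.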
