Using Counterfactual Reasoning and Reinforcement Learning for Decision-Making in Autonomous Driving


Mar 20, 2020

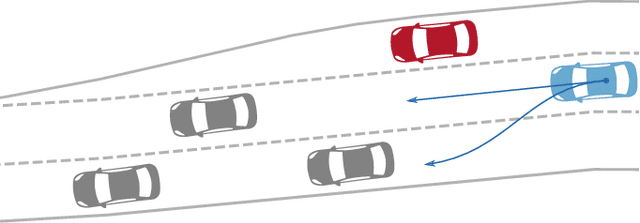

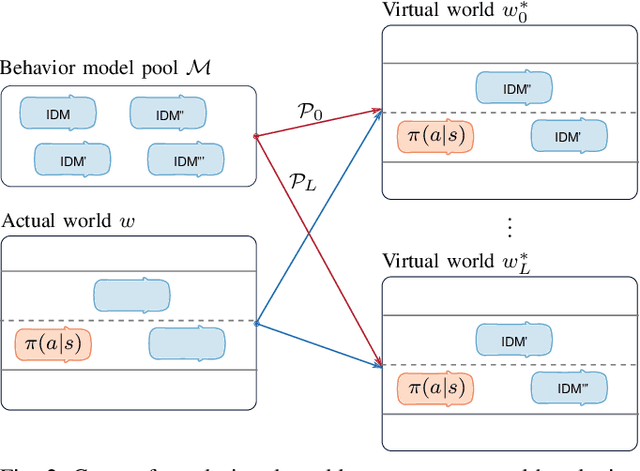

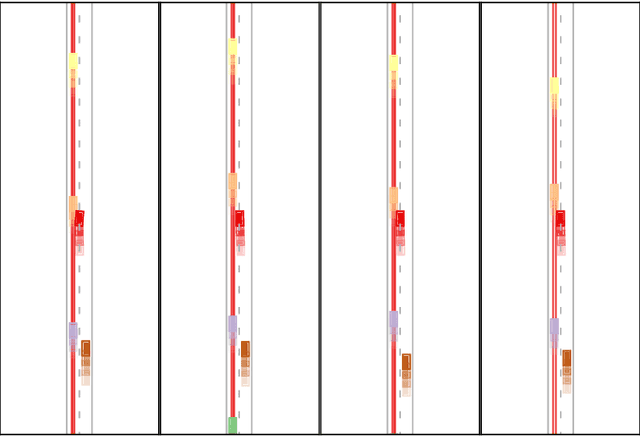

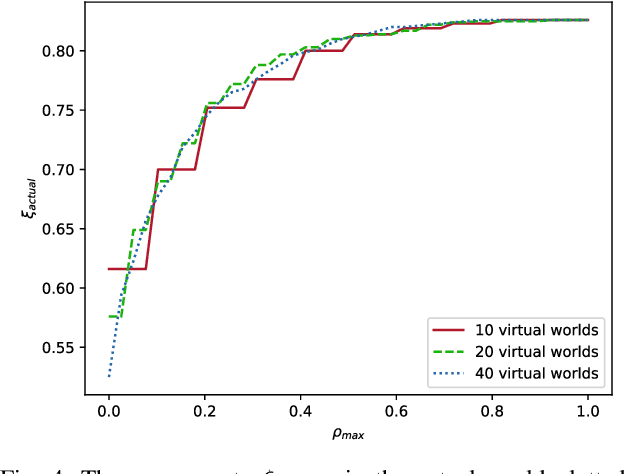

In decision-making for autonomous vehicles, we need to predict other vehicles' behaviors or learn their behavior implicitly using machine learning. However, the predictions and learned models often contain errors or may be wrong altogether, which can lead to dangerous situations. Therefore, decision-making algorithms should employ counterfactual reasoning, asking questions such as: what would happen if the other agents behaved in a certain way? The approach we present in this paper is two-fold. First, during training, we randomly select behavior models, such as more passive or more aggressive ones, from a behavior model pool and assign them to the other vehicles in the scenario. Second, during application, we derive several virtual worlds from the actual world, all sharing the same initial state. In each of these worlds, we again assign behavior models from the pool to the other vehicles. We then evolve these virtual worlds for a defined time horizon. This enables counterfactual reasoning by asking what would happen if the actual world evolved as the virtual world did. In uncertain environments, this makes it possible to generate more probable risk estimates and, thus, to enable safer decision-making. We conduct studies on a lane-change scenario that show the advantages of counterfactual reasoning using learned policies and virtual worlds to estimate their risk and performance.
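The virtual-world step described above can be illustrated with a minimal Python sketch. It is not the paper's implementation: the one-dimensional dynamics, the two behavior models `passive` and `aggressive`, the fixed ego policy `keep_speed`, the 5 m minimum gap, and all function and parameter names are assumptions made purely for illustration. A learned policy would take the place of `keep_speed`.

```python
import random
from dataclasses import dataclass, replace


@dataclass
class Vehicle:
    position: float  # longitudinal position in metres
    speed: float     # speed in metres per second


# Hypothetical behavior models: map (vehicle, gap to ego) to an acceleration.
def passive(vehicle, gap):
    # Brakes gently when the ego vehicle is close in front.
    return -1.0 if gap < 20.0 else 0.0


def aggressive(vehicle, gap):
    # Accelerates regardless of the ego vehicle.
    return 1.0


def keep_speed(vehicle, gap):
    # Stand-in for a learned ego policy (assumption): constant speed.
    return 0.0


def rollout(ego, other, ego_policy, other_behavior,
            horizon=5.0, dt=0.2, min_gap=5.0):
    """Evolve one virtual world; return True if the gap falls below min_gap."""
    # Copy the state so the actual world is left untouched.
    ego, other = replace(ego), replace(other)
    for _ in range(int(round(horizon / dt))):
        gap = ego.position - other.position  # ego has merged in front
        if gap < min_gap:
            return True
        ego.speed = max(0.0, ego.speed + ego_policy(ego, gap) * dt)
        other.speed = max(0.0, other.speed + other_behavior(other, gap) * dt)
        ego.position += ego.speed * dt
        other.position += other.speed * dt
    return False


def counterfactual_collision_rate(ego, other, ego_policy, behavior_pool,
                                  num_worlds=100, seed=0):
    """Fraction of virtual worlds, all sharing one initial state, that collide."""
    rng = random.Random(seed)
    collisions = 0
    for _ in range(num_worlds):
        # Assign a randomly drawn behavior model to the other vehicle.
        behavior = rng.choice(behavior_pool)
        collisions += rollout(ego, other, ego_policy, behavior)
    return collisions / num_worlds


if __name__ == "__main__":
    ego = Vehicle(position=10.0, speed=10.0)
    other = Vehicle(position=0.0, speed=10.0)
    rate = counterfactual_collision_rate(
        ego, other, keep_speed, [passive, aggressive])
    print(f"estimated collision rate: {rate:.2f}")
```

The same random draw from the pool could be used during training to assign behavior models to the other vehicles in each episode. In this toy setup, the estimated collision rate depends only on how often the aggressive model is drawn; a decision layer could compare it against a risk threshold before committing to the lane change.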
