Using Adapters to Overcome Catastrophic Forgetting in End-to-End Automatic Speech Recognition


Mar 30, 2022

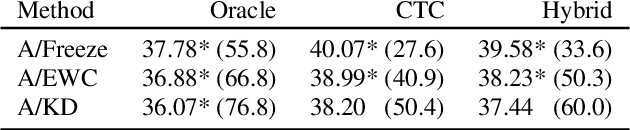

Learning a set of tasks in sequence remains a challenge for artificial neural networks, which tend to suffer from Catastrophic Forgetting (CF) in such scenarios. The same applies to End-to-End (E2E) Automatic Speech Recognition (ASR) models, even for monolingual tasks. In this paper, we aim to overcome CF for E2E ASR by inserting adapters into our model: small modules with few parameters that allow a general model to be fine-tuned to a specific task. We make these adapters task-specific while either freezing or regularizing the parameters shared by all tasks. This encourages the model to fully exploit the adapter parameters while keeping the shared parameters in a state that works well for all tasks. Our best method closes the gap between the worst case (naive fine-tuning) and the best case (joint training on all tasks) by more than 75% after a sequence of four monolingual tasks.
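To make the idea of task-specific adapters on top of shared parameters concrete, here is a minimal sketch in plain Python. It is not the paper's implementation. The abstract does not specify the adapter architecture, so the sketch assumes a common bottleneck design with a residual connection and a zero-initialized up-projection. The names `Adapter`, `SharedLayerWithAdapters`, `add_task`, and `trainable_parameters` are hypothetical and chosen only for illustration.

```python
import random


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class Adapter:
    """Hypothetical bottleneck adapter: x + W_up * relu(W_down * x)."""

    def __init__(self, dim, bottleneck, seed=0):
        rng = random.Random(seed)
        # Down-projection to a small bottleneck keeps the parameter count low.
        self.down = [[rng.gauss(0.0, 0.1) for _ in range(dim)]
                     for _ in range(bottleneck)]
        # Assumption: the up-projection starts at zero, so a freshly added
        # adapter is the identity and does not disturb the shared model.
        self.up = [[0.0] * bottleneck for _ in range(dim)]

    def __call__(self, x):
        h = [max(0.0, v) for v in matvec(self.down, x)]  # ReLU
        return [xi + ui for xi, ui in zip(x, matvec(self.up, h))]


class SharedLayerWithAdapters:
    """One shared linear layer followed by a per-task adapter."""

    def __init__(self, dim, bottleneck):
        rng = random.Random(42)
        # Shared weights: used by every task; frozen in this sketch.
        self.shared = [[rng.gauss(0.0, 0.1) for _ in range(dim)]
                       for _ in range(dim)]
        self.dim, self.bottleneck = dim, bottleneck
        self.adapters = {}  # task name -> Adapter

    def add_task(self, task):
        self.adapters[task] = Adapter(self.dim, self.bottleneck,
                                      seed=len(self.adapters))

    def forward(self, x, task):
        # Route the shared representation through the adapter of this task.
        return self.adapters[task](matvec(self.shared, x))

    def trainable_parameters(self, task):
        # With frozen shared weights, only this task's adapter is updated.
        adapter = self.adapters[task]
        return [adapter.down, adapter.up]


layer = SharedLayerWithAdapters(dim=4, bottleneck=2)
layer.add_task("task_a")
layer.add_task("task_b")
x = [1.0, -2.0, 0.5, 3.0]
out_a_before = layer.forward(x, "task_a")

# Simulate training on task_b by changing only its adapter weights.
layer.adapters["task_b"].up = [[0.5, -0.5] for _ in range(4)]
out_a_after = layer.forward(x, "task_a")
```

Because updates for `task_b` touch only its own adapter, the output for `task_a` is unchanged in this sketch, which is the mechanism by which frozen shared parameters avoid forgetting. The regularized variant mentioned in the abstract would instead allow the shared weights to change under a penalty, and is not shown here.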
