Using a Bag of Words for Automatic Medical Image Annotation with a Latent Semantic

Jun 04, 2013

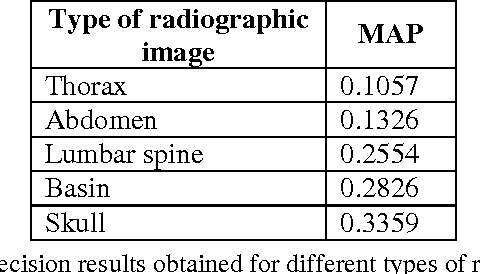

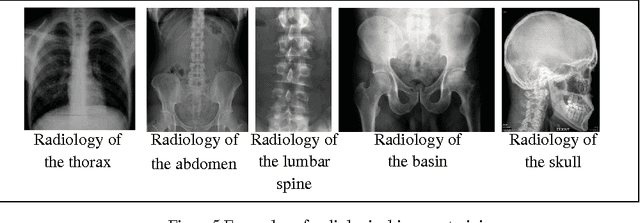

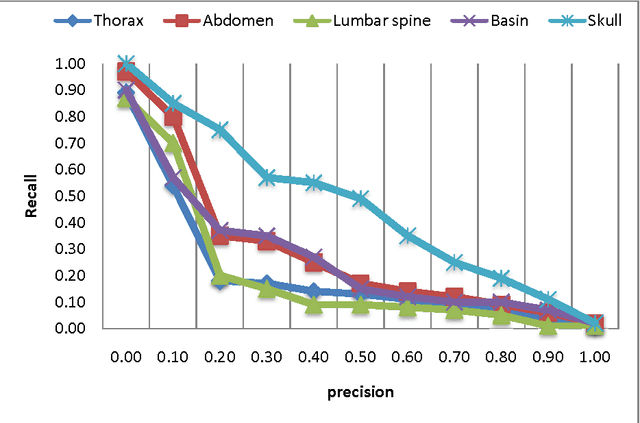

In this paper we present a new approach to the automatic annotation of medical images. It uses a "bag-of-words" representation of the visual content of each image, combined with tf.idf-based text descriptors, and reduces the combined representation with a latent semantic model to capture the co-occurrence between textual terms and visual terms. A medical report consists of a text describing a medical image. In a first phase, we index the text and extract all relevant terms using a thesaurus of MeSH medical concepts. In a second phase, we index the medical image by extracting regions of interest that are invariant to changes in scale, illumination, and tilt. To annotate a new medical image, we use the "bag-of-words" approach to build its feature vector, then use the vector space model to retrieve similar medical images from the training database. The relevance of a database image to the query image is computed with the cosine function. We conclude with an experiment on five types of radiological imaging that evaluates the performance of our medical annotation system. The results show that our approach performs better with more images from skull radiology.
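The retrieval step described above can be illustrated with a minimal sketch. It assumes that each training item is a single bag mixing textual terms and visual-word identifiers, and that a smoothed tf.idf weighting is used. The exact weighting, the latent semantic reduction step (omitted here), the function names, and the toy data are all illustrative assumptions, not details taken from the paper.

```python
import math
from collections import Counter

def weigh(tokens, idf):
    """Turn a bag of tokens into a sparse tf.idf vector (dict: term -> weight)."""
    tf = Counter(tokens)
    return {t: c * idf[t] for t, c in tf.items() if t in idf}

def tfidf_vectors(docs):
    """Compute idf over the training bags and return (vectors, idf)."""
    n = len(docs)
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))
    # Assumed variant: log(n / df) + 1, so terms shared by all items keep a nonzero weight.
    idf = {t: math.log(n / df[t]) + 1.0 for t in df}
    return [weigh(tokens, idf) for tokens in docs], idf

def cosine(a, b):
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def rank(query_tokens, docs):
    """Rank training items by cosine relevance to the query, best first."""
    vectors, idf = tfidf_vectors(docs)
    q = weigh(query_tokens, idf)
    return sorted(((cosine(q, v), i) for i, v in enumerate(vectors)), reverse=True)

# Hypothetical training items: report terms plus visual-word ids ("vwN").
training = [
    ["skull", "fracture", "vw3", "vw3", "vw7"],
    ["thorax", "lung", "vw1", "vw5", "vw5"],
    ["hand", "phalanx", "vw2", "vw7", "vw9"],
]
# A new, unannotated image contributes visual words only.
query = ["vw3", "vw7", "vw3"]

ranking = rank(query, training)
best = ranking[0][1]
# Candidate annotation: the textual terms of the most similar training item.
annotation = [t for t in training[best] if not t.startswith("vw")]
print(ranking, annotation)
```

In this sketch the annotation is simply copied from the top-ranked training item; a fuller pipeline would first project the vectors into the reduced latent space before computing the cosine.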
