Unveiling Objects with SOLA: An Annotation-Free Image Search on the Object Level for Automotive Data Sets

Paper and Code

Dec 07, 2023

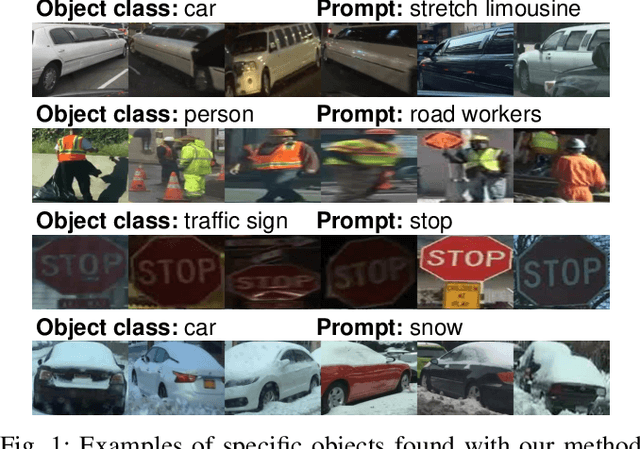

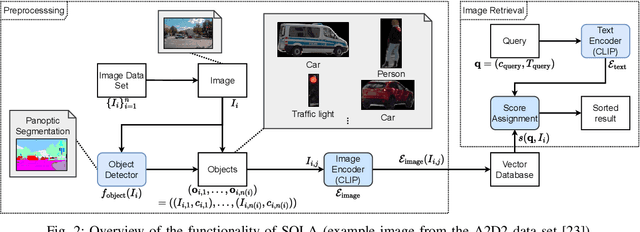

Huge image data sets are the fundament for the development of the perception of automated driving systems. A large number of images is necessary to train robust neural networks that can cope with diverse situations. A sufficiently large data set contains challenging situations and objects. For testing the resulting functions, it is necessary that these situations and objects can be found and extracted from the data set. While it is relatively easy to record a large amount of unlabeled data, it is far more difficult to find demanding situations and objects. However, during the development of perception systems, it must be possible to access challenging data without having to perform lengthy and time-consuming annotations. A developer must therefore be able to search dynamically for specific situations and objects in a data set. Thus, we designed a method which is based on state-of-the-art neural networks to search for objects with certain properties within an image. For the ease of use, the query of this search is described using natural language. To determine the time savings and performance gains, we evaluated our method qualitatively and quantitatively on automotive data sets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge