Unsupervised Human Action Recognition with Skeletal Graph Laplacian and Self-Supervised Viewpoints Invariance

Paper and Code

Apr 21, 2022

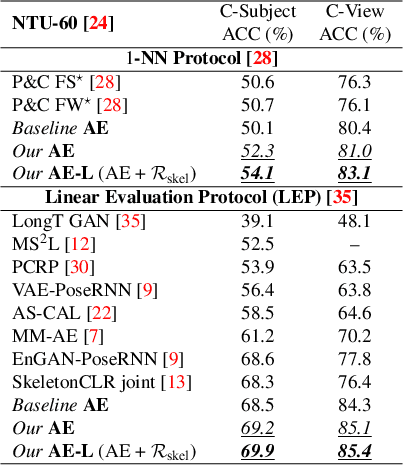

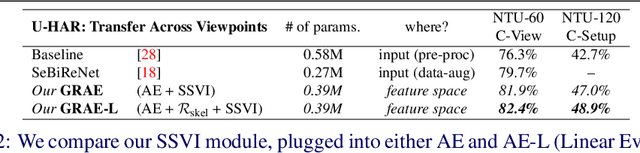

This paper presents a novel end-to-end method for the problem of skeleton-based unsupervised human action recognition. We propose a new architecture with a convolutional autoencoder that uses graph Laplacian regularization to model the skeletal geometry across the temporal dynamics of actions. Our approach is robust towards viewpoint variations by including a self-supervised gradient reverse layer that ensures generalization across camera views. The proposed method is validated on NTU-60 and NTU-120 large-scale datasets in which it outperforms all prior unsupervised skeleton-based approaches on the cross-subject, cross-view, and cross-setup protocols. Although unsupervised, our learnable representation allows our method even to surpass a few supervised skeleton-based action recognition methods. The code is available in: www.github.com/IIT-PAVIS/UHAR_Skeletal_Laplacian

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge