Unsupervised Domain Adaptive Knowledge Distillation for Semantic Segmentation


Nov 03, 2020

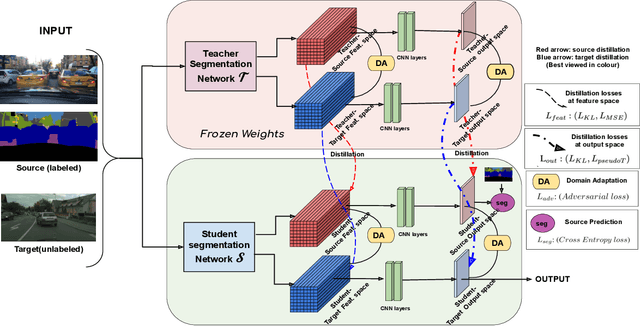

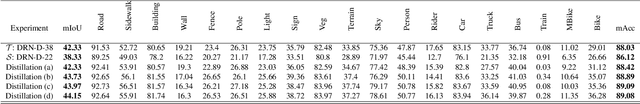

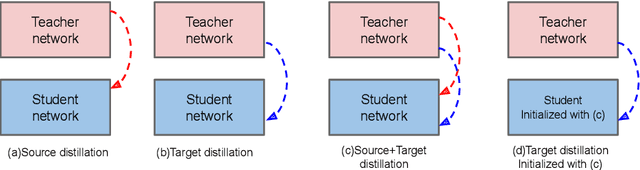

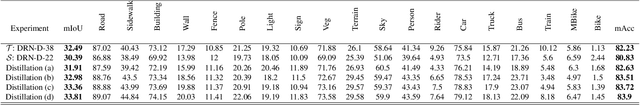

Practical autonomous driving systems face two crucial challenges: memory constraints and domain gap issues. We present an approach to learn domain adaptive knowledge in models with limited memory, so that a single model can address both challenges. We study this in the context of unsupervised domain-adaptive semantic segmentation and propose a multi-level distillation strategy to distil knowledge at different levels. Further, we introduce a cross entropy loss that leverages pseudo labels from the teacher. These pseudo teacher labels play two roles: (i) distilling knowledge from the teacher network to the student network, and (ii) serving as a proxy for the ground truth on target domain images, for which no labels are available. We introduce four paradigms for distilling domain adaptive knowledge and carry out extensive experiments and ablation studies on real-to-real and synthetic-to-real scenarios. Our experiments demonstrate the effectiveness of the proposed method.
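To make the pseudo-label idea concrete, here is a minimal sketch of a cross entropy loss computed against teacher pseudo labels. It is an illustration under stated assumptions, not the paper's implementation: the function name `pseudo_label_cross_entropy`, the use of the teacher's argmax as a hard label, the plain list-of-logits input format, and the unweighted mean over pixels are all hypothetical choices made for this example.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one pixel's class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pseudo_label_cross_entropy(teacher_logits, student_logits):
    """Mean cross entropy of the student against teacher pseudo labels.

    Both arguments are lists of per-pixel class-logit lists (an assumed,
    simplified layout). The pseudo label for a pixel is taken to be the
    teacher's argmax class, which is an assumption of this sketch.
    """
    losses = []
    for t, s in zip(teacher_logits, student_logits):
        pseudo_label = max(range(len(t)), key=lambda c: t[c])
        student_probs = softmax(s)
        losses.append(-math.log(student_probs[pseudo_label]))
    return sum(losses) / len(losses)


# Two target-domain pixels, three classes; no ground truth is used anywhere.
teacher = [[2.0, 0.1, -1.0], [0.0, 3.0, 0.5]]
student_agree = [[1.5, 0.0, -0.5], [0.2, 2.0, 0.1]]
student_disagree = [[-0.5, 0.0, 1.5], [2.0, 0.2, 0.1]]

loss_agree = pseudo_label_cross_entropy(teacher, student_agree)
loss_disagree = pseudo_label_cross_entropy(teacher, student_disagree)
```

In this sketch the loss is lower when the student's predictions match the teacher's pseudo labels, which is how the same term can both transfer the teacher's knowledge and stand in for missing target-domain labels.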
