Universal Policies to Learn Them All

Aug 24, 2019

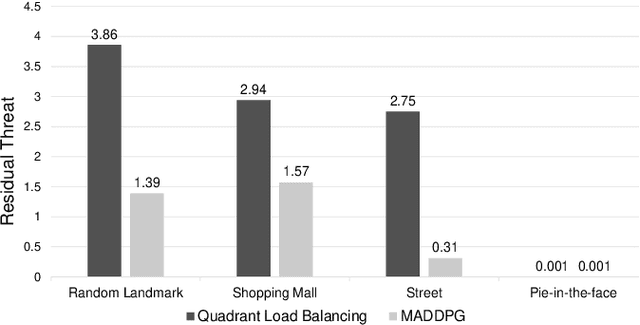

We explore a cooperative multi-agent reinforcement learning setting in which a team of agents attempts to solve a single cooperative task across multiple scenarios. We propose a novel multi-agent reinforcement learning algorithm, inspired by universal value function approximators, that generalizes not only over the state space but also over a set of different scenarios. To support this claim, we introduce a challenging 2D multi-agent urban security environment in which the learning agents try to protect a person from nearby bystanders in a variety of scenarios. Our study shows that state-of-the-art multi-agent reinforcement learning algorithms fail to generalize a single task over multiple scenarios, while our proposed solution performs as well as scenario-dependent policies.
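The abstract does not describe the architecture, so the following is only a minimal sketch of the general idea behind universal value function approximators: the value function takes a scenario descriptor as an extra input alongside the state, so one set of parameters can serve several scenarios. The class name `ScenarioConditionedQ`, the one-hot scenario encoding, the linear model, and all dimensions are assumptions made for illustration, not the authors' method.

```python
import random


def one_hot(index, size):
    """Return a one-hot list of length `size` with a 1.0 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]


class ScenarioConditionedQ:
    """Hypothetical linear Q(s, g, a) over the input [state ; scenario one-hot].

    A single set of weights is shared by every scenario; the scenario
    identifier g only changes the input, not the parameters.
    """

    def __init__(self, state_dim, num_scenarios, num_actions, seed=0):
        rng = random.Random(seed)
        self.num_scenarios = num_scenarios
        in_dim = state_dim + num_scenarios
        # One weight vector per action, randomly initialised (untrained).
        self.weights = [
            [rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
            for _ in range(num_actions)
        ]

    def features(self, state, scenario_id):
        # Concatenate the state with the scenario descriptor.
        return list(state) + one_hot(scenario_id, self.num_scenarios)

    def q_values(self, state, scenario_id):
        x = self.features(state, scenario_id)
        return [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in self.weights]

    def greedy_action(self, state, scenario_id):
        q = self.q_values(state, scenario_id)
        return max(range(len(q)), key=q.__getitem__)


q = ScenarioConditionedQ(state_dim=4, num_scenarios=3, num_actions=5)
state = [0.2, -0.1, 0.5, 0.0]
for scenario_id in range(3):
    # Same state, different scenario input: the chosen action may differ.
    print(scenario_id, q.greedy_action(state, scenario_id))
```

A scenario-dependent baseline would instead keep a separate set of weights per scenario; conditioning on the scenario is what lets a single model be trained across all of them.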
