Universal Lipschitz Approximation in Bounded Depth Neural Networks


Apr 09, 2019

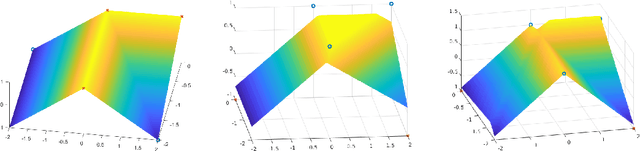

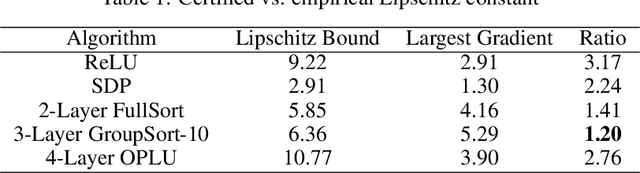

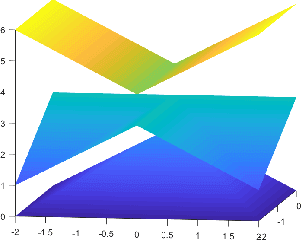

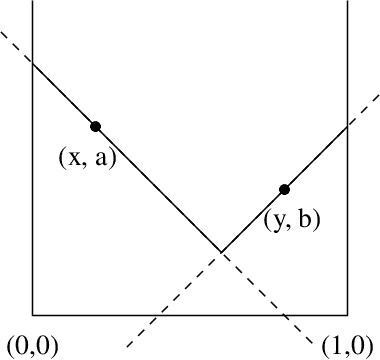

Adversarial attacks against machine learning models are a significant obstacle to our increasing reliance on these models. As a result, provably robust (certified) machine learning models are a major topic of interest. Lipschitz continuous models present a promising approach to solving this problem. By leveraging the expressive power of a variant of neural networks which maintain low Lipschitz constants, we prove that three-layer neural networks using the FullSort activation function are Universal Lipschitz function Approximators (ULAs). This both explains experimental results and paves the way for the creation of better certified models going forward. We conclude by presenting experimental results that suggest that ULAs are not just a novelty, but a competitive approach to providing certified classifiers, and we use these results to motivate several potential topics of further research.
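To make the idea of a FullSort network with a low Lipschitz constant concrete, the following is a minimal sketch and not the authors' implementation. It assumes that FullSort simply sorts the pre-activation vector, that Lipschitz continuity is measured in the L-infinity norm, and that each weight matrix is rescaled so its maximum absolute row sum is at most 1. The helper names (`full_sort`, `normalize_rows`, `make_network`, `forward`, `linf`), the layer sizes, and the random untrained weights are all hypothetical choices made for illustration.

```python
import random

def full_sort(v):
    """FullSort activation (assumed form): return the entries of v in ascending order."""
    return sorted(v)

def normalize_rows(w):
    """Rescale w so its L-infinity operator norm (max absolute row sum) is at most 1."""
    norm = max(sum(abs(a) for a in row) for row in w)
    scale = max(norm, 1.0)  # only shrink, never enlarge
    return [[a / scale for a in row] for row in w]

def linear(w, b, v):
    """Affine map w @ v + b on plain Python lists."""
    return [sum(a * x for a, x in zip(row, v)) + bi for row, bi in zip(w, b)]

def make_network(sizes, seed=0):
    """Build random, norm-constrained affine layers (illustrative, untrained)."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        w = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        b = [rng.uniform(-1, 1) for _ in range(n_out)]
        layers.append((normalize_rows(w), b))
    return layers

def forward(layers, v):
    """Apply each affine layer, with FullSort between layers but not after the last."""
    for i, (w, b) in enumerate(layers):
        v = linear(w, b, v)
        if i < len(layers) - 1:
            v = full_sort(v)
    return v

def linf(u, v):
    """L-infinity distance between two equal-length vectors."""
    return max(abs(a - b) for a, b in zip(u, v))

if __name__ == "__main__":
    net = make_network([4, 8, 8, 1], seed=1)  # three affine layers
    x = [0.3, -1.2, 0.7, 0.0]
    y = [0.1, -1.0, 0.9, 0.2]
    print("input distance :", linf(x, y))
    print("output distance:", linf(forward(net, x), forward(net, y)))
```

Sorting only permutes its input and is 1-Lipschitz in the L-infinity norm, and each rescaled affine map is 1-Lipschitz in that norm as well, so under these assumptions the composed network cannot amplify an L-infinity input perturbation. That bound is what makes a certified robustness guarantee possible for such a model.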
