Universal Adversarial Attack on Attention and the Resulting Dataset DAmageNet


Jan 16, 2020

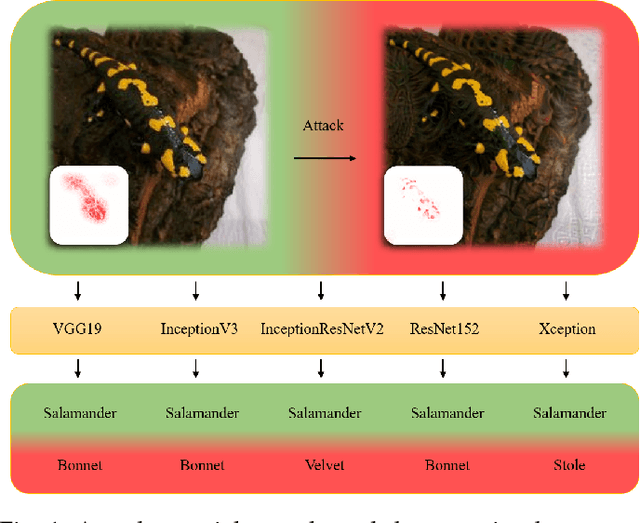

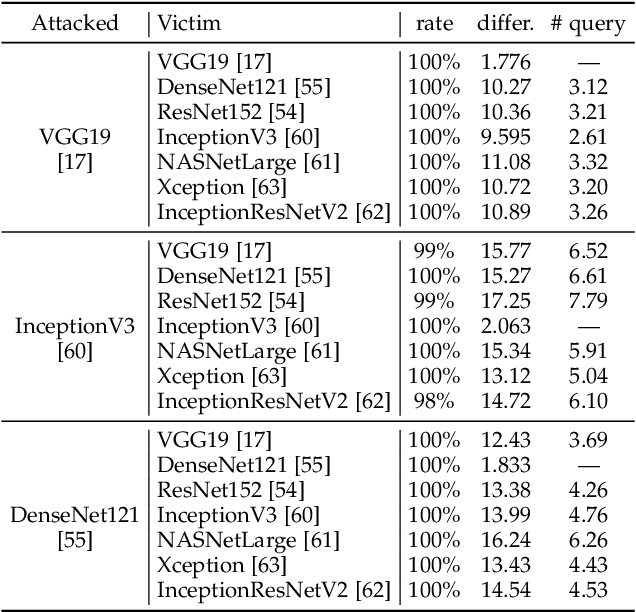

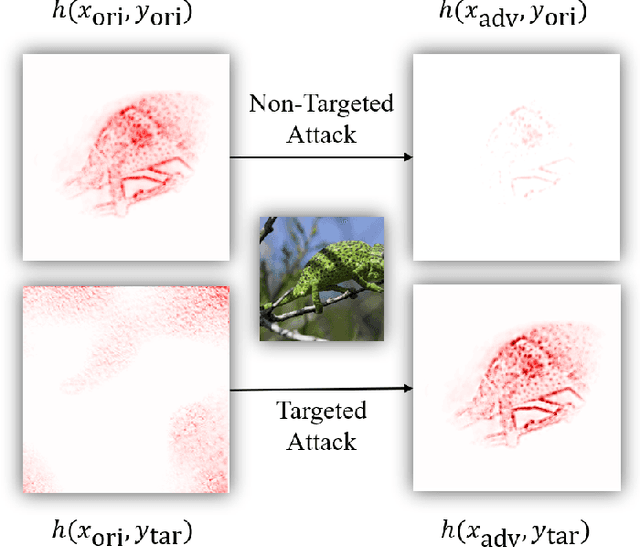

Adversarial attacks on deep neural networks (DNNs) have been known for several years. However, existing attacks achieve high success rates only when information about the attacked DNN is known or can be estimated through structural similarity or massive queries. In this paper, we propose *Attack on Attention* (AoA), which targets attention, a semantic feature commonly shared by DNNs. AoA is highly transferable. With no more than 10 decision-only queries, AoA achieves a success rate of almost 100% against many popular DNNs. Even without queries, AoA retains a surprisingly high attack performance. We apply AoA to generate 96020 adversarial samples from ImageNet that defeat many neural networks, and name the resulting dataset *DAmageNet*. We test 20 well-trained DNNs on DAmageNet; without adversarial training, most of them have an error rate over 90%. DAmageNet is the first universal adversarial dataset, and it could serve as a benchmark for robustness testing and adversarial training.
