UniMiB SHAR: a new dataset for human activity recognition using acceleration data from smartphones

Paper and Code

Aug 08, 2017

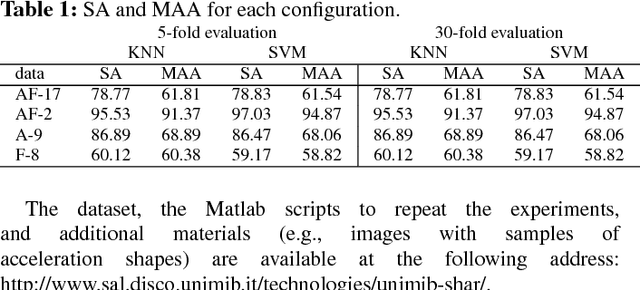

Smartphones, smartwatches, fitness trackers, and ad-hoc wearable devices are being increasingly used to monitor human activities. Data acquired by the hosted sensors are usually processed by machine-learning-based algorithms to classify human activities. The success of those algorithms mostly depends on the availability of training (labeled) data that, if made publicly available, would allow researchers to make objective comparisons between techniques. Nowadays, publicly available data sets are few, often contain samples from subjects with too similar characteristics, and very often lack of specific information so that is not possible to select subsets of samples according to specific criteria. In this article, we present a new dataset of acceleration samples acquired with an Android smartphone designed for human activity recognition and fall detection. The dataset includes 11,771 samples of both human activities and falls performed by 30 subjects of ages ranging from 18 to 60 years. Samples are divided in 17 fine grained classes grouped in two coarse grained classes: one containing samples of 9 types of activities of daily living (ADL) and the other containing samples of 8 types of falls. The dataset has been stored to include all the information useful to select samples according to different criteria, such as the type of ADL, the age, the gender, and so on. Finally, the dataset has been benchmarked with four different classifiers and with two different feature vectors. We evaluated four different classification tasks: fall vs no fall, 9 activities, 8 falls, 17 activities and falls. For each classification task we performed a subject-dependent and independent evaluation. The major findings of the evaluation are the following: i) it is more difficult to distinguish between types of falls than types of activities; ii) subject-dependent evaluation outperforms the subject-independent one

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge