Unified Distributed Environment

May 14, 2022

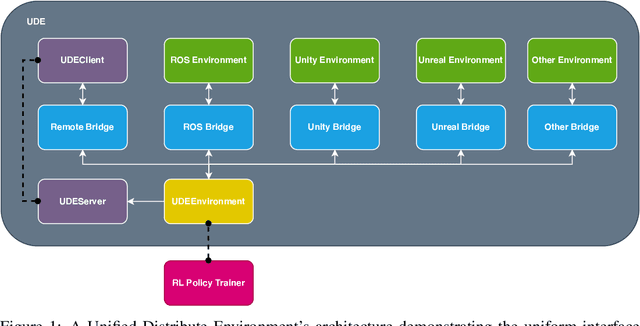

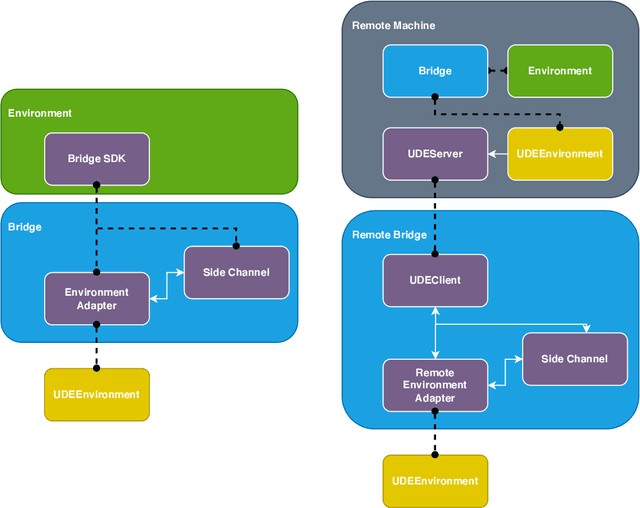

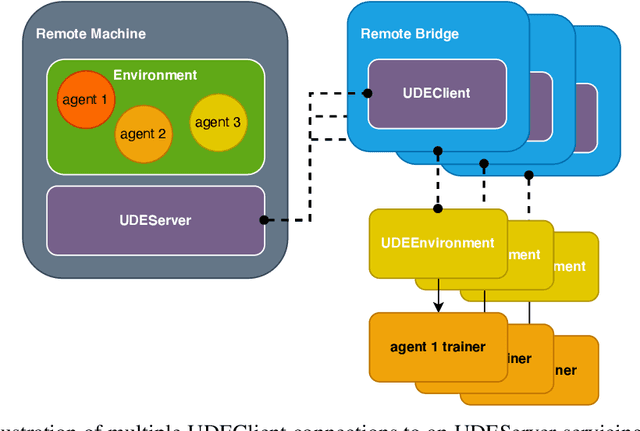

We propose the Unified Distributed Environment (UDE), an environment virtualization toolkit for reinforcement learning research. UDE is designed to integrate environments built on any simulation platform, such as Gazebo, Unity, Unreal, and OpenAI Gym. Through environment virtualization, UDE can offload an environment to a remote machine for execution while still presenting a unified interface. The UDE interface is designed to support multi-agent environments by default. Together, environment virtualization and this interface design allow agent policies for a multi-agent environment to be trained across multiple machines. Furthermore, UDE supports integration with existing major RL toolkits, so researchers can leverage their benefits. This paper discusses the components of UDE and its design decisions.
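To make the idea of environment virtualization concrete, the following is a minimal sketch and not UDE's actual API. It assumes a hypothetical multi-agent interface (`MultiAgentEnv`) in which observations, rewards, and done flags are dictionaries keyed by agent ID, plus a hypothetical proxy (`RemoteEnvProxy`) that forwards calls to a server object (`EnvServer`). All class and function names here are illustrative, and the network transport is replaced by an in-process function call with `pickle` serialization so the example runs on its own.

```python
import pickle
from abc import ABC, abstractmethod
from typing import Any, Callable, Dict, Tuple

AgentID = str
StepResult = Tuple[Dict[AgentID, Any], Dict[AgentID, float], Dict[AgentID, bool]]


class MultiAgentEnv(ABC):
    """Hypothetical unified interface: every value is keyed by agent ID."""

    @abstractmethod
    def reset(self) -> Dict[AgentID, Any]: ...

    @abstractmethod
    def step(self, actions: Dict[AgentID, Any]) -> StepResult: ...


class CounterEnv(MultiAgentEnv):
    """Toy simulator: each observation is the running sum of that agent's actions."""

    def __init__(self, agents, horizon=3):
        self.agents = list(agents)
        self.horizon = horizon
        self.reset()

    def reset(self):
        self.t = 0
        self.totals = {a: 0 for a in self.agents}
        return dict(self.totals)

    def step(self, actions):
        self.t += 1
        for agent, action in actions.items():
            self.totals[agent] += action
        obs = dict(self.totals)
        rewards = {a: float(actions.get(a, 0)) for a in self.agents}
        dones = {a: self.t >= self.horizon for a in self.agents}
        return obs, rewards, dones


class EnvServer:
    """Stand-in for the remote side: decodes a request and calls the real env."""

    def __init__(self, env: MultiAgentEnv):
        self.env = env

    def handle(self, payload: bytes) -> bytes:
        # pickle is used only for brevity; it is unsafe for untrusted peers.
        method, args = pickle.loads(payload)
        return pickle.dumps(getattr(self.env, method)(*args))


class RemoteEnvProxy(MultiAgentEnv):
    """Client side: same interface as a local env, but every call is serialized."""

    def __init__(self, send: Callable[[bytes], bytes]):
        self._send = send  # assumption: a real setup would send this over a network

    def _call(self, method, *args):
        return pickle.loads(self._send(pickle.dumps((method, args))))

    def reset(self):
        return self._call("reset")

    def step(self, actions):
        return self._call("step", actions)


def run_episode(env: MultiAgentEnv, policy) -> Dict[AgentID, float]:
    """Training-side loop that does not care where the environment runs."""
    obs = env.reset()
    returns = {a: 0.0 for a in obs}
    done = False
    while not done:
        actions = {a: policy(a, o) for a, o in obs.items()}
        obs, rewards, dones = env.step(actions)
        for a, r in rewards.items():
            returns[a] += r
        done = all(dones.values())
    return returns
```

Under these assumptions, `run_episode` behaves identically whether it receives a `CounterEnv` directly or a `RemoteEnvProxy` wrapping one, which is the property a unified interface is meant to provide. Because actions and results are keyed by agent ID, separate processes could in principle each supply the actions for a subset of agents.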
