Uncertainty Quantification in Extreme Learning Machine: Analytical Developments, Variance Estimates and Confidence Intervals


Nov 03, 2020

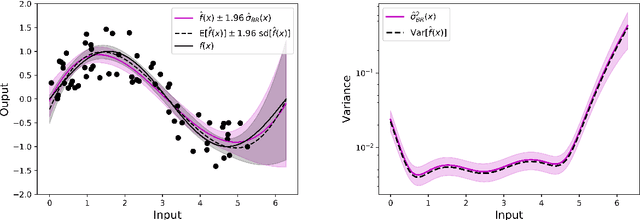

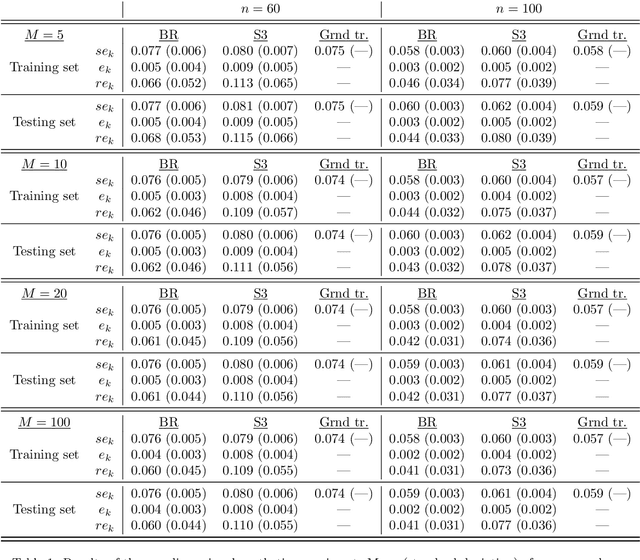

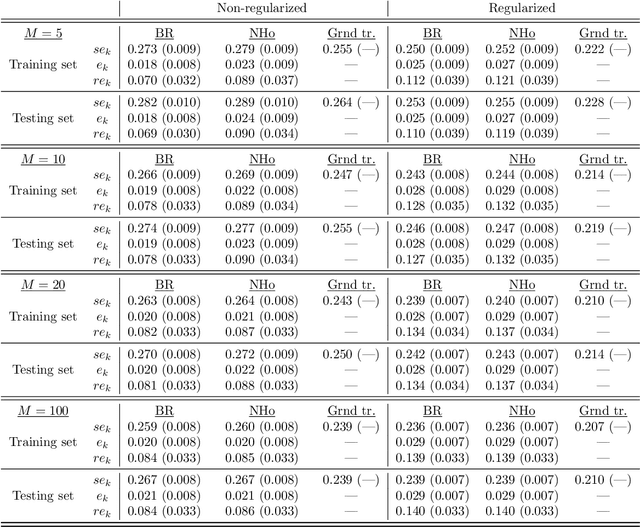

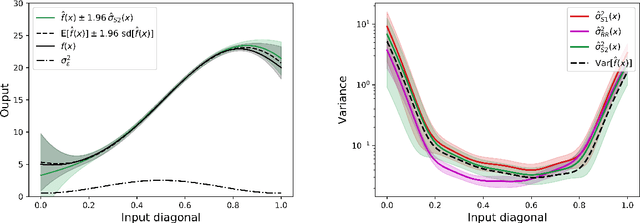

Uncertainty quantification is crucial for assessing the prediction quality of a machine learning model. In the case of Extreme Learning Machines (ELM), most methods proposed in the literature make strong assumptions about the data, ignore the randomness of the input weights, or neglect the contribution of the bias when estimating confidence intervals. This paper presents novel estimates that overcome these constraints and improve the understanding of ELM variability. Analytical derivations are provided under general assumptions, supporting the identification and interpretation of the contributions of the different sources of variability. Under both homoskedasticity and heteroskedasticity, several variance estimates are proposed, investigated, and numerically tested, showing their effectiveness in replicating the expected variance behaviours. Finally, the feasibility of confidence interval estimation is discussed critically, raising the awareness of ELM users of some of its pitfalls. The paper is accompanied by a scikit-learn compatible Python library enabling efficient computation of all estimates discussed herein.
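To make the role of the random input weights concrete, the following is a minimal NumPy sketch, not the paper's estimators and not the API of the accompanying library. It assumes a single-hidden-layer ELM with `tanh` activation, Gaussian input weights, and a small ridge term for numerical stability. All function names (`fit_elm`, `predict_elm`, `ensemble_predict`) and the toy data are hypothetical. It refits the ELM many times with fresh random input weights and reports the empirical spread of the predictions, which captures only the variability due to the input weights for a fixed training set.

```python
import numpy as np


def fit_elm(X, y, n_hidden, rng, ridge=1e-6):
    """Fit one ELM: random hidden layer, output weights by regularised least squares."""
    W = rng.normal(size=(X.shape[1], n_hidden))  # random input weights (never trained)
    b = rng.normal(size=n_hidden)                # random hidden biases
    H = np.tanh(X @ W + b)                       # hidden-layer feature matrix
    # Small ridge term is an assumption of this sketch, used to keep the solve stable.
    beta = np.linalg.solve(H.T @ H + ridge * np.eye(n_hidden), H.T @ y)
    return W, b, beta


def predict_elm(model, X):
    """Predict with a fitted ELM."""
    W, b, beta = model
    return np.tanh(X @ W + b) @ beta


def ensemble_predict(X_train, y_train, X_test, n_hidden=20, n_models=50, seed=0):
    """Refit with fresh random input weights and summarise the predictions."""
    rng = np.random.default_rng(seed)
    preds = np.stack([
        predict_elm(fit_elm(X_train, y_train, n_hidden, rng), X_test)
        for _ in range(n_models)
    ])
    # Mean prediction and empirical variance across input-weight draws.
    return preds.mean(axis=0), preds.var(axis=0, ddof=1)


# Toy 1-D regression problem (illustrative data only).
data_rng = np.random.default_rng(42)
X_train = data_rng.uniform(-3, 3, size=(200, 1))
y_train = np.sin(X_train[:, 0]) + data_rng.normal(scale=0.1, size=200)
X_test = np.linspace(-3, 3, 25).reshape(-1, 1)

mean_pred, var_pred = ensemble_predict(X_train, y_train, X_test)
```

This empirical variance ignores the noise in the training targets and any bias of the model, which are among the sources of variability the paper treats analytically, so it should not be read as a substitute for the proposed estimates.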
