Uncertainty-based method for improving poorly labeled segmentation datasets


Feb 16, 2021

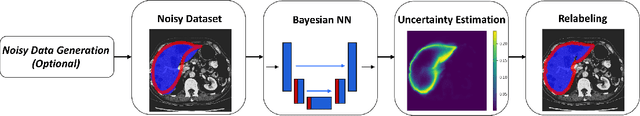

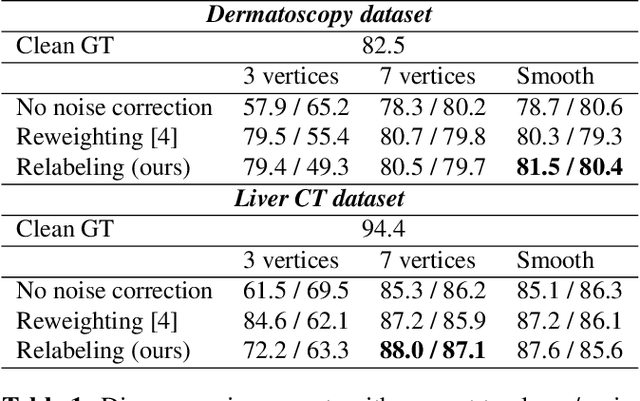

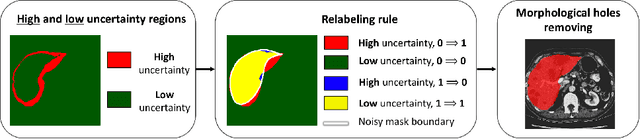

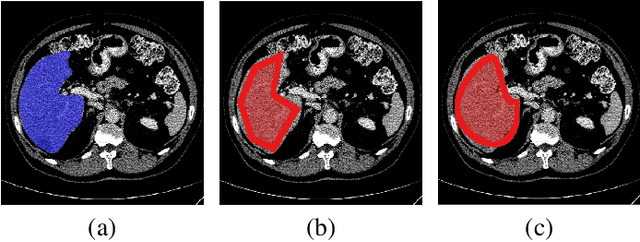

The success of modern deep learning algorithms for image segmentation depends heavily on the availability of large datasets with clean pixel-level annotations (masks), in which the objects of interest are accurately delineated. Lack of time and expertise during data annotation leads to incorrect boundaries and label noise. Deep convolutional neural networks (DCNNs) are known to be able to memorize even completely random labels, so training on noisy masks results in poor accuracy. We propose a framework to train binary segmentation DCNNs using sets of unreliable pixel-level annotations. Erroneously labeled pixels are identified based on the estimated aleatoric uncertainty of the segmentation and are relabeled to their true values.

* Accepted to ISBI 2021
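
The relabeling step described in the abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: it assumes a per-pixel aleatoric uncertainty map has already been estimated by some model, and it uses a hypothetical fixed `threshold` to decide which pixels count as erroneously labeled. Because the task is binary, relabeling a flagged pixel is taken here to mean flipping its label. The function name `relabel_noisy_mask` and its parameters are illustrative only.

```python
import numpy as np


def relabel_noisy_mask(noisy_mask, uncertainty, threshold):
    """Flip binary labels at pixels whose uncertainty exceeds a threshold.

    noisy_mask  : 2D array of 0/1 labels (the unreliable annotation).
    uncertainty : 2D array of per-pixel uncertainty estimates
                  (assumed to be produced elsewhere).
    threshold   : hypothetical cut-off; pixels above it are treated as
                  erroneously labeled.
    Returns the cleaned mask and the boolean map of flagged pixels.
    """
    noisy_mask = np.asarray(noisy_mask, dtype=np.uint8)
    uncertainty = np.asarray(uncertainty, dtype=np.float64)
    if noisy_mask.shape != uncertainty.shape:
        raise ValueError("mask and uncertainty map must have the same shape")

    # Flag pixels that are suspected to carry a wrong label.
    suspect = uncertainty > threshold

    # In the binary case, correcting a wrong label means flipping it.
    cleaned = np.where(suspect, 1 - noisy_mask, noisy_mask).astype(np.uint8)
    return cleaned, suspect


# Toy example: two pixels have high uncertainty and get flipped.
noisy = np.array([[0, 1, 1],
                  [0, 1, 0]], dtype=np.uint8)
unc = np.array([[0.10, 0.90, 0.20],
                [0.05, 0.30, 0.80]])
cleaned, suspect = relabel_noisy_mask(noisy, unc, threshold=0.5)
```

In a full training pipeline one would presumably alternate between training the network on the current masks and re-estimating the uncertainty, but the abstract does not specify that schedule, so it is left out of this sketch.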
