Ultradense Word Embeddings by Orthogonal Transformation

Paper and Code

May 08, 2016

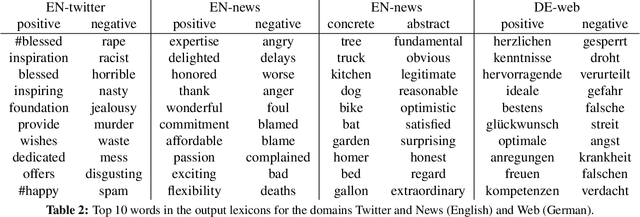

Embeddings are generic representations that are useful for many NLP tasks. In this paper, we introduce DENSIFIER, a method that learns an orthogonal transformation of the embedding space that focuses the information relevant for a task in an ultradense subspace of a dimensionality that is smaller by a factor of 100 than the original space. We show that ultradense embeddings generated by DENSIFIER reach state of the art on a lexicon creation task in which words are annotated with three types of lexical information - sentiment, concreteness and frequency. On the SemEval2015 10B sentiment analysis task we show that no information is lost when the ultradense subspace is used, but training is an order of magnitude more efficient due to the compactness of the ultradense space.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge