UltraBots: Large-Area Mid-Air Haptics for VR with Robotically Actuated Ultrasound Transducers

Oct 04, 2022

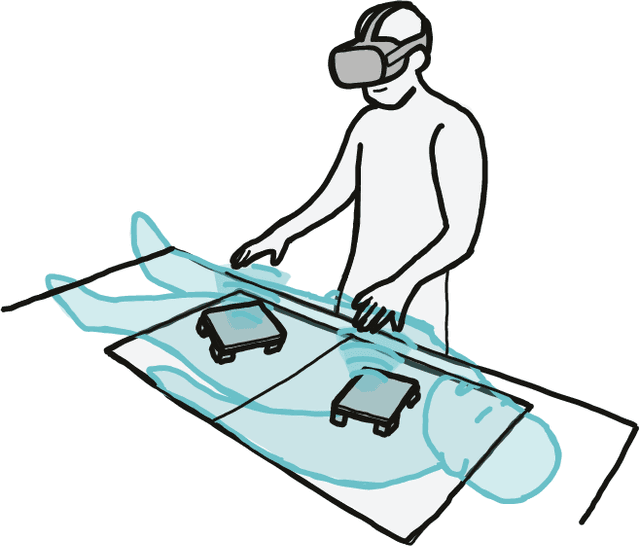

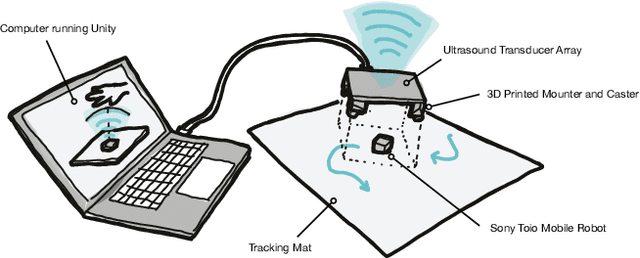

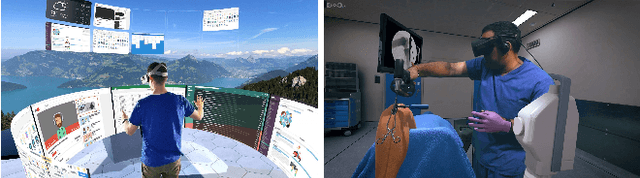

We introduce UltraBots, a system that combines ultrasound haptic feedback and robotic actuation for large-area mid-air haptics for VR. Ultrasound haptics can provide precise mid-air haptic feedback and versatile shape rendering, but the interaction area is often limited by the small size of the ultrasound devices, restricting the possible interactions for VR. To address this problem, this paper introduces a novel approach that combines robotic actuation with ultrasound haptics. More specifically, we will attach ultrasound transducer arrays to tabletop mobile robots or robotic arms for scalable, extendable, and translatable interaction areas. We plan to use Sony Toio robots for 2D translation and/or commercially available robotic arms for 3D translation. Using robotic actuation and hand tracking measured by a VR HMD (e.g., Oculus Quest), our system can keep the ultrasound transducers underneath the user's hands to provide on-demand haptics. We demonstrate applications with workspace environments, medical training, education and entertainment.
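The hand-following behaviour described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `step_toward_hand`, the metre units, the `max_step` and `deadband` parameters, and the assumption that hand and robot positions share one table-aligned coordinate frame are all hypothetical choices made for illustration. The idea is simply to project the tracked hand position onto the table plane and move a 2D robot toward it with a bounded step per control tick.

```python
import math


def step_toward_hand(robot_xy, hand_xyz, max_step=0.02, deadband=0.005):
    """Return the robot's next (x, y) target on the table plane.

    robot_xy : current robot position (x, y) in metres (assumed frame).
    hand_xyz : tracked hand position (x, y, z) in metres; z is the height
               above the table and is ignored for 2D translation.
    max_step : hypothetical limit on travel per control tick, in metres.
    deadband : hypothetical radius within which the robot stays put,
               so small tracking jitter does not cause constant motion.
    """
    dx = hand_xyz[0] - robot_xy[0]
    dy = hand_xyz[1] - robot_xy[1]
    dist = math.hypot(dx, dy)

    # Close enough: keep the transducer array where it is.
    if dist <= deadband:
        return robot_xy

    # Move along the straight line to the hand, capped at max_step.
    scale = min(max_step, dist) / dist
    return (robot_xy[0] + dx * scale, robot_xy[1] + dy * scale)


def follow(robot_xy, hand_xyz, ticks):
    """Apply step_toward_hand repeatedly for a fixed hand position."""
    for _ in range(ticks):
        robot_xy = step_toward_hand(robot_xy, hand_xyz)
    return robot_xy
```

In a real system the returned target would be sent to the robot's own motion interface and the hand position would be refreshed from the headset's tracking on every tick; both of those interfaces are outside the scope of this sketch.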
